Powerful Video Creation on a Mid-Level Laptop Using WAN 2.1 Locally (1.3B Model)

Introduction

Think you need a high-end PC to create stunning AI-generated videos locally on your own system?

If you’re a content creator or indie developer stuck with a mid-level laptop, you’ve probably felt the frustration of lagging tools, long render times, or simply being left out of the AI video revolution. But here’s the game-changer: WAN 2.1 (1.3B model) is proving that powerful text-to-video generation isn’t just for those with monster GPUs. With the right setup, even a modest laptop with a decent GPU can start producing impressive, creative visuals for games, stories, or YouTube content.

In this post, you’ll learn exactly how WAN 2.1 works, what makes it efficient on mid-tier hardware, and how you can use it to generate high-quality game footage or animations—without upgrading your entire rig.

Table of Contents

- What is WAN 2.1 (1.3B Model)?
  - Overview of the Model
  - Key Features & Capabilities
  - Text-to-Video Explained
- System Requirements & Optimal Setup
  - Minimum Hardware Needed
  - Ideal GPU Specs for Smooth Performance
- Installing and Running WAN 2.1 on a Mid-Level Laptop
  - Step-by-Step Installation Guide
  - Troubleshooting Common Issues
- Creating High-Quality Videos: Workflow Guide
  - Writing Effective Prompts for Video Generation
  - Speed vs. Quality: Performance Settings Explained
- Use Cases for Gamers and Content Creators
  - YouTube Shorts & Social Media Clips
  - Concept Prototypes for Indie Developers
- Performance Benchmarks on Mid-Range GPUs
  - Real Test Results on GTX/RTX Series Laptops
- Tips to Maximize Output with Limited Resources
  - GPU Optimization Tricks
  - Managing Thermal Throttling on Laptops
  - Using Cloud-Assists or Hybrid Processing
- Future Potential of WAN 2.1 for Everyday Creators
  - Upcoming Features
  - How It Compares to Heavier Models
  - Scalability for Pro-Level Use
- Conclusion
  - Summary of Key Takeaways
  - Final Thoughts for Mid-Level Creators
  - Where to Go Next

What is WAN 2.1 (1.3B Model)?

WAN 2.1 (1.3B Model) is a lightweight, AI-powered text-to-image and video generation model developed by Alibaba. With 1.3 billion parameters, it offers a strong balance of speed and visual quality and is small enough to run on mid-level laptops with a dedicated GPU. This makes it a good fit for gamers, digital artists, and content creators who want to generate high-quality visuals, such as game scenes, animations, or storyboards, without needing a high-end machine.

Key Features & Capabilities of WAN 2.1 (1.3B Model)

💡 Lightweight Design (1.3B Parameters)

WAN 2.1 is optimized for performance with just 1.3 billion parameters—delivering high-quality image and video outputs without overwhelming your system, making it ideal for mid-range GPUs and laptops.

🖼️ Text-to-Image & Video Generation

Transforms written prompts into detailed images or short video clips, useful for gaming visuals, animation drafts, or creative content generation.

⚙️ Runs on Mid-Tier Hardware

Unlike larger models that require high-end GPUs or cloud access, WAN 2.1 can run locally on consumer laptops with NVIDIA GTX/RTX graphics cards, offering creative power on a budget.

🚀 Fast Inference Speed

Thanks to its compact size, the 1.3B model generates clips much faster than its larger 14B sibling in the WAN 2.1 family, which makes it well suited to quick iterations, prototyping, and fast-paced creative workflows.

🎮 Game Asset Creation Ready

Perfect for generating game concept art, NPC avatars, environmental assets, and animated cutscene drafts—especially helpful for indie developers and modders.

📐 Prompt-Responsive Generation

WAN 2.1 supports detailed prompts and offers a high degree of control over output style, object positioning, and scene composition, enabling precise visual storytelling.

📦 Open-Source Accessibility

Released as open source by Alibaba under the Apache 2.0 license, making it easy to integrate into custom pipelines, modding tools, or creative apps.

🌍 Multilingual Prompt Support

Supports multiple languages for text input, allowing global creators to work in their native language with consistent visual results.

🔄 Video Consistency Across Frames

While lightweight, WAN 2.1 includes basic frame-to-frame coherence, allowing for short, smooth animated clips with minimal artifacts.

🛠 Developer-Friendly APIs

Offers integration flexibility for developers building game tools, design platforms, or AI-enhanced creative workflows.

🎞️ Text-to-Video Explained (WAN 2.1 – 1.3B Model)

Text-to-video generation is the process of converting written descriptions into short animated clips. With WAN 2.1, you can enter a simple or detailed prompt such as “a futuristic city skyline at sunset”, and the model will generate a sequence of frames that plays back as video, bringing your idea to life without any manual animation. You can also try it online.

🔤 How It Works:

- The model breaks down your text into visual elements.

- It then generates multiple frames in a consistent sequence, trying to maintain visual coherence over time.

- The output is usually a short clip (e.g., 2–4 seconds), ideal for concept demos, cutscenes, or storyboarding.
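Under the hood, clip length is really a frame count. The sketch below converts between seconds and frames. It assumes two details about WAN 2.1 that this guide does not state: playback at 16 fps, and a frame count of the form 4k + 1 (the model's video compression keeps the first frame and then groups the rest in fours). Adjust `FPS` if your workflow saves video at a different rate.

```python
import math

# Assumed WAN 2.1 playback rate; change this if your workflow saves at another fps.
FPS = 16


def frames_for_seconds(seconds: float, fps: int = FPS) -> int:
    """Smallest valid frame count (of the form 4k + 1) covering `seconds` of video."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    needed = seconds * fps
    # Round up to the next value of the form 4k + 1 (assumed model constraint).
    k = max(0, math.ceil((needed - 1) / 4))
    return 4 * k + 1


def seconds_for_frames(frames: int, fps: int = FPS) -> float:
    """Duration of a clip with `frames` frames played back at `fps`."""
    if frames < 1 or (frames - 1) % 4 != 0:
        raise ValueError("frame count must be of the form 4k + 1")
    return frames / fps


if __name__ == "__main__":
    for secs in (2, 3, 4, 5):
        n = frames_for_seconds(secs)
        print(f"{secs} s -> {n} frames ({seconds_for_frames(n):.2f} s)")
```

Under these assumptions, 2 seconds maps to 33 frames and 5 seconds to 81 frames, which lines up with the short 2 to 5 second clips this model is typically used for.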

🖼️ Image-to-Video: Add Motion to Static Visuals

WAN 2.1 also supports image-to-video generation, where you can feed a static image (e.g., concept art or character portrait), and the model attempts to animate it by predicting motion or transitions across frames.

⏳ Performance Note:

While this feature is powerful, image-to-video generation can be slow, especially on mid-range laptops. Because the model has to analyze fine visual details and simulate believable motion across frames, the process can:

- Take several minutes per clip, depending on resolution and GPU power.

- Be resource-intensive, sometimes requiring memory management or GPU throttling on laptops.

🧱 Minimum Hardware Needed (to Run WAN 2.1)

If you’re looking to simply run the model (text-to-image or basic video generation), here’s the bare minimum:

- CPU: Intel i5 (8th Gen or newer) / AMD Ryzen 5 or better

- RAM: 8 GB (16 GB strongly recommended)

- GPU:
  - NVIDIA GTX 1650 / 1660 (4GB VRAM minimum)
  - Or AMD Radeon RX 560 or better

- Storage: 10–15 GB free (for model files, output, and dependencies)

- OS: Windows 10/11, Linux, or macOS (M-series with limitations)

⚠️ On minimum hardware, expect slower generation times (especially for video), higher VRAM usage, and limited resolution.

🚀 Ideal GPU Specs for Smooth Performance

To get fast, smooth, and reliable results, especially for text-to-video and image-to-video tasks, aim for:

- GPU:
  - NVIDIA RTX 3060 / 4060 (Laptop or Desktop)
  - 6GB–8GB VRAM (8GB preferred for video generation)

- CPU: Intel i7 / Ryzen 7 (or Apple M1 Pro/M2 chip with workarounds)

- RAM: 16–32 GB

- SSD: NVMe recommended for fast loading and caching

- Cooling: External cooling or laptop fan base (for longer sessions)

🧠 Tip: WAN 2.1 is efficient, but text-to-video tasks still demand solid VRAM. Avoid multitasking while generating to prevent memory crashes.
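To see why resolution and frame count weigh so heavily on VRAM, it helps to estimate the size of the latent video the model actually works on. The sketch below rests on assumptions about WAN 2.1's design that are not stated in this guide: a VAE that shrinks width and height by 8x and time by 4x (keeping the first frame) into 16 channels, and a transformer with full self-attention over 1×2×2 latent patches. Treat the output as rough relative cost, not a measurement.

```python
# Rough workload estimate for a WAN 2.1-style video diffusion model.
# Assumed (not from this guide): 8x spatial / 4x temporal VAE compression,
# 16 latent channels, 1x2x2 transformer patches, full self-attention.

LATENT_CHANNELS = 16


def latent_shape(width: int, height: int, frames: int) -> tuple:
    """Return (channels, latent_frames, latent_height, latent_width)."""
    return (LATENT_CHANNELS, (frames - 1) // 4 + 1, height // 8, width // 8)


def token_count(width: int, height: int, frames: int) -> int:
    """Transformer tokens after splitting the latent into 1x2x2 patches."""
    _, t, h, w = latent_shape(width, height, frames)
    return t * (h // 2) * (w // 2)


if __name__ == "__main__":
    baseline = token_count(512, 512, 17)
    for width, height, frames in [(512, 512, 17), (832, 480, 33), (832, 480, 81)]:
        tokens = token_count(width, height, frames)
        # Full self-attention cost grows with the square of the token count.
        relative = (tokens / baseline) ** 2
        print(f"{width}x{height}, {frames} frames: latent "
              f"{latent_shape(width, height, frames)}, {tokens} tokens, "
              f"~{relative:.1f}x attention cost vs. 512x512 / 17 frames")
```

Under these assumptions, moving from 512×512 with 17 frames (5,120 tokens) to 832×480 with 81 frames (32,760 tokens) multiplies the token count by about 6.4 and the attention cost by roughly 41, which is why small resolution and frame-count reductions pay off so much on laptop GPUs.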

🖼️ Installing and Running WAN 2.1 on a Mid-Level Laptop Using ComfyUI

✅ What is ComfyUI?

ComfyUI is a powerful, modular graph-based UI for running and experimenting with diffusion models (including text-to-image and video generation) locally. It’s highly optimized and user-friendly, especially when dealing with custom workflows like WAN 2.1.

🔧 System Requirements Recap

- GPU: NVIDIA GTX 1650 / RTX 2060 or better (4–8 GB VRAM)

- RAM: 16 GB recommended

- OS: Windows/Linux

- Python 3.10 + CUDA/cuDNN (matching your PyTorch version)
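As a quick self-check, the sketch below compares a machine against the thresholds quoted in this guide's requirement lists (4 GB VRAM minimum, 8 GB preferred, 16 GB RAM recommended, and Python 3.10, treated here as a minimum version). The VRAM probe uses PyTorch's `torch.cuda` API, which ComfyUI depends on, and returns `None` when PyTorch or a CUDA GPU is not available. RAM has to be entered by hand; the value in the usage block is a hypothetical placeholder.

```python
import sys

# Thresholds copied from this guide's requirement lists.
MIN_VRAM_GB = 4
PREFERRED_VRAM_GB = 8
RECOMMENDED_RAM_GB = 16
MIN_PYTHON = (3, 10)  # the guide says "Python 3.10"; treated as a minimum here


def assess_setup(vram_gb, ram_gb, python=tuple(sys.version_info[:2])) -> list:
    """Return warnings about the setup; an empty list means no concerns."""
    warnings = []
    if vram_gb is None:
        warnings.append("VRAM could not be detected (no PyTorch or CUDA GPU found)")
    elif vram_gb < MIN_VRAM_GB:
        warnings.append(f"{vram_gb:.1f} GB VRAM is below the {MIN_VRAM_GB} GB minimum")
    elif vram_gb < PREFERRED_VRAM_GB:
        warnings.append(f"{vram_gb:.1f} GB VRAM works, but keep resolution and frame count low")
    if ram_gb < RECOMMENDED_RAM_GB:
        warnings.append(f"{ram_gb:.1f} GB RAM is below the recommended {RECOMMENDED_RAM_GB} GB")
    if tuple(python) < MIN_PYTHON:
        warnings.append(f"Python {python[0]}.{python[1]} is older than 3.10")
    return warnings


def detect_vram_gb():
    """Total VRAM of the first CUDA GPU in GB, or None if unavailable."""
    try:
        import torch  # ComfyUI depends on PyTorch, so it is usually present
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / 1024**3


if __name__ == "__main__":
    MY_RAM_GB = 16  # hypothetical value: replace with your laptop's RAM
    for warning in assess_setup(detect_vram_gb(), MY_RAM_GB):
        print("-", warning)
```

Keeping the thresholds as named constants makes it easy to tighten them (for example, raising the VRAM floor to 8 GB) if you mainly plan to generate video rather than single images.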

Step 1: Download ComfyUI

✅ Pre-Built Package (Windows Only)

If you’re using a Windows system with an NVIDIA GPU, you can download the pre-built package:

- Download Link: ComfyUI Windows Pre-Built Package

Installation Steps:

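- Extract the downloaded archive (it is a .7z file, so use a tool such as 7-Zip), open the extracted folder, and double-click run_nvidia_gpu.bat to start ComfyUI.

- Once it has launched, open your browser and go to http://127.0.0.1:8188 to access the ComfyUI interface.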
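If the page does not load, first confirm whether the server is actually running. This small standard-library sketch sends one HTTP request to the default address http://127.0.0.1:8188 and reports whether anything answered. The function name and timeout are illustrative choices, not part of ComfyUI.

```python
import urllib.error
import urllib.request


def comfyui_is_up(url: str = "http://127.0.0.1:8188", timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at `url` with a non-error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, HTTP error status, or a malformed URL.
        return False


if __name__ == "__main__":
    print("ComfyUI reachable:", comfyui_is_up())
```

If this prints False, check the console window that run_nvidia_gpu.bat opened for error messages before troubleshooting the browser.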

Step 2: Download the Model and Supporting Files from Hugging Face

🧩 Required Components for ComfyUI Integration

To integrate WAN 2.1 into ComfyUI, you’ll need several components. Download these files to your system:

- WAN 2.1 Model: Wan2_1-T2V-1_3B_fp32.safetensors (from the Kijai/WanVideo_comfy repository)

- Text Encoder: umt5-xxl-enc-fp8_e4m3fn.safetensors (from the Kijai/WanVideo_comfy repository)

- VAE Model: from the Comfy-Org/Wan_2.1_ComfyUI_repackaged repository

- CLIP Vision Model: from the Comfy-Org/Wan_2.1_ComfyUI_repackaged repository

Step 3: Place the Models in the Correct Folders

After downloading the models, place them in the appropriate directories within your ComfyUI installation:

- WAN 2.1 Model: ComfyUI/models/diffusion_models/Wan2_1-T2V-1_3B_fp32.safetensors

- Text Encoder: ComfyUI/models/text_encoders/umt5-xxl-enc-fp8_e4m3fn.safetensors

- VAE Model: ComfyUI/models/vae/Vae

- CLIP Vision Model: ComfyUI/models/clip_vision/clip_vision_h

For a comprehensive guide on setting up WAN 2.1 in ComfyUI, refer to the ComfyUI WAN 2.1 Integration Guide.
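Before restarting, a quick way to rule out misplaced files is to check the folders from Step 3 programmatically. In the sketch below, the WAN 2.1 model and text encoder names come from this guide; the VAE and CLIP Vision entries use wildcard patterns (an assumption), because the file you download may be named differently. The default "ComfyUI" path is a hypothetical placeholder for your own installation folder.

```python
import sys
from pathlib import Path

# Folder -> file name pattern, based on Step 3. The VAE and CLIP Vision
# patterns are assumptions, since exact file names depend on your download.
EXPECTED_FILES = {
    "diffusion_models": "Wan2_1-T2V-1_3B_fp32.safetensors",
    "text_encoders": "umt5-xxl-enc-fp8_e4m3fn.safetensors",
    "vae": "*.safetensors",
    "clip_vision": "clip_vision_h*",
}


def missing_models(comfy_root) -> list:
    """Return the expected model locations that contain no matching file."""
    models_dir = Path(comfy_root) / "models"
    missing = []
    for folder, pattern in EXPECTED_FILES.items():
        target = models_dir / folder
        found = target.is_dir() and any(p.is_file() for p in target.glob(pattern))
        if not found:
            missing.append(f"models/{folder}/{pattern}")
    return missing


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"  # hypothetical default
    problems = missing_models(root)
    print("All model files found." if not problems else "Missing:\n" + "\n".join(problems))
```

An empty result means every expected folder contains a matching file; anything listed is either missing or sitting in the wrong directory.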

Step 4: Restart ComfyUI

Close the ComfyUI interface and its console window, then start ComfyUI again so it detects the newly added model files.

Step 5: Download the Workflow and Load It in ComfyUI

Download the workflow .json file for text-to-video or image-to-video, then drag and drop the .json file onto the ComfyUI interface to load it.

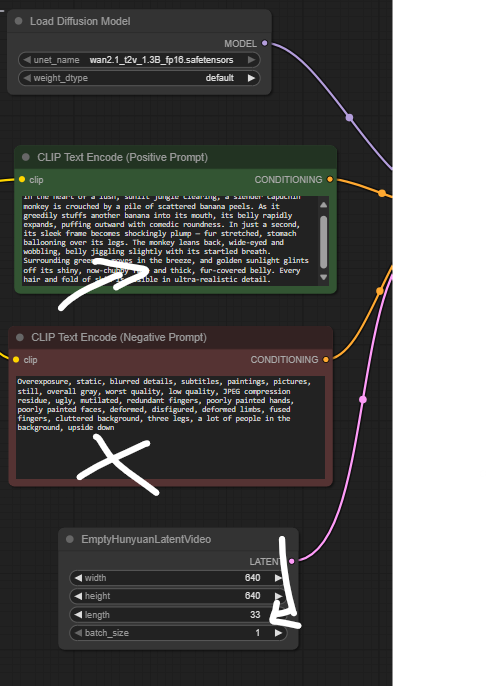

Step 6: Enter Prompt and Generate Video

Enter your desired prompt in the text prompt node, then queue the workflow to generate the video.
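One way to keep prompt experiments organized is to build each prompt from separate parts, so you can change a single element (for example, the camera move) between runs and compare results. The helper and its part names below are a hypothetical convention for illustration only; WAN 2.1 just receives the final text string.

```python
def build_prompt(subject: str, action: str, setting: str,
                 style: str = "", camera: str = "") -> str:
    """Join prompt parts into one comma-separated text-to-video prompt."""
    # Hypothetical ordering: subject + action first, then scene, look, motion.
    parts = [f"{subject.strip()} {action.strip()}".strip(), setting, style, camera]
    return ", ".join(p.strip() for p in parts if p and p.strip())


if __name__ == "__main__":
    base = dict(subject="a lone knight", action="walking through falling snow",
                setting="a ruined castle courtyard at dusk",
                style="cinematic lighting, muted colors")
    # Vary only the camera move between runs to compare results.
    for camera in ("static wide shot", "slow dolly-in", "low-angle tracking shot"):
        print(build_prompt(**base, camera=camera))
```

Changing one part at a time makes it easier to tell which wording actually affected the output.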

🚀 Performance Tips for Mid-Level Laptops

- Keep resolution at 512×512 for smoother runs

- Use 16 frames max for video unless you have 8GB+ VRAM

- Select the matching model file in each loader node, then enter your prompt text

- To make the video longer, change the length property of the EmptyHunyuanLatentVideo node (its width and height properties control the resolution); raising the length takes you from about 2 seconds up to a maximum of about 5 seconds of video

- Leave the negative prompt at its default value
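If you switch between clip lengths often, you can also edit the saved workflow file directly instead of clicking through the node. This sketch assumes ComfyUI's UI-format workflow JSON, where each EmptyHunyuanLatentVideo node keeps [width, height, length, batch_size] in its widgets_values list, that length must be of the form 4k + 1, and that width and height move in 16-pixel steps. Open your own workflow file and confirm that layout before relying on it.

```python
import json
from pathlib import Path

# Assumption: UI-format workflow JSON, where each EmptyHunyuanLatentVideo node
# stores widgets_values = [width, height, length, batch_size].


def set_latent_video(workflow: dict, width: int, height: int, length: int) -> int:
    """Update every EmptyHunyuanLatentVideo node; return how many were changed."""
    if length < 1 or (length - 1) % 4 != 0:
        raise ValueError("length must be of the form 4k + 1, e.g. 33 or 81")
    if width % 16 or height % 16:
        # Assumed: the node's width/height widgets step in units of 16 pixels.
        raise ValueError("width and height should be multiples of 16")
    changed = 0
    for node in workflow.get("nodes", []):
        if node.get("type") == "EmptyHunyuanLatentVideo":
            values = node["widgets_values"]
            values[0], values[1], values[2] = width, height, length
            changed += 1
    return changed


def patch_workflow_file(src, dst, width=832, height=480, length=81):
    """Write a copy of the workflow at `src` with new latent video settings."""
    workflow = json.loads(Path(src).read_text(encoding="utf-8"))
    if set_latent_video(workflow, width, height, length) == 0:
        raise ValueError("no EmptyHunyuanLatentVideo node found in workflow")
    Path(dst).write_text(json.dumps(workflow, indent=2), encoding="utf-8")
```

For example, patch_workflow_file("text_to_video.json", "text_to_video_5s.json") would write a copy set to 832×480 and 81 frames; both file names are placeholders, and the default sizes are example values. You would then drag the new file into ComfyUI as in Step 5.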

🏁 Conclusion

WAN 2.1 (1.3B Model) is a breath of fresh air for creators working with limited hardware. It proves that high-quality, AI-powered video generation is no longer the exclusive domain of those with high-end GPUs or expensive cloud setups. Whether you’re a solo game developer, digital storyteller, or YouTuber with a mid-range laptop, WAN 2.1 offers a realistic and powerful tool to bring your ideas to life.

By leveraging its lightweight design, efficient performance, and ComfyUI integration, you can turn simple prompts into animated visuals—without breaking the bank or melting your system. While it’s not without limitations, smart optimization and a clear workflow make it very achievable to produce compelling content right from your desk.

So if you’ve been on the sidelines of the AI video wave because of hardware constraints, now’s your time to dive in. Explore, experiment, and unleash your creativity—your laptop is more capable than you think.

Have questions or need help setting it up on your own laptop?

Feel free to ask in the comments or reach out—I’m happy to help you get started with WAN 2.1 and unlock your creative potential!