🧩 PartCrafter Explained: How Latent Diffusion Transformers Are Shaping Multi-Part 3D Mesh Creation from Images

🧠 Introduction: The Evolution of 3D Mesh Generation

The realm of 3D modeling has witnessed significant advancements over the past few years, transitioning from manual, labor-intensive processes to more automated and intelligent systems. Traditional methods of 3D mesh generation often involved complex workflows, including manual segmentation, meshing, and texturing. These processes were not only time-consuming but also required a high level of expertise.

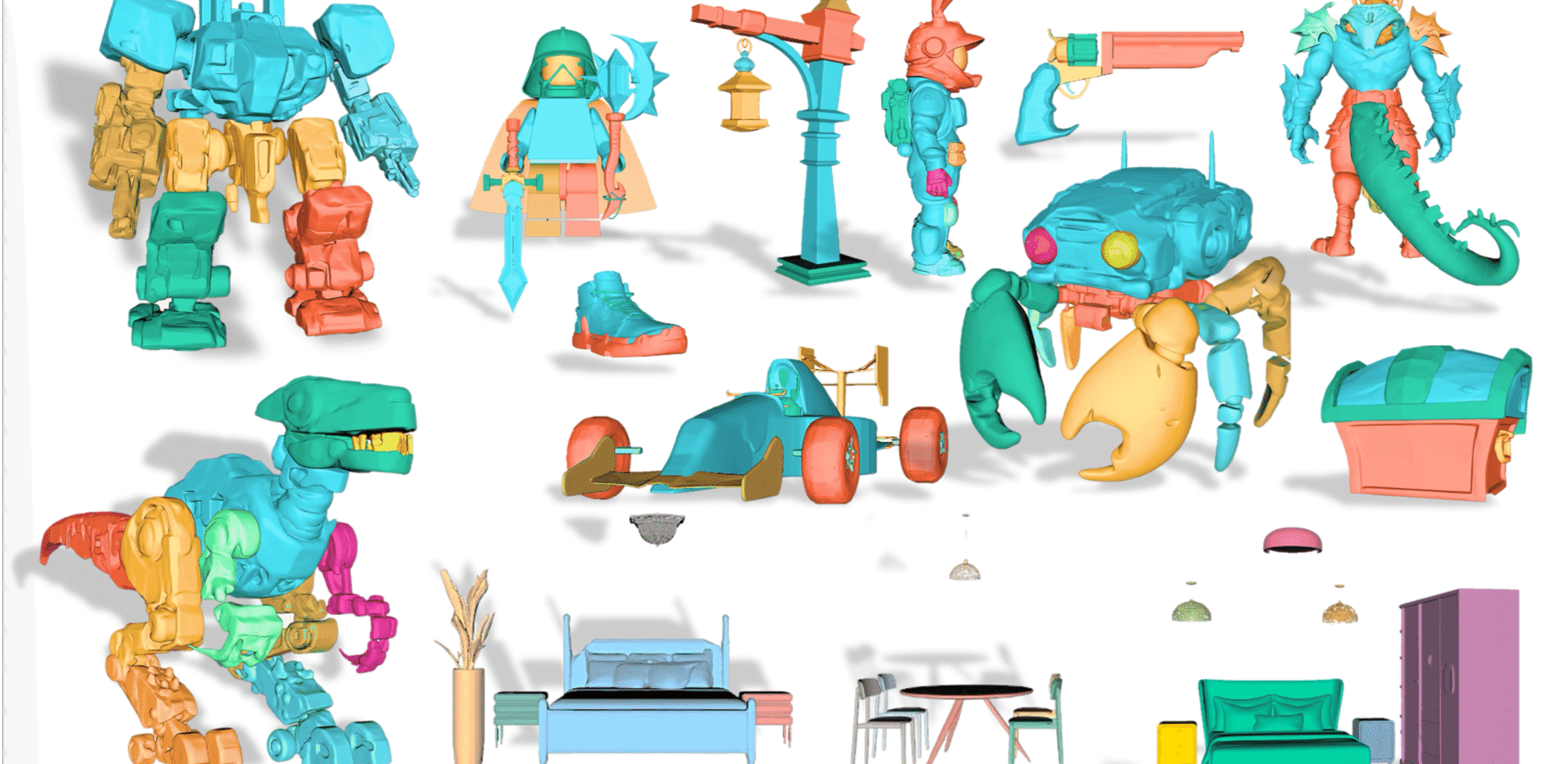

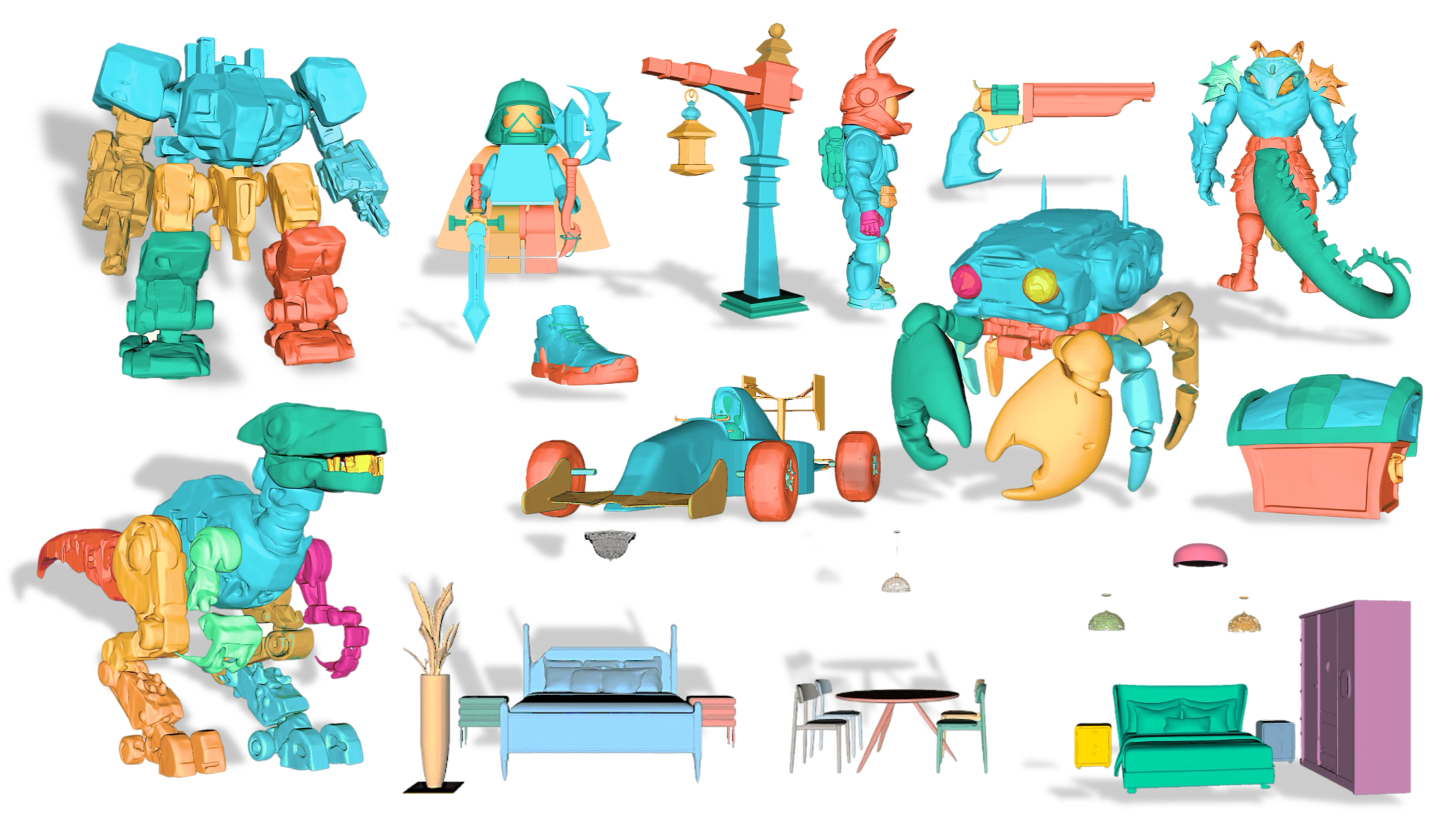

Enter PartCrafter, a groundbreaking model introduced by Lin et al. in June 2025. PartCrafter revolutionizes the 3D modeling landscape by enabling the generation of structured 3D meshes directly from a single RGB image. Unlike its predecessors, which either produced monolithic 3D shapes or relied on two-stage pipelines (segmentation followed by reconstruction), PartCrafter adopts a unified, compositional generation architecture. This approach eliminates the need for pre-segmented inputs, allowing for the simultaneous denoising of multiple 3D parts conditioned on a single image.

At its core, PartCrafter leverages the power of Latent Diffusion Transformers (LDTs), a class of generative models that operate in a compressed latent space. This design choice significantly reduces computational requirements while maintaining high-quality outputs. By employing a diffusion process in the latent space, PartCrafter can iteratively refine the 3D mesh, ensuring both global coherence and local detail.
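To make "iteratively refine in latent space" a bit more concrete, here is a toy NumPy sketch of a denoising loop. It is not PartCrafter's sampler: `toy_denoiser`, the step schedule, and the latent shape are all assumptions chosen for illustration, and a real model would replace `toy_denoiser` with a trained, image-conditioned transformer.

```python
import numpy as np

def toy_denoiser(latent, t, target):
    # Hypothetical stand-in for a trained network. It "predicts" the
    # residual between the current latent and a known clean target.
    # The timestep t is accepted only to mirror a typical denoiser signature.
    return latent - target

def sample_latent(target, num_steps=50, seed=0):
    rng = np.random.default_rng(seed)
    latent = rng.standard_normal(target.shape)  # start from pure noise
    for step in range(num_steps):
        t = 1.0 - step / num_steps  # runs from 1.0 down towards 0.0
        residual = toy_denoiser(latent, t, target)
        # Remove a growing fraction of the residual; the final step
        # (fraction 1/1) removes whatever is left.
        latent = latent - residual / (num_steps - step)
    return latent

clean = np.arange(6.0).reshape(2, 3)      # pretend "clean" latent
refined = sample_latent(clean, num_steps=10)
print(np.allclose(refined, clean))
```

The point of the sketch is only the control flow: start from noise, ask a denoiser what to remove, and take many small steps instead of one large one.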

One of the standout features of PartCrafter is its ability to generate semantically meaningful and geometrically distinct parts from a single image. This capability is particularly beneficial for applications in gaming, virtual reality, and 3D printing, where assets often consist of multiple components that need to be manipulated independently.

In the following sections, we will delve deeper into the technical innovations behind PartCrafter, explore its applications, and discuss its potential impact on the future of 3D mesh generation.

🔧 Key Innovations of PartCrafter

PartCrafter introduces two groundbreaking innovations that set it apart in the realm of 3D mesh generation:

1. Compositional Latent Space

Traditional 3D generative models often treat objects as monolithic entities, lacking the granularity needed for detailed manipulation. PartCrafter addresses this by employing a compositional latent space, where each 3D part is represented by a set of disentangled latent tokens. This approach ensures clear semantics and editing flexibility between parts, allowing for:

- Independent Manipulation: Each part can be modified or replaced without affecting others.

- Enhanced Semantics: Latent tokens capture the inherent structure and function of each part.

- Improved Editing: Facilitates precise adjustments and refinements to individual components.

This innovation enables the generation of semantically meaningful and geometrically distinct parts from a single image, enhancing the capabilities of AI-driven 3D modeling.
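One way to picture a compositional latent space is as a tensor with an explicit part axis. The sketch below is an illustration under assumed sizes, not the authors' code: `NUM_PARTS`, `TOKENS_PER_PART`, `DIM`, and the random "part identity" embedding are all hypothetical (in a trained model such an embedding would be learned).

```python
import numpy as np

NUM_PARTS, TOKENS_PER_PART, DIM = 3, 4, 8  # hypothetical sizes

rng = np.random.default_rng(0)
# One set of latent tokens per part: shape (parts, tokens, channels).
part_latents = rng.standard_normal((NUM_PARTS, TOKENS_PER_PART, DIM))
# One identity vector per part, random here purely for illustration.
part_id_embedding = rng.standard_normal((NUM_PARTS, 1, DIM))

def tag_with_part_identity(latents, id_embedding):
    # Broadcasting adds the same identity vector to every token of a part.
    return latents + id_embedding

def replace_part(latents, index, new_tokens):
    # Swap the tokens of one part and leave every other part untouched.
    edited = latents.copy()
    edited[index] = new_tokens
    return edited

tagged = tag_with_part_identity(part_latents, part_id_embedding)
edited = replace_part(part_latents, 1, np.zeros((TOKENS_PER_PART, DIM)))
print(tagged.shape, np.array_equal(edited[0], part_latents[0]))
```

Because each part owns its own slice of the tensor, editing part 1 cannot disturb parts 0 or 2, which is the "independent manipulation" property in miniature.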

2. Hierarchical Attention Mechanism

To maintain coherence and detail across multiple parts, PartCrafter integrates a hierarchical attention mechanism. This mechanism processes information flow both within individual parts and across all parts simultaneously, ensuring:

- Global Coherence: Maintains consistency in the overall structure and appearance.

- Local Detail Preservation: Ensures intricate details are retained within each part.

- Efficient Processing: Balances computational efficiency with high-quality output.

By enabling structured information flow, this mechanism helps keep the generated 3D model globally consistent and locally detailed, even for parts that are not directly visible in the input image.
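The local-then-global pattern can be sketched in a few lines of NumPy. This is a simplified illustration rather than the paper's implementation: the attention here uses identity projections (no learned query/key/value weights), a single head, and no image conditioning, and the function names are my own.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens):
    # tokens: (..., T, C). Identity projections keep the sketch short.
    scale = np.sqrt(tokens.shape[-1])
    scores = tokens @ np.swapaxes(tokens, -1, -2) / scale
    return softmax(scores) @ tokens

def local_attention(parts):
    # parts: (N, K, C). The part axis acts as a batch axis, so tokens
    # only attend to tokens of the same part.
    return self_attention(parts)

def global_attention(parts):
    # Flatten all parts into one sequence so every token sees every other.
    n, k, c = parts.shape
    flat = parts.reshape(1, n * k, c)
    return self_attention(flat).reshape(n, k, c)

def hierarchical_block(parts):
    parts = parts + local_attention(parts)    # within-part detail
    parts = parts + global_attention(parts)   # cross-part coherence
    return parts

rng = np.random.default_rng(0)
parts = rng.standard_normal((3, 4, 8))
print(hierarchical_block(parts).shape)
```

A quick way to see the difference between the two levels: changing the tokens of one part leaves the local attention output of every other part unchanged, but it does change their global attention output.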

🛠️ Technical Overview of PartCrafter

PartCrafter is a state-of-the-art generative model designed to synthesize structured 3D meshes from a single RGB image. It achieves this by leveraging advancements in latent diffusion models and transformer architectures.

Architecture and Training

PartCrafter builds upon a pretrained 3D mesh diffusion transformer (DiT) trained on whole objects, inheriting the pretrained weights, encoder, and decoder. It introduces two key innovations:

- Compositional Latent Space: Each 3D part is represented by a set of disentangled latent tokens.

- Hierarchical Attention Mechanism: Enables structured information flow both within individual parts and across all parts.

To support part-level supervision, PartCrafter curates a new dataset by mining part-level annotations from large-scale 3D object datasets. Experiments show that PartCrafter outperforms existing approaches in generating decomposable 3D meshes, including parts that are not directly visible in input images.

Figure: side-by-side results for each input image, comparing MIDI with PartCrafter.

📚 BibTeX 📚

To cite the PartCrafter paper:

@misc{lin2025partcrafter,

title={PartCrafter: Structured 3D Mesh Generation via Compositional Latent Diffusion Transformers},

author={Yuchen Lin and Chenguo Lin and Panwang Pan and Honglei Yan and Yiqiang Feng and Yadong Mu and Katerina Fragkiadaki},

year={2025},

eprint={2506.05573},

url={https://arxiv.org/abs/2506.05573}

}

🛠️ Applications of PartCrafter

PartCrafter’s ability to generate structured 3D meshes from a single RGB image opens up a multitude of possibilities across various industries. Below are some key applications:

1. 3D Printing

PartCrafter simplifies the process of creating 3D printable models by enabling the generation of complex, multi-part objects directly from images. This capability is particularly beneficial for rapid prototyping and custom manufacturing, where traditional modeling methods can be time-consuming and labor-intensive. By automating the creation of 3D meshes, PartCrafter accelerates the design-to-print workflow, allowing for quicker iterations and more efficient production cycles.

2. Augmented Reality (AR) and Virtual Reality (VR)

In AR and VR applications, realistic 3D models are essential for immersive experiences. PartCrafter facilitates the generation of detailed 3D assets from simple images, which can be integrated into AR and VR environments. This capability enhances the realism of virtual objects and environments, providing users with more engaging and interactive experiences.

3. Video Game Asset Creation

Game developers often require a vast array of 3D assets to populate virtual worlds. PartCrafter streamlines this process by generating diverse 3D models from single images, reducing the time and effort needed to create assets manually. This efficiency allows developers to focus more on gameplay and narrative elements, while still populating their games with rich, detailed environments.

4. E-commerce and Product Visualization

For online retailers, providing customers with detailed 3D views of products can enhance the shopping experience and increase conversion rates. PartCrafter enables the creation of 3D models from product images, allowing customers to interact with products virtually before making a purchase. This virtual try-before-you-buy approach can lead to higher customer satisfaction and reduced return rates.

5. Robotics and Autonomous Systems

In robotics, understanding and interacting with the physical environment is crucial. PartCrafter’s ability to generate 3D models from images aids in creating accurate representations of objects, which can be used for object recognition, manipulation planning, and navigation tasks. This capability enhances the efficiency and effectiveness of robotic systems in performing complex tasks.

🔍 PartCrafter vs. Leading 3D Mesh Generation Models

1. PartCrafter

- Architecture: Compositional Latent Diffusion Transformers

- Strengths:

  - Generates multiple semantically meaningful and geometrically distinct parts from a single RGB image.

  - Utilizes a compositional latent space with disentangled latent tokens for each 3D part.

  - Incorporates a hierarchical attention mechanism for high coordination in local details and global consistency.

- Ideal For: Creating complex 3D models with multiple parts from single images.

2. 3DGen

- Architecture: Triplane Latent Diffusion

- Strengths:

  - Combines a triplane VAE for latent representations with a conditional diffusion model for generation.

  - Achieves high-quality textured and untextured 3D meshes across diverse categories.

  - Operates efficiently on a single GPU.

- Ideal For: High-quality 3D mesh generation with texture details.

3. DiT-3D

- Architecture: Plain Diffusion Transformers

- Strengths:

  - Operates directly on voxelized point clouds using plain Transformers.

  - Incorporates 3D positional and patch embeddings for adaptive input aggregation.

  - Achieves state-of-the-art performance in high-fidelity and diverse 3D point cloud generation.

- Ideal For: High-quality 3D shape generation from point clouds.

4. OctFusion

- Architecture: Octree-based Diffusion Model

- Strengths:

  - Utilizes an octree-based latent representation combined with a multi-scale U-Net diffusion model.

  - Generates 3D shapes with arbitrary resolutions in seconds.

  - Supports generation conditioned on text prompts, sketches, or category labels.

- Ideal For: Fast and flexible 3D shape generation with high-quality outputs.

5. Magic3D

- Architecture: Two-stage Optimization Framework

- Strengths:

  - First obtains a coarse model using a low-resolution diffusion prior.

  - Further optimizes to a textured 3D mesh model with a high-resolution latent diffusion model.

  - Achieves high-resolution 3D mesh creation faster than previous methods.

- Ideal For: High-resolution text-to-3D content creation.

📊 Comparative Overview

| Model | Architecture | Strengths | Ideal For |

|---|---|---|---|

| PartCrafter | Compositional Latent Diffusion | Multi-part generation from single images | Complex 3D models with multiple parts |

| 3DGen | Triplane Latent Diffusion | High-quality textured mesh generation | Detailed 3D meshes across categories |

| DiT-3D | Plain Diffusion Transformers | High-fidelity point cloud generation | Diverse 3D shape generation from point clouds |

| OctFusion | Octree-based Diffusion Model | Fast, high-quality 3D shape generation | Efficient 3D shape generation with flexibility |

| Magic3D | Two-stage Optimization Framework | High-resolution 3D mesh creation | Text-to-3D content creation |

Conclusion: Each model offers unique strengths tailored to specific applications in 3D mesh generation. PartCrafter stands out for its ability to generate complex 3D models with multiple parts from a single image, making it ideal for applications requiring detailed and semantically rich 3D content.

🖥️ Hardware Requirements

Given the complexity of PartCrafter’s architecture, optimal performance is achieved with high-end hardware.

Recommended Specifications:

- CPU: Intel Core i9 (12th Gen or later) or AMD Ryzen 9 series

- GPU: NVIDIA GeForce RTX 4090 or equivalent with at least 24 GB VRAM

- RAM: 64 GB DDR5

- Storage: 1 TB NVMe SSD (PCIe Gen 4 or 5)

- Operating System: Windows 11 Pro (64-bit)

Minimum Specifications:

- CPU: Intel Core i7 (10th Gen or later) or AMD Ryzen 7 series

- GPU: NVIDIA GeForce RTX 3060 or equivalent with at least 12 GB VRAM

- RAM: 32 GB DDR4

- Storage: 512 GB NVMe SSD

- Operating System: Windows 10 (64-bit)

Note: While PartCrafter can run on systems with lower specifications, performance may be significantly reduced, especially during training or inference of large models.

🛠️ Software Requirements

Core Dependencies:

- Operating System: Windows 10/11 (64-bit)

- Python: 3.8 or higher

- CUDA Toolkit: Compatible version for your GPU (e.g., CUDA 11.3 for RTX 30 series)

- cuDNN: Version compatible with your CUDA Toolkit

- PyTorch: Latest stable version with GPU support

- Transformers Library: Hugging Face Transformers

- Additional Libraries: NumPy, SciPy, OpenCV, Matplotlib, etc.
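Before installing anything, it can help to see which of these dependencies are already present. The following is a small sketch using only the standard library; the module list mirrors the names above (with `cv2` as the import name for OpenCV) and is an assumption, since the repository's requirements.txt is the authoritative list.

```python
import importlib.util
import sys

# Import names for the libraries listed above (assumed, not taken
# from the project's requirements file).
REQUIRED_MODULES = ["torch", "transformers", "numpy", "scipy", "cv2", "matplotlib"]

def check_environment(modules=REQUIRED_MODULES, min_python=(3, 8)):
    # Report the Python version check plus, for each module, whether
    # it can be located without actually importing it.
    report = {"python_ok": sys.version_info >= min_python}
    for name in modules:
        report[name] = importlib.util.find_spec(name) is not None
    return report

if __name__ == "__main__":
    for key, ok in check_environment().items():
        print(f"{key:12s} {'ok' if ok else 'MISSING'}")
```

Using `find_spec` instead of a real import keeps the check fast and avoids loading heavy libraries such as PyTorch just to confirm they exist.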

Development Tools:

- Integrated Development Environment (IDE): Visual Studio Code or PyCharm

- Version Control: Git

- Containerization (Optional): Docker for environment consistency

✅ Best Practices for Optimal Performance

- GPU Utilization: Ensure that your GPU drivers are up to date to leverage the full capabilities of your hardware.

- Power Supply: Use a high-wattage, reliable PSU (e.g., 1000W or higher) to support power-hungry components.

- Cooling Solutions: Implement efficient cooling systems (e.g., liquid cooling) to maintain optimal operating temperatures during intensive tasks.

- Storage Configuration: Consider using multiple SSDs—one for the operating system and applications, and another dedicated to data storage—to enhance read/write speeds.

- Regular Maintenance: Perform regular system maintenance, including cleaning dust from components and monitoring system performance, to ensure longevity and reliability.

🛠️ PartCrafter Installation Guide

1. Clone the Repository

Begin by cloning the official PartCrafter repository:

git clone https://github.com/wgsxm/PartCrafter.git

cd PartCrafter

2. Set Up a Python Virtual Environment

It’s recommended to use a virtual environment to manage dependencies:

python -m venv partcrafter-env

source partcrafter-env/bin/activate # On Windows, use `partcrafter-env\Scripts\activate`

3. Install Required Dependencies

Ensure you have the necessary dependencies:

pip install -r requirements.txt

4. Download Pre-trained Models

PartCrafter requires pre-trained models to function correctly. Download them from the official repository or the provided links and place them in the appropriate directory, typically ./models/.

5. Run PartCrafter

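After setting up, you can run PartCrafter with your desired input. The script name and flags below are illustrative; check the repository README for the actual inference entry point and its arguments: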

python generate_mesh.py --input your_image.png --output output.obj

Replace your_image.png with your input image and specify the desired output file name.
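Once a mesh file exists, a quick sanity check is to count its vertices, faces, and named groups. The sketch below assumes the output is a plain-text Wavefront OBJ, as in the command above; if your build exports another format such as GLB, this parser will not apply. The function name `summarize_obj` and the tiny demo mesh are my own.

```python
import os
import tempfile

def summarize_obj(path):
    # Count geometric vertices ("v"), faces ("f"), and object/group
    # declarations ("o" or "g") in a Wavefront OBJ file.
    counts = {"vertices": 0, "faces": 0, "groups": 0}
    with open(path, "r", encoding="utf-8") as handle:
        for line in handle:
            if line.startswith("v "):
                counts["vertices"] += 1
            elif line.startswith("f "):
                counts["faces"] += 1
            elif line.startswith(("o ", "g ")):
                counts["groups"] += 1
    return counts

# Demo on a hand-written OBJ with two named parts, one triangle each.
sample = (
    "o part_0\nv 0 0 0\nv 1 0 0\nv 0 1 0\nf 1 2 3\n"
    "o part_1\nv 0 0 1\nv 1 0 1\nv 0 1 1\nf 4 5 6\n"
)
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.obj")
    with open(demo_path, "w", encoding="utf-8") as handle:
        handle.write(sample)
    demo_counts = summarize_obj(demo_path)
print(demo_counts)  # {'vertices': 6, 'faces': 2, 'groups': 2}
```

For a multi-part result, the group count is a rough indicator of whether the parts survived export as separate objects.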

🧩 Troubleshooting Tips

If you encounter issues during installation or usage, consider the following:

1. CUDA Compatibility

Ensure that your CUDA version is compatible with your GPU and the installed PyTorch version. Mismatched versions can lead to runtime errors.
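A quick way to see what PyTorch itself reports is sketched below. The helper name `describe_cuda_setup` is my own; the calls it uses (`torch.version.cuda`, `torch.cuda.is_available()`, `torch.cuda.get_device_name()`) are standard PyTorch, and the function falls back gracefully when PyTorch is not installed.

```python
def describe_cuda_setup():
    # Collect the facts that matter when debugging a CUDA mismatch.
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}
    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version PyTorch was built against; None for CPU-only builds.
        "built_with_cuda": torch.version.cuda,
        # True only if a usable GPU and driver are visible at runtime.
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
    return info

if __name__ == "__main__":
    for key, value in describe_cuda_setup().items():
        print(f"{key}: {value}")
```

If `built_with_cuda` is `None`, a CPU-only PyTorch wheel is installed; if it has a value but `cuda_available` is `False`, the driver or GPU is the more likely culprit.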

2. Missing Dependencies

If you receive errors about missing modules, verify that all dependencies are installed:

pip install -r requirements.txt

Additionally, check if any system-specific dependencies need to be installed.

3. Model Download Issues

If PartCrafter cannot automatically download pre-trained models:

- Manually download the models from the official source.

- Place them in the ./models/ directory.

- Ensure the model files are not corrupted and are in the correct format.
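One common way to check that a downloaded file is not corrupted is to compare its SHA-256 hash with a published value. The sketch below assumes such a reference hash is available from wherever you obtained the weights, which the original instructions do not state; the function names are my own.

```python
import hashlib
import os
import tempfile

def sha256_of_file(path, chunk_size=1 << 20):
    # Hash the file in chunks so large checkpoints do not need to
    # fit in memory all at once.
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_checkpoint(path, expected_hex):
    # expected_hex is a reference hash you must obtain separately.
    return sha256_of_file(path) == expected_hex.strip().lower()

# Demo on a small temporary file standing in for a model checkpoint.
payload = b"pretend these bytes are model weights"
with tempfile.TemporaryDirectory() as tmp:
    demo_file = os.path.join(tmp, "weights.bin")
    with open(demo_file, "wb") as handle:
        handle.write(payload)
    demo_ok = verify_checkpoint(demo_file, hashlib.sha256(payload).hexdigest())
    demo_bad = verify_checkpoint(demo_file, "0" * 64)
print(demo_ok, demo_bad)  # True False
```

If no reference hash is published, comparing the file size against the size shown on the download page is a weaker but still useful check.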

4. Performance Optimization

For optimal performance:

- Use a machine with a high-end NVIDIA GPU (e.g., RTX 3090 or higher).

- Ensure that your system has sufficient RAM (at least 32 GB, matching the minimum specification above).

- Close unnecessary applications to free up system resources.

🔧 Additional Resources

For more detailed information and updates:

- Official PartCrafter GitHub Repository: https://github.com/wgsxm/PartCrafter

- Research Paper: PartCrafter: Structured 3D Mesh Generation via Compositional Latent Diffusion Transformers (https://arxiv.org/abs/2506.05573)

🧩 Conclusion: The Future of 3D Mesh Generation with PartCrafter

PartCrafter represents a significant leap forward in the realm of 3D mesh generation. By introducing a unified, compositional generation architecture, it overcomes the limitations of traditional methods that often rely on monolithic shapes or two-stage pipelines involving image segmentation followed by reconstruction. Instead, PartCrafter directly synthesizes semantically meaningful and geometrically distinct 3D parts from a single RGB image, enabling the creation of complex multi-object scenes without the need for pre-segmented inputs.

Central to PartCrafter’s innovation is its compositional latent space, where each 3D part is represented by a set of disentangled latent tokens. The hierarchical attention mechanism complements this design by enabling structured information flow both within individual parts and across all parts, ensuring global coherence while preserving part-level detail during generation.

The curated dataset, mined from large-scale 3D object datasets, provides part-level supervision, which is crucial for training models that can generate decomposable 3D meshes. Experiments have shown that PartCrafter outperforms existing approaches in generating decomposable 3D meshes, including parts that are not directly visible in input images. This demonstrates the strength of part-aware generative priors for 3D understanding and synthesis.

In comparison to other models, such as MeshCraft and StructLDM, which focus on specific aspects like mesh refinement and human generation respectively, PartCrafter offers a more generalized approach to 3D mesh generation. While MeshCraft emphasizes high-fidelity mesh generation at faster speeds, and StructLDM focuses on structured latent spaces for 3D human modeling, PartCrafter provides a unified framework that can generate complex 3D scenes from a single image input, making it a versatile tool for various applications.

Looking ahead, the advancements introduced by PartCrafter pave the way for more sophisticated 3D generation models. The integration of compositional latent spaces and hierarchical attention mechanisms sets a new standard for how 3D meshes can be generated, offering greater flexibility and detail. As research in this field continues to evolve, we can anticipate even more innovative approaches that will further enhance the capabilities of 3D mesh generation models.

🔮 Future Prospects of PartCrafter

PartCrafter has set a new benchmark in 3D mesh generation by introducing a unified, compositional generation architecture that synthesizes semantically meaningful and geometrically distinct 3D meshes from a single RGB image. Its innovative approach, leveraging a compositional latent space and hierarchical attention mechanism, has demonstrated superior performance in generating decomposable 3D meshes, including parts not directly visible in input images.

Looking ahead, several avenues could further enhance PartCrafter’s capabilities:

- Integration with Text-to-3D Models: Combining PartCrafter’s structural generation with text-to-3D models could enable the creation of 3D meshes based on textual descriptions, expanding its applicability in fields like virtual reality and gaming.

- Real-Time Generation: Optimizing PartCrafter for real-time 3D mesh generation could make it more practical for interactive applications, such as virtual prototyping and augmented reality.

- Cross-Domain Adaptability: Enhancing PartCrafter’s ability to generate 3D meshes across diverse domains, including fashion and architecture, could broaden its utility in various industries.

While no benchmark numbers exist yet for these possible extensions, PartCrafter’s approach positions it as a strong foundation for future work in 3D mesh generation.

📚 References

- Lin, Y., Lin, C., Pan, P., Yan, H., Feng, Y., Mu, Y., & Fragkiadaki, K. (2025). PartCrafter: Structured 3D Mesh Generation via Compositional Latent Diffusion Transformers. arXiv. https://arxiv.org/abs/2506.05573

- He, X., Chen, J., Huang, D., Liu, Z., Huang, X., Ouyang, W., Yuan, C., & Li, Y. (2025). MeshCraft: Exploring Efficient and Controllable Mesh Generation with Flow-based DiTs. arXiv. https://arxiv.org/abs/2503.23022

- Gao, D., Siddiqui, Y., Li, L., & Dai, A. (2024). MeshArt: Generating Articulated Meshes with Structure-guided Transformers. arXiv. https://arxiv.org/abs/2412.11596

- Qian, G., Mai, J., Hamdi, A., Ren, J., Siarohin, A., Li, B., Lee, H.-Y., Skorokhodov, I., Wonka, P., Tulyakov, S., & Ghanem, B. (2023). Magic123: One Image to High-Quality 3D Object Generation Using Both 2D and 3D Diffusion Priors. arXiv. https://arxiv.org/abs/2306.17843

- Li, W., Liu, J., Chen, R., Liang, Y., Chen, X., Tan, P., & Long, X. (2024). CraftsMan: High-fidelity Mesh Generation with 3D Native Generation and Interactive Geometry Refiner. arXiv. https://arxiv.org/abs/2405.14979

- Mihaylova, T., & Martins, A. F. T. (2019). Scheduled Sampling for Transformers. arXiv. https://arxiv.org/abs/1903.07412

- Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., & Ng, R. (2020). NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. arXiv. https://arxiv.org/abs/2003.08934

- Mo, K., Guerrero, P., Yi, L., Su, H., Wonka, P., Mitra, N., & Guibas, L. (2019). StructureNet: Hierarchical Graph Networks for 3D Shape Generation. ACM Transactions on Graphics. https://doi.org/10.1145/3306346.3323025

- Nash, C., Ganin, Y., Eslami, S. M. A., & Battaglia, P. W. (2020). PolyGen: An Autoregressive Generative Model of 3D Meshes. arXiv. https://arxiv.org/abs/2003.11503

- Mittal, P., Cheng, Y.-C., Singh, M., & Tulsiani, S. (2022). AutoSDF: Shape Priors for 3D Completion, Reconstruction and Generation. arXiv. https://arxiv.org/abs/2203.06189