🎭 Pixel3DMM: Redefining 3D Face Reconstruction from a Single Image with Smart Screen-Space Priors

In the realm of computer vision, reconstructing a 3D face model from a single 2D image has long been a formidable challenge. Traditional methods often struggle with issues like occlusions, varying lighting conditions, and diverse facial expressions. However, a groundbreaking approach known as Pixel3DMM has emerged, offering a significant leap forward in this domain.

🔍 What is Pixel3DMM?

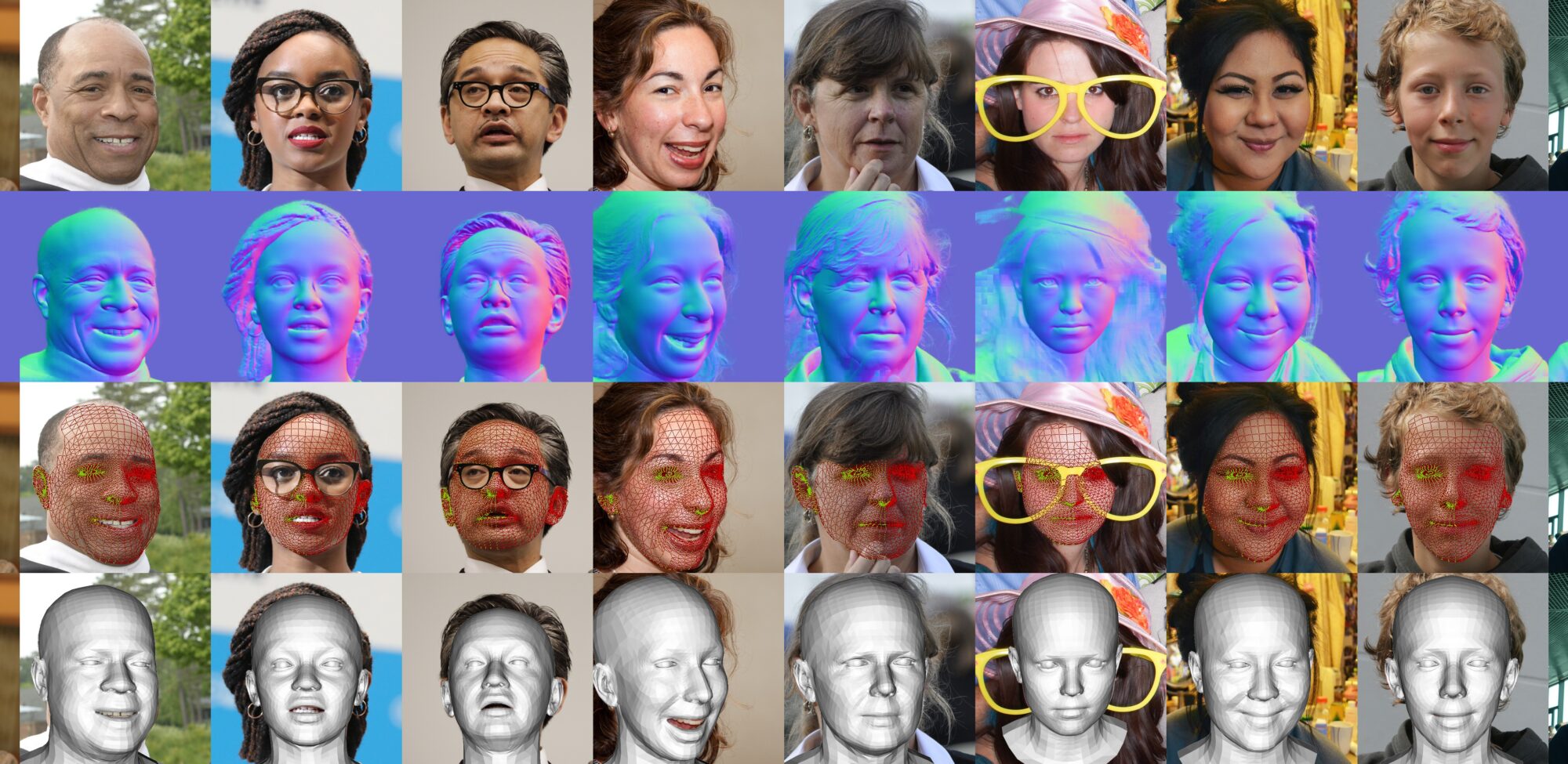

Pixel3DMM stands for Pixel-aligned 3D Morphable Model, a novel framework introduced by researchers from the Technical University of Munich, Synthesia, and University College London. This method leverages advanced vision transformers to predict per-pixel geometric cues—specifically, surface normals and UV coordinates—from a single RGB image. These predictions serve as precise constraints for optimizing a 3D Morphable Face Model (3DMM), enabling the generation of highly accurate 3D face reconstructions

🧩 Core Innovations

- Vision Transformer Backbone: Pixel3DMM utilizes a fine-tuned DINO ViT (Vision Transformer) as its backbone. This architecture excels at capturing intricate spatial relationships within images, making it ideal for tasks requiring detailed geometric understanding.

- Per-Pixel Geometric Prediction: The model introduces specialized prediction heads that output surface normals and UV coordinates for each pixel. These detailed cues are crucial for accurately mapping 2D images to 3D structures.

- FLAME Model Optimization: At the core of the reconstruction process is the FLAME (Faces Learned with an Articulated Model and Expressions) model. Pixel3DMM employs a fitting optimization technique that adjusts FLAME parameters based on the predicted geometric cues, resulting in a precise 3D face model .

📊 Benchmarking and Performance

To evaluate the efficacy of Pixel3DMM, the researchers introduced a comprehensive benchmark featuring a diverse set of facial expressions, viewing angles, and ethnicities. Notably, this benchmark is the first to assess both posed and neutral facial geometries. The results were compelling: Pixel3DMM outperformed existing state-of-the-art methods by over 15% in geometric accuracy, particularly in handling posed facial expressions .

🌐 Real-World Applications

The implications of Pixel3DMM extend far beyond academic research. Its ability to generate accurate 3D face models from a single image opens up numerous possibilities in various fields:

- Virtual Reality (VR) and Augmented Reality (AR): Enhancing user experiences with realistic avatars and interactions.

- Digital Entertainment: Creating lifelike characters for films and video games.link.springer.com

- Healthcare: Assisting in facial surgery planning and rehabilitation by providing detailed 3D models.

- Security: Improving biometric authentication systems through accurate facial recognition.

🧠 Pixel3DMM vs. the Competition: A Comparative Overview

In the realm of 3D face reconstruction from a single image, several models have emerged, each with its unique approach and strengths. Below is a detailed comparison highlighting how Pixel3DMM stands out among its peers:

| Model | Input | Key Features | Strengths | Weaknesses |

|---|---|---|---|---|

| Pixel3DMM | Single RGB Image | Utilizes DINO ViT for per-pixel surface normal and UV-coordinate prediction. Optimizes FLAME model using these cues. | Achieves over 15% improvement in geometric accuracy for posed facial expressions. Handles diverse facial expressions and ethnicities effectively. | Requires substantial computational resources for training and inference. |

| DECA | Single RGB Image | Employs a deep convolutional network to regress 3DMM parameters directly from images. | High accuracy in neutral face reconstruction. Efficient for real-time applications. | Struggles with posed expressions and occlusions. |

| Deep3DFace | Single RGB Image | Combines CNNs with 3DMM fitting for face shape and texture estimation. | Robust to various poses and lighting conditions. | May produce less detailed textures compared to other models. |

| 3DDFA-V2 | Single RGB Image | Leverages deep learning for dense 3D face alignment. | Fast and efficient, suitable for real-time applications. | Limited ability to handle extreme facial expressions. |

| AvatarMe | Single RGB Image | Generates high-resolution 3D faces with diffuse and specular components. | Produces photorealistic 3D faces, bridging the uncanny valley. | Requires high-resolution input images and significant computational power. |

🌟 Advantages of Pixel3DMM Over Other 3D Face Reconstruction Models

Pixel3DMM introduces several innovations that enhance the accuracy and versatility of 3D face reconstruction from a single image. Here’s how it compares to other models:

1. Enhanced Geometric Accuracy

Pixel3DMM outperforms existing models by over 15% in geometric accuracy, particularly for posed facial expressions. This improvement is achieved through its unique approach of predicting per-pixel surface normals and UV coordinates, which provide detailed geometric cues for FLAME model optimization.

2. Comprehensive Benchmarking

The model is evaluated on a new benchmark that includes a diverse set of facial expressions, viewing angles, and ethnicities. This comprehensive evaluation ensures that Pixel3DMM performs well across a wide range of real-world scenarios, unlike some models that may excel only under specific conditions.

3. Robustness to Diverse Facial Expressions

While models like DECA and Deep3DFace perform well with neutral faces, Pixel3DMM demonstrates superior performance with both neutral and posed facial expressions. This robustness is crucial for applications requiring accurate representation of dynamic facial movements.

4. Advanced Training Methodology

Pixel3DMM employs a training strategy that includes data augmentation techniques such as varying lighting conditions and material parameters. This approach enhances the model’s ability to generalize across different environments, improving its performance in real-world applications.

5. State-of-the-Art Surface Normal Estimation

The model achieves state-of-the-art performance in surface normal estimation, which is critical for accurate 3D face reconstruction. This capability allows for more precise fitting of the FLAME model to the input image.

🖥️ Software and Hardware Requirements for Pixel3DMM

Minimum System Requirements

| Component | Specification |

|---|---|

| Operating System | Windows 10 or 11 (64-bit), macOS 11 (Big Sur) or newer |

| Processor (CPU) | Intel Core i5 (4 cores) or AMD Ryzen 5 (4 cores) or better |

| Memory (RAM) | 16 GB or more |

| Graphics (GPU) | NVIDIA GeForce GTX 1060 / RTX 2060 or AMD equivalent supporting CUDA/OpenCL |

| Storage | SSD with at least 100 GB free space |

| Python Version | Python 3.8 or newer |

| CUDA Version | CUDA 11.3 or newer (for NVIDIA GPUs) |

| Dependencies | PyTorch 1.10+, NumPy, OpenCV, DINO ViT model files |

Recommended System Specifications

| Component | Specification |

|---|---|

| Operating System | Windows 10 or 11 (64-bit), macOS 13 (Ventura) or newer |

| Processor (CPU) | Intel Core i7 or i9 (8 cores) or AMD Ryzen 7 or 9 (8 cores) or better |

| Memory (RAM) | 32 GB or more |

| Graphics (GPU) | NVIDIA GeForce RTX 3070 or better with CUDA support |

| Storage | NVMe SSD with at least 250 GB free space |

| Python Version | Python 3.9 or newer |

| CUDA Version | CUDA 11.6 or newer (for NVIDIA GPUs) |

| Dependencies | PyTorch 1.12+, NumPy, OpenCV, DINO ViT model files |

Additional Notes

- GPU Acceleration: For optimal performance, especially during training and inference phases, a CUDA-capable NVIDIA GPU is highly recommended. AMD GPUs may not be compatible with all CUDA-dependent operations.

- Python Environment: It’s advisable to use a virtual environment (e.g.,

venvorconda) to manage dependencies and avoid conflicts. - Model Files: Ensure that the DINO ViT model files are correctly downloaded and placed in the appropriate directories as per the Pixel3DMM documentation.

- Operating System Compatibility: While the software is compatible with both Windows and macOS, certain features may perform better on Windows due to more mature GPU support.

🛠️ Installation Steps for Pixel3DMM

1. Clone the Repository

Begin by cloning the official Pixel3DMM repository from GitHub:

git clone https://github.com/SimonGiebenhain/pixel3dmm.git

cd pixel3dmm

2. Set Up a Conda Environment

Create and activate a new Conda environment to manage dependencies:

conda create -n pixel3dmm python=3.8

conda activate pixel3dmm

3. Install Dependencies

Install the required Python packages using pip:

pip install -r requirements.txt

Alternatively, if you prefer using conda for package management, you can use the provided environment.yml file:

conda env create -f environment.yml

conda activate pixel3dmm

4. Download Pre-trained Models

For optimal performance, download the pre-trained DINO ViT model files. These models are essential for the per-pixel geometric cue predictions. You can obtain them from the official repository or the associated project page.

5. Run the Example Script

To test the installation and see the model in action, run the provided example script:

python scripts/example.py

Ensure that you have an input image ready for processing.

⚠️ Troubleshooting Tips

- CUDA Compatibility: Ensure that your system has a CUDA-compatible NVIDIA GPU and the appropriate CUDA toolkit installed for GPU acceleration.

- Python Version: The recommended Python version is 3.8. Using a different version may lead to compatibility issues.

- Dependency Conflicts: If you encounter issues with package versions, consider creating a fresh Conda environment and reinstalling the dependencies.

- Model Files: Ensure that the DINO ViT model files are correctly placed in the specified directories as per the repository’s documentation.

For more detailed information and updates, refer to the official Pixel3DMM repository: https://github.com/SimonGiebenhain/pixel3dmm

🧠 Conclusion

Pixel3DMM represents a significant advancement in the field of 3D face reconstruction from a single RGB image. By leveraging the DINO Vision Transformer (ViT) to predict per-pixel surface normals and UV coordinates, it provides rich geometric cues that enhance the optimization of the FLAME 3D Morphable Model (3DMM). This approach leads to over a 15% improvement in geometric accuracy, particularly for posed facial expressions, compared to existing methods.

The introduction of a new benchmark dataset, encompassing a diverse range of facial expressions, viewing angles, and ethnicities, sets a new standard for evaluating single-image 3D face reconstruction models. This comprehensive evaluation ensures that Pixel3DMM performs robustly across various real-world scenarios.

In summary, Pixel3DMM not only advances the state-of-the-art in 3D face reconstruction but also provides a versatile and efficient framework applicable to a wide array of applications, from virtual reality and gaming to biometric authentication and digital avatars.

📚 Primary Reference

- Giebenhain, S., Kirschstein, T., Rünz, M., Agapito, L., & Nießner, M. (2025). Pixel3DMM: Versatile Screen-Space Priors for Single-Image 3D Face Reconstruction. arXiv.

🔗 Additional Resources

- Giebenhain, S. (2025). Pixel3DMM: Versatile Screen-Space Priors for Single-Image 3D Face Reconstruction. Official Project Page.

- TheMoonlight.io (2025). Literature Review: Pixel3DMM.

🧠 Related Works

- Yu, A., Ye, V., Tancik, M., & Kanazawa, A. (2020). pixelNeRF: Neural Radiance Fields from One or Few Images. arXiv.

- Zheng, Z., Yu, T., Wei, Y., Dai, Q., & Liu, Y. (2019). DeepHuman: 3D Human Reconstruction from a Single Image. arXiv.

- Jiang, L., Zhang, J., Deng, B., Li, H., & Liu, L. (2017). 3D Face Reconstruction with Geometry Details from a Single Image. arXiv.