🧠🖼️ Reimagining Image Editing: How ByteDance’s BAGEL Uses AI Reasoning to Transform Photos

Introduction:

In the ever-evolving world of AI, image editing has leaped far beyond filters and touch-ups. Enter BAGEL, ByteDance’s open-source multimodal AI model that doesn’t just edit images but also understands them. Unlike traditional tools, BAGEL brings reasoning into the creative process, enabling edits that align with context, intent, and visual logic. Whether you want to swap out skies, adjust perspectives, or modify scenes based on text prompts, BAGEL’s fusion of vision and language makes it feel less like using a tool and more like collaborating with a smart assistant. In this post, we’ll look at how BAGEL works, what sets it apart, and what it means for the future of intelligent image editing.

🚀 What is BAGEL?

BAGEL is ByteDance’s latest step forward in AI-powered creativity. It is not just another image editing tool but a multimodal AI model that combines visual understanding with natural language reasoning. In simpler terms, BAGEL can look at an image, understand its content, take your instructions in plain language, and then make context-aware changes, much as a human editor would.

What makes BAGEL stand out is its ability to go beyond surface-level commands. Traditional AI tools might respond to prompts like “make the sky blue” or “add a cat,” but BAGEL is designed to reason about the intent behind an edit and adjust its output accordingly. This means it can handle complex, layered instructions like:

- “Change the lighting to make it feel like sunset.”

- “Remove the man in the background but keep the shadow.”

- “Make the scene look like it’s in a post-apocalyptic future.”

At its core, BAGEL represents the convergence of computer vision and language models, allowing it to reason about spatial, aesthetic, and even emotional aspects of an image. It doesn’t just replay editing steps; it makes decisions about how to carry them out.

This positions BAGEL as a new kind of AI assistant: one that understands both what you see and what you mean.

🧠🔍 How BAGEL Works: Vision Meets Language

At the heart of BAGEL lies an architecture that integrates visual perception with natural language understanding. Unlike traditional pipelines that handle images and text with separate models, BAGEL uses a unified, decoder-only framework trained on large multimodal datasets of text, images, video, and web data. This design enables BAGEL to perform complex multimodal reasoning tasks such as free-form image manipulation, future frame prediction, 3D manipulation, and world navigation.

Key Components of BEGEL’s Architecture:

- Multimodal Pretraining: BAGEL is pretrained on trillions of tokens curated from large-scale interleaved text, image, video, and web data. This extensive training lets the model understand and generate content across modalities, which is what makes it adept at interpreting and editing images from textual descriptions.

- Unified Decoder-Only Model: The model uses a decoder-only architecture that processes visual and textual inputs together in one sequence, giving it a more cohesive understanding of content and context. This unified approach strengthens BAGEL’s ability to handle tasks that require reasoning across modalities.

- Emerging Multimodal Reasoning Capabilities: Beyond basic understanding and generation, BAGEL exhibits more advanced multimodal reasoning, including free-form image manipulation, future frame prediction, 3D manipulation, and world navigation.
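To make the “unified decoder-only” idea more concrete, the toy sketch below places image tokens and text tokens in one sequence and builds a standard causal attention mask over it, so every later token can attend to everything before it regardless of modality. The `Token` type, the token values, and the purely causal mask are illustrative assumptions; this is not BAGEL’s actual tokenizer or attention layout, which may treat image tokens differently.

```python
from dataclasses import dataclass


@dataclass
class Token:
    modality: str  # "text" or "image"
    value: int     # hypothetical token id (text) or patch index (image)


def interleave(segments):
    """Flatten (modality, values) segments into a single token sequence."""
    seq = []
    for modality, values in segments:
        seq.extend(Token(modality, v) for v in values)
    return seq


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


# Toy input: 4 image-patch tokens followed by a 3-token text instruction.
segments = [("image", [0, 1, 2, 3]), ("text", [101, 102, 103])]
seq = interleave(segments)
mask = causal_mask(len(seq))

# The final instruction token can attend to every image patch and every earlier word,
# which is what lets a single decoder relate the instruction to the picture.
last = len(seq) - 1
visible = [seq[j].modality for j in range(len(seq)) if mask[last][j]]
print(visible)  # ['image', 'image', 'image', 'image', 'text', 'text', 'text']
```

Keeping both modalities in one sequence means no separate fusion module is needed in this toy setup: cross-modal context comes for free from ordinary attention.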

How It Powers Image Editing:

When you give BAGEL an image and a text prompt, the model processes both inputs together. It interprets the content of the image and the intent behind the text, which lets it make edits that match your instructions. For example, if you ask BAGEL to “replace the cloudy sky with a sunset,” it understands the visual elements involved and the desired outcome, producing an edit that blends with the rest of the scene.

This integrated approach marks a significant advancement in AI-driven image editing, moving beyond simple pixel manipulation to a more intuitive and reasoning-based process.

✨ Key Features That Set BAGEL Apart

BAGEL isn’t just another AI image editor; it’s a leap forward in how machines understand and transform visual content. Here’s what makes BAGEL stand out:

1. Natural Language Understanding

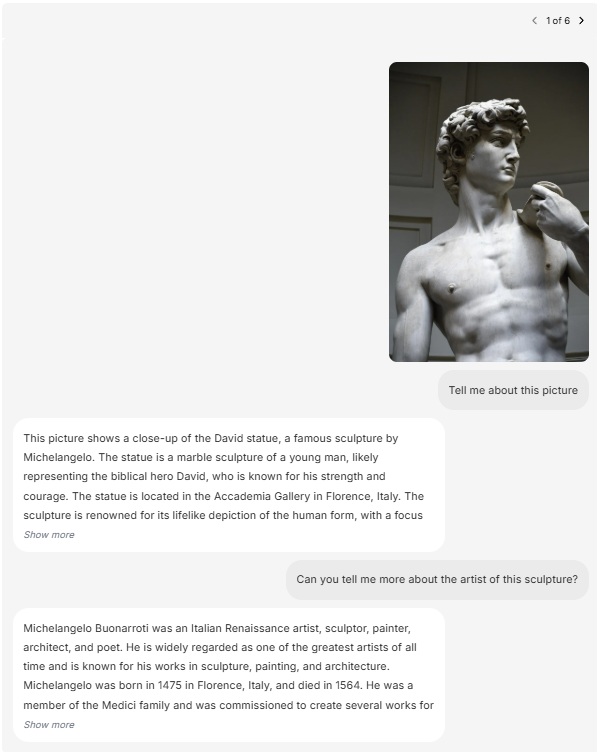

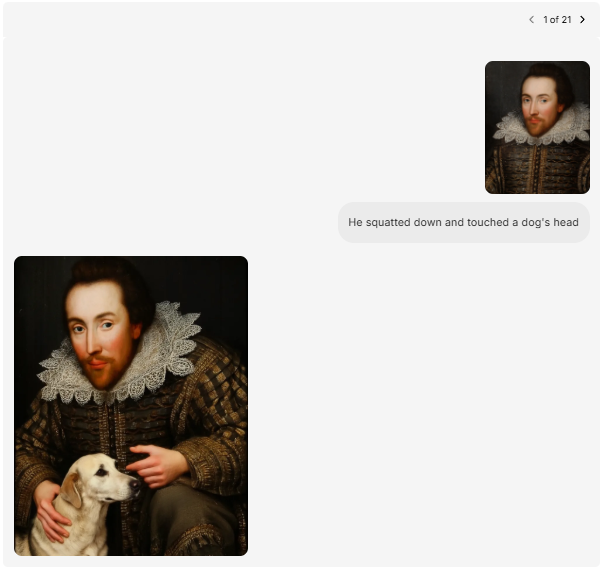

BAGEL excels at interpreting complex prompts in multiple languages, including idioms and specialized terminology. Whether you say “replace the watermelon with grapes” or “turn the sky into a sunset,” BAGEL grasps the intent and delivers accordingly.

2. Context-Aware Editing

Unlike traditional tools that require manual selection, BAGEL can identify and modify specific elements within an image on its own. For instance, instructing it to “remove glass cracks” produces targeted edits without affecting the rest of the image.

3. Multi-Round Editing

BAGEL supports iterative editing, letting users make several adjustments in a row while maintaining image quality. This is particularly useful for complex edits that need refinement.

4. High-Quality Output

The model aims to blend edits seamlessly with the original image, preserving detail and avoiding unnatural artifacts, so results look realistic and match what the user asked for.

5. Versatile Editing Capabilities

From changing backgrounds and adding or removing objects to applying style transfer, BAGEL offers a wide range of editing options. Because edits are driven by simple text commands, it is accessible to both professionals and casual users.
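One way to sanity-check claims like “without affecting the rest of the image” on your own results is to measure how much an edit changed the pixels outside the region you meant to edit. The sketch below does this for small grayscale images stored as nested lists; the function name, the data format, and the hand-drawn mask are assumptions for illustration, and a real check would load actual images with a library such as Pillow.

```python
def outside_region_change(original, edited, mask):
    """Mean absolute pixel difference over pixels the edit was NOT meant to touch.

    original, edited: 2D lists of grayscale values (0-255) with the same shape.
    mask: 2D list of bools, True where the edit was intended to happen.
    """
    if not (len(original) == len(edited) == len(mask)):
        raise ValueError("original, edited, and mask must have the same number of rows")
    total, count = 0, 0
    for row_o, row_e, row_m in zip(original, edited, mask):
        if not (len(row_o) == len(row_e) == len(row_m)):
            raise ValueError("original, edited, and mask must have the same row lengths")
        for o, e, m in zip(row_o, row_e, row_m):
            if not m:  # only count pixels outside the intended edit region
                total += abs(o - e)
                count += 1
    return total / count if count else 0.0


# Toy 3x3 example: a "crack" at the center pixel is repaired (0 -> 200),
# but one pixel outside the intended region also shifts slightly (100 -> 109).
original = [[100, 100, 100], [100, 0, 100], [100, 100, 100]]
edited = [[100, 100, 100], [100, 200, 100], [100, 100, 109]]
mask = [[False, False, False], [False, True, False], [False, False, False]]

print(outside_region_change(original, edited, mask))  # 1.125
```

For multi-round editing, the same check can be run after each round against the original image to see whether unrelated areas drift over successive edits.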

🖌️ Use Cases: From Creators to Developers

BAGEL’s capabilities open up a wide range of possibilities for different users:

🎨 For Creators and Designers

- Enhanced Creativity: BAGEL lets creators turn ideas into images simply by describing them in natural language. Whether it’s altering an image’s background or adding new elements, BAGEL makes the creative process more intuitive and efficient.

- Rapid Prototyping: Designers can quickly generate and modify visuals, enabling faster iteration and refinement of concepts.

👩‍💻 For Developers and Researchers

- Integration into Applications: Developers can integrate BAGEL into their own applications, giving their users AI-powered image editing capabilities.

- Research and Experimentation: Researchers can use BAGEL to explore new AI techniques and applications in image processing and multimodal understanding.

🌐 Open-Source Community

- Collaborative Development: As an open-source project, BAGEL invites contributions from the global developer community, which can speed up innovation and the development of new features.

- Customization and Extension: Users can modify and extend BAGEL’s capabilities to suit their specific needs, fostering a diverse ecosystem of applications.

🚀 How to Access or Try BAGEL

ByteDance’s BAGEL is an open-source multimodal AI model designed for advanced image editing and understanding. Because its code and model weights are publicly released, developers, researchers, and technically minded creators can try its features by running it themselves.

🖥️ Run BAGEL Locally

If you have some technical experience, you can run BAGEL on your own machine, which gives you full control over how the model runs and how it is customized. The code and setup instructions are available in the official GitHub repository (https://github.com/bytedance-seed/BAGEL), which also includes a Gradio web demo (app.py) for experimentation.

🧱 Requirements and Setup

To set things up, you will need:

- Microsoft C++ Build Tools, with the C++ development toolkit selected during installation (available from Microsoft’s website).

- Anaconda (restart your computer after installing it).

- A folder for the model, for example AI_file.

Then open CMD (or the Anaconda Prompt), navigate into that folder with cd AI_file, and run the following commands:

```shell
git clone https://github.com/bytedance-seed/BAGEL.git
cd BAGEL
conda create -n bagel python=3.10 -y
conda activate bagel
pip install -r requirements.txt
```

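- Next, download the pretrained model weights by running the following in Python with the bagel environment active (replace /path/to/save with a directory of your choice):

```python
from huggingface_hub import snapshot_download

save_dir = "/path/to/save/BAGEL-7B-MoT"  # where the weights will be stored
repo_id = "ByteDance-Seed/BAGEL-7B-MoT"
cache_dir = save_dir + "/cache"

# Download only config, weight, code, and text files from the model repository.
snapshot_download(
    cache_dir=cache_dir,
    local_dir=save_dir,
    repo_id=repo_id,
    local_dir_use_symlinks=False,
    resume_download=True,
    allow_patterns=["*.json", "*.safetensors", "*.bin", "*.py", "*.md", "*.txt"],
)
```

- Alternatively, download the weights manually from the ByteDance-Seed/BAGEL-7B-MoT model page on Hugging Face.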

- Finally, from inside the BAGEL folder, install Gradio and launch the demo:

```shell
pip install gradio
python app.py
```
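If app.py cannot find the model, a quick check like the one below can confirm that the download actually finished. It assumes only what the `allow_patterns` list in the download step implies: that `.safetensors` weights and `.json` config files end up directly inside `save_dir`. The `check_weights` helper is hypothetical and not part of the BAGEL repository.

```python
from pathlib import Path


def check_weights(save_dir):
    """Return a list of problems found in a downloaded model directory (empty if none)."""
    root = Path(save_dir)
    if not root.is_dir():
        return [f"{root} is not an existing directory"]
    problems = []
    # Only the top level of save_dir is checked; files in subfolders are ignored.
    if not any(root.glob("*.safetensors")):
        problems.append("no .safetensors weight files found")
    if not any(root.glob("*.json")):
        problems.append("no .json config files found")
    return problems


# Use the same save_dir you passed to snapshot_download.
issues = check_weights("/path/to/save/BAGEL-7B-MoT")
print("OK" if not issues else "\n".join(issues))
```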

🔮 The Future of Reasoning-Based Image AI

The emergence of BAGEL marks a significant milestone in the evolution of AI-driven image editing. As AI models move from pattern recognition toward genuine reasoning, their potential applications expand beyond traditional boundaries.

🧠 Advancements in AI Reasoning

ByteDance’s commitment to AI reasoning is also evident in models like Seed-Thinking-v1.5. This model uses a Mixture-of-Experts (MoE) architecture that activates only about 20 billion of its 200 billion parameters for each token, making it both efficient and powerful. Seed-Thinking-v1.5 has shown strong results on several benchmarks, including an 86.7% score on AIME 2024, surpassing earlier models such as DeepSeek R1.
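The idea of “activating only a subset of parameters” can be illustrated with a toy top-k router: a gate scores every expert, but only the k highest-scoring experts are actually evaluated, and their outputs are mixed using the renormalized gate weights. This is a generic sketch of top-k Mixture-of-Experts gating with hand-picked router scores and trivial experts, not Seed-Thinking-v1.5’s actual routing code.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_logits, experts, k=2):
    """Toy top-k Mixture-of-Experts layer.

    router_logits: one score per expert (a learned router would produce these).
    experts: list of callables; only the k selected experts are evaluated.
    Returns the gated mix of the selected experts' outputs and their indices.
    """
    if not 1 <= k <= len(experts):
        raise ValueError("k must be between 1 and the number of experts")
    probs = softmax(router_logits)
    # Pick the k experts with the highest gate probability (ties keep index order).
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    out = 0.0
    for i in chosen:
        out += (probs[i] / norm) * experts[i](x)  # unselected experts never run
    return out, chosen


# Four trivial "experts" that scale their input by 1, 2, 3, and 4.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
out, chosen = moe_forward(1.0, [0.0, 2.0, 0.0, 2.0], experts, k=2)
print(out, chosen)  # 3.0 [1, 3]
```

With k=2 of 4 experts, only half of the expert functions run for this input; large MoE models apply the same principle to keep per-token compute far below what their total parameter count would suggest.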

These advancements suggest a future where AI not only edits images but understands context, intent, and nuance, leading to more intuitive and creative outputs.

🌐 Open-Source Collaboration

ByteDance’s decision to open-source models like BAGEL fosters a collaborative environment, enabling developers and researchers worldwide to contribute to and benefit from these technologies. This openness accelerates innovation, leading to more refined and versatile AI tools that can be tailored to diverse needs and applications.

🚀 Broader Implications

The integration of reasoning capabilities into image AI models has far-reaching implications:

- Enhanced Creativity: Artists and designers can leverage AI to explore new creative possibilities, pushing the boundaries of visual storytelling.

- Educational Tools: AI can assist in creating interactive learning materials, making complex concepts more accessible through visual representations.

- Accessibility: Individuals with disabilities can benefit from AI tools that interpret and modify images to suit their needs, enhancing digital accessibility.

As AI continues to evolve, the fusion of reasoning and image editing will likely redefine our interaction with digital content, making it more personalized, intuitive, and aligned with human creativity.

📌 Final Thoughts

ByteDance’s BAGEL represents a significant advancement in AI-driven image editing. By integrating reasoning with image manipulation, BAGEL offers a more intuitive, context-aware approach to editing that sets it apart from traditional tools.

The open-source nature of BAGEL encourages collaboration and innovation within the AI community, allowing developers and researchers to explore and build upon its capabilities. This openness fosters a diverse ecosystem of applications, from creative content generation to educational tools, all powered by advanced multimodal reasoning.

As AI continues to evolve, models like BAGEL pave the way for more intelligent and adaptable systems that can understand and interpret human intent with greater accuracy. The future of image editing is not just about modifying pixels but about creating meaningful transformations that align with the user’s vision.