🧰 LoRA-Edit: Controllable First-Frame-Guided Video Editing via Mask-Aware LoRA Fine-Tuning

📚Introduction:

Video editing with diffusion models has achieved remarkable results in generating high-quality edits. However, current methods often rely on large-scale pretraining, which limits their flexibility for specific edits. First-frame-guided editing provides control over the first frame but lacks flexibility over subsequent frames. To address this, we propose a mask-based LoRA (Low-Rank Adaptation) tuning method that adapts pretrained Image-to-Video (I2V) models for flexible video editing. Our approach preserves background regions while enabling controllable propagation of edits. This solution offers efficient and adaptable video editing without altering the model architecture.

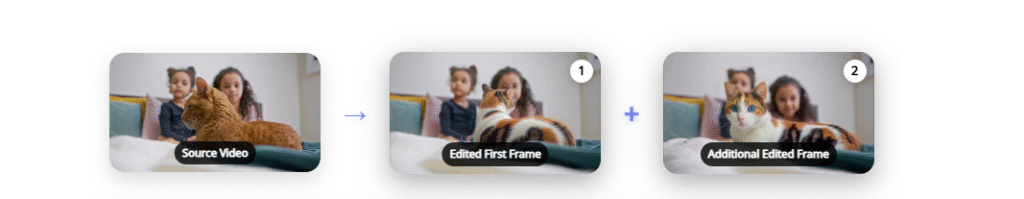

To better steer this process, we incorporate additional references, such as alternate viewpoints or representative scene states, which serve as visual anchors for how content should unfold. We address the control challenge using a mask-driven LoRA tuning strategy that adapts a pre-trained image-to-video model to the editing context. The model must learn from two distinct sources: the input video provides spatial structure and motion cues, while reference images offer appearance guidance. A spatial mask enables region-specific learning by dynamically modulating what the model attends to, ensuring that each area draws from the appropriate source. Experimental results show our method achieves superior video editing performance compared to state-of-the-art methods.

Introduction to LoRA-Edit: Controllable First-Frame-Guided Video Editing via Mask-Aware LoRA Fine-Tuning

LoRA-Edit is an advanced video editing framework that leverages Low-Rank Adaptation (LoRA) fine-tuning to enable controllable, first-frame-guided video editing. Developed by researchers from The Chinese University of Hong Kong and SenseTime Research, LoRA-Edit addresses key limitations of existing video editing models, such as their reliance on large-scale pretraining and their limited flexibility in editing beyond the first frame. Through mask-aware LoRA fine-tuning, LoRA-Edit adapts pretrained Image-to-Video (I2V) models for flexible video editing, preserving background regions while enabling controllable propagation of edits.

What Is LoRA-Edit?

LoRA-Edit is a video editing framework that transforms input videos into edited sequences with high-quality, temporally consistent changes. Unlike approaches that require extensive manual editing or full retraining of models, LoRA-Edit automates the editing process by adapting a pretrained model through lightweight fine-tuning. Its core innovation is mask-aware LoRA fine-tuning, which gives precise control over which parts of the video are edited and how those changes propagate through the sequence.

Key Features Include:

- Mask-Aware LoRA Fine-Tuning: Utilizes spatial masks to guide the model’s attention, enabling region-specific edits and preserving unedited areas.

- First-Frame-Guided Editing: Allows users to specify edits in the first frame, with changes automatically propagated throughout the video.

- Reference Image Integration: Incorporates additional reference images to provide appearance guidance, enhancing the quality and consistency of edits.

- Efficient Adaptation: Adapts pretrained I2V models without the need for extensive retraining, reducing computational costs and time.
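To make the efficiency claim concrete: fully fine-tuning a d×k weight matrix trains d·k parameters, while a rank-r LoRA update B·A (B is d×r, A is r×k) trains only r(d+k). The sketch below works through this arithmetic for one hypothetical 4096×4096 projection with rank 16. These sizes are illustrative assumptions, not LoRA-Edit's actual configuration.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when a d x k weight matrix is fine-tuned directly."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters of a rank-r LoRA update B @ A, with B: d x r and A: r x k."""
    return r * (d + k)


# Hypothetical layer size and rank, chosen only for illustration.
d, k, r = 4096, 4096, 16
full = full_params(d, k)
lora = lora_params(d, k, r)
print(f"full fine-tuning: {full:,} params")               # 16,777,216
print(f"LoRA (r={r}): {lora:,} params ({100 * lora / full:.2f}% of full)")  # 131,072 (0.78%)
```

The savings multiply across every adapted layer, which is why LoRA adaptation of a large I2V model can fit on a single GPU.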

Who Can Benefit from LoRA-Edit?

- Video Editors: Streamline the editing process with automated, high-quality results.

- Content Creators: Enhance videos with consistent and controlled edits, saving time and effort.

- Researchers and Developers: Explore and build upon an open-source framework for video editing applications.

Technologies Powering LoRA-Edit

- Low-Rank Adaptation (LoRA): A technique for efficiently fine-tuning large models. It freezes the pretrained weights and trains small low-rank matrices whose product is added to them, which greatly reduces the number of trainable parameters.

- Image-to-Video Diffusion Models: Generative models that produce high-quality video sequences from input images.

- Spatial Masking: A method to specify which regions of the video should be edited, guiding the model’s attention during the editing process.
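The LoRA idea above can be illustrated with a minimal NumPy sketch of one adapted linear layer. The pretrained weight `W` stays frozen, and only the low-rank factors `A` and `B` would be trained. The class name `LoRALinear`, the rank, the `alpha / r` scaling, and the zero initialization of `B` follow the common LoRA convention and are assumptions here. LoRA-Edit applies LoRA inside a large I2V diffusion model, not to a standalone layer like this one.

```python
import numpy as np


class LoRALinear:
    """Hypothetical sketch: frozen weight W plus a low-rank update (alpha / r) * B @ A."""

    def __init__(self, W: np.ndarray, r: int = 4, alpha: float = 8.0, seed: int = 0):
        d_out, d_in = W.shape
        rng = np.random.default_rng(seed)
        self.W = W                                       # frozen pretrained weight
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))   # trainable down-projection
        self.B = np.zeros((d_out, r))                    # trainable up-projection, starts at zero
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out); base path plus low-rank path
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self) -> np.ndarray:
        """Fold the update into one matrix so inference has no extra cost."""
        return self.W + self.scale * self.B @ self.A


rng = np.random.default_rng(1)
W = rng.normal(size=(8, 6))
layer = LoRALinear(W, r=2)
x = rng.normal(size=(3, 6))

# Because B starts at zero, the adapted layer initially reproduces the frozen one.
assert np.allclose(layer(x), x @ W.T)

# After B changes (set randomly here in place of training), the merged weight
# gives the same output as the two-branch forward pass.
layer.B = rng.normal(size=(8, 2))
assert np.allclose(layer(x), x @ layer.merged_weight().T)
```

Zero-initializing `B` means fine-tuning starts exactly from the pretrained model's behavior, which suits editing tasks that must preserve most of the original content.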

Why Does LoRA-Edit Matter?

LoRA-Edit democratizes high-quality video editing by providing an accessible, efficient, and flexible solution. Its ability to adapt pretrained models for specific editing tasks without extensive retraining makes it a valuable tool for a wide range of users, from professional video editors to hobbyists and researchers. By preserving background regions and enabling precise control over edits, LoRA-Edit ensures that video content maintains its integrity while allowing for creative modifications.

🧠 Architecture

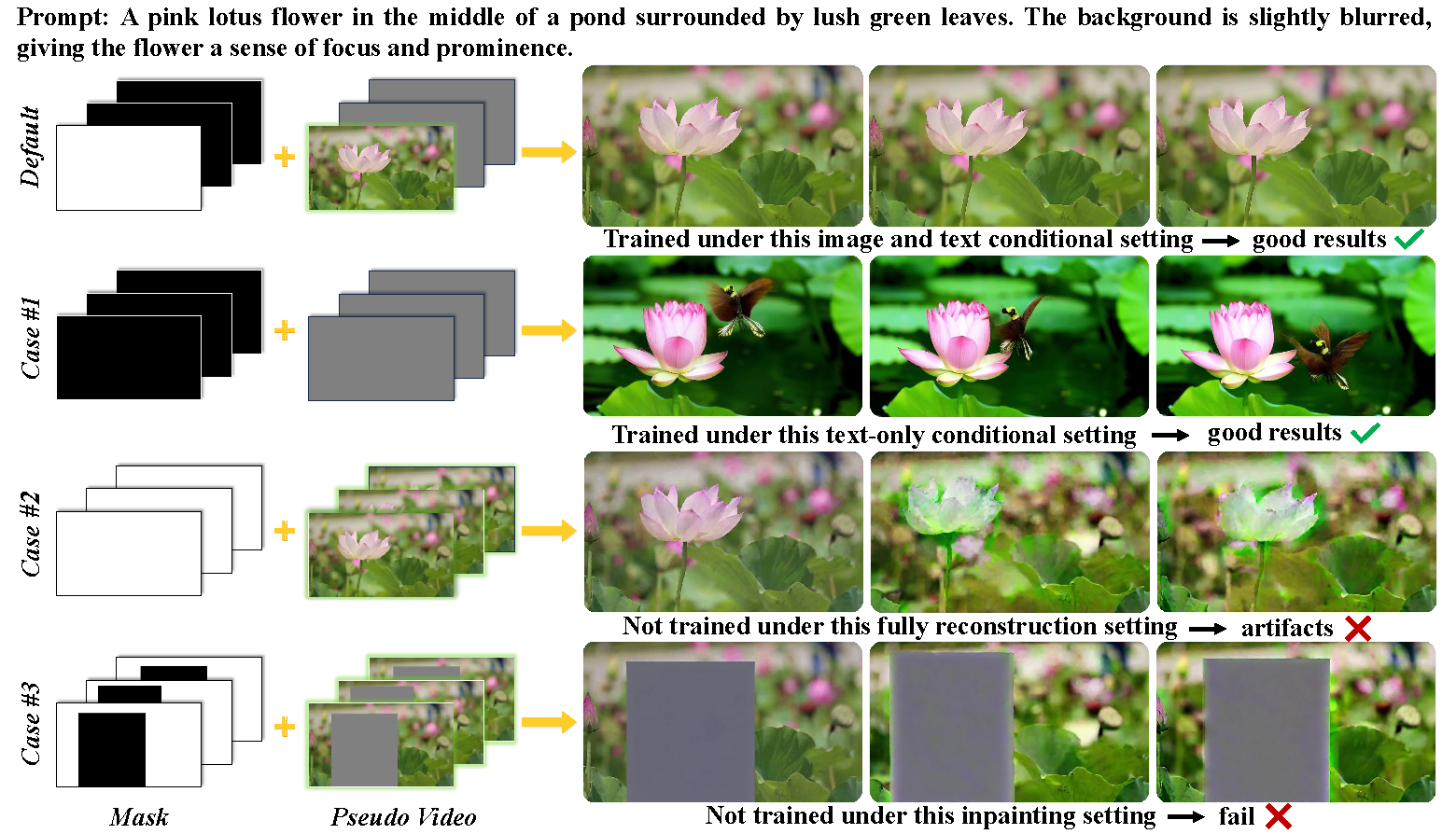

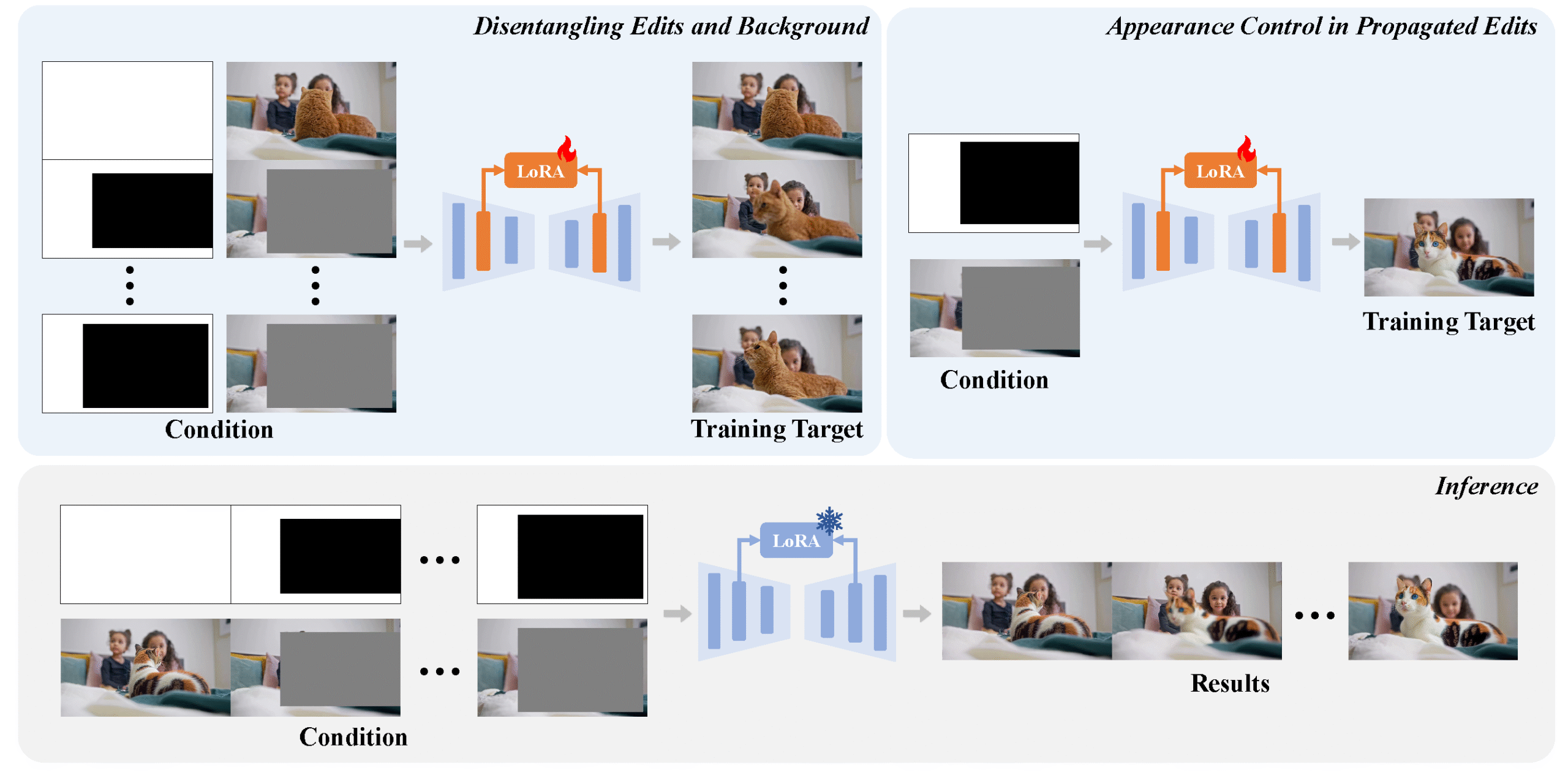

LoRA-Edit introduces a novel approach to video editing by leveraging Low-Rank Adaptation (LoRA) fine-tuning on pretrained Image-to-Video (I2V) diffusion models. The key components of its architecture include:

- Pretrained I2V Model: Utilizes a pretrained I2V model as the base, which is adapted using LoRA modules.

- Mask-Aware LoRA Fine-Tuning: Applies LoRA fine-tuning with spatial masks to control which regions of the video are edited, preserving background areas while modifying foreground elements.

- First-Frame Guidance: Uses the edited first frame of the video as the reference that guides the editing process throughout the sequence.

- Additional Reference Frames: Incorporates additional edited frames to enhance the appearance consistency across the video.

- Training Strategy: The model is trained using a two-phase approach: first, to disentangle edits from the background, and second, to refine the appearance of edited regions.
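The idea that "each area draws from the appropriate source" can be illustrated with a masked training loss. The NumPy sketch below is a hypothetical illustration, not the paper's formulation. It assumes the source video supervises only the region outside the edit mask (structure and motion), and that optional reference frames supervise only the edited region (appearance). The function names `masked_mse` and `mask_aware_loss`, the array shapes, and `ref_weight` are all assumptions.

```python
import numpy as np


def masked_mse(pred: np.ndarray, target: np.ndarray, mask: np.ndarray, eps: float = 1e-8) -> float:
    """Mean squared error averaged only over positions where mask == 1.

    pred, target: (frames, height, width, channels)
    mask:         (frames, height, width), values in {0, 1}
    """
    weights = mask[..., None]                   # broadcast the mask over channels
    sq_err = weights * (pred - target) ** 2
    n_valid = weights.sum() * pred.shape[-1]    # number of supervised elements
    return float(sq_err.sum() / max(n_valid, eps))


def mask_aware_loss(video_pred, video_target, edit_mask,
                    ref_pred=None, ref_target=None, ref_mask=None, ref_weight=1.0):
    """Hypothetical combination: the video term ignores the edit region, and the
    optional reference term looks only at the edit region."""
    loss = masked_mse(video_pred, video_target, 1.0 - edit_mask)
    if ref_pred is not None:
        loss += ref_weight * masked_mse(ref_pred, ref_target, ref_mask)
    return loss


rng = np.random.default_rng(0)
target = rng.normal(size=(2, 4, 4, 3))
pred = target.copy()
edit_mask = np.zeros((2, 4, 4))
edit_mask[:, 1:3, 1:3] = 1.0
pred[:, 1:3, 1:3, :] += 5.0                     # large error only inside the edit region
print(mask_aware_loss(pred, target, edit_mask)) # 0.0: errors inside the mask are ignored
```

In this sketch, the source video is never asked to reconstruct the original content of the region being edited, so that content cannot leak into the edit.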

🔬 Comparison with Other Models

| Model | Editing Guidance | Background Preservation | Appearance Consistency | Notable Features |
|---|---|---|---|---|
| LoRA-Edit | First-frame + Mask | ✅ High | ✅ High | Mask-aware LoRA fine-tuning |
| AnyV2V | First-frame | ❌ Moderate | ❌ Low | Direct frame-to-frame editing |
| Go-with-the-Flow | First-frame | ❌ Low | ❌ Low | Flow-based editing |
| I2VEdit | First-frame | ❌ Moderate | ❌ Moderate | Image-to-video diffusion model |
| VACE | Reference-guided | ✅ High | ✅ High | Video-to-video editing |
| Kling 1.6 | Reference-guided | ✅ High | ✅ High | Reference-based editing |

Note: ✅ indicates high performance, ❌ indicates lower performance.

📂 Open Source Details

LoRA-Edit is open-source and available on GitHub. The repository includes the following:

- Model Weights: Pretrained weights for the I2V model and LoRA modules.

- Training Code: Scripts for fine-tuning the model with mask-aware LoRA.

- Inference Code: Tools to apply the trained model for video editing tasks.

- Documentation: Guides on setup, usage, and customization.

The project is maintained by researchers from The Chinese University of Hong Kong and SenseTime Research.

💻 Minimum Hardware and Software Specifications

Hardware:

- GPU: NVIDIA RTX 3090 or equivalent with at least 24GB VRAM.

- CPU: Intel i7 or AMD Ryzen 7 series.

- RAM: 32GB or more.

- Storage: SSD with at least 100GB free space.

Software:

- Operating System: Linux (Ubuntu 20.04 or later) or Windows 10/11.

- Python: 3.8 or later.

- CUDA: 11.2 or later for GPU acceleration.

- Dependencies: PyTorch, NumPy, OpenCV, and other libraries as specified in the repository.
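A quick way to check a machine against these minimums is a small Python script. The sketch below checks the Python version and, only if PyTorch is installed, checks whether a CUDA GPU is visible and how much memory it has. The thresholds come from the lists above. The function name `check_environment` is hypothetical and is not part of the LoRA-Edit repository.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 8)   # from the software requirements above
MIN_VRAM_GB = 24      # from the hardware requirements above


def check_environment() -> dict:
    """Report whether this machine meets the listed minimums (GPU checks need PyTorch)."""
    report = {
        "python_ok": sys.version_info[:2] >= MIN_PYTHON,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
        "vram_gb": None,
    }
    if report["torch_installed"]:
        import torch
        if torch.cuda.is_available():
            report["cuda_available"] = True
            props = torch.cuda.get_device_properties(0)
            # Decimal GB, matching how "24GB" cards are marketed.
            report["vram_gb"] = round(props.total_memory / 1e9, 1)
    report["vram_ok"] = report["vram_gb"] is not None and report["vram_gb"] >= MIN_VRAM_GB
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```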

🚀 How to Install LoRA-Edit: Your Guide to Seamless Video Editing

LoRA-Edit is an innovative framework for controllable, first-frame-guided video editing via mask-aware LoRA fine-tuning. To get started, follow the steps below to set up LoRA-Edit on your system.

🛠️ Prerequisites

Before installing LoRA-Edit, ensure your system meets the following requirements:

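- Operating System: Linux is recommended. Windows support may require additional configuration, and macOS cannot meet the CUDA requirement below because NVIDIA no longer provides CUDA for macOS.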

- Python Version: 3.8 or higher.

- CUDA Version: 11.3 or higher (for GPU support).

- Hardware: A GPU with at least 24GB VRAM is recommended for optimal performance.

🔧 Installation Steps

- Clone the Repository: Open your terminal and run the following command to clone the LoRA-Edit repository:

```bash
git clone https://github.com/cjeen/LoRAEdit.git
```

- Navigate to the Project Directory: Change into the LoRA-Edit directory:

```bash
cd LoRAEdit
```

- Install Dependencies: Install the required Python packages using pip:

```bash
pip install -r requirements.txt
```

- Set Up Environment Variables: Configure the necessary environment variables. This may include setting paths for model weights and other resources. Refer to the repository’s documentation for specific environment variable configurations.

- Run the Application: Start the LoRA-Edit application:

```bash
python app.py
```

📂 Folder Structure

After installation, your project directory should look like this:

```plaintext
LoRAEdit/
├── app.py
├── requirements.txt
├── models/
│   └── pretrained_model.pth
├── assets/
│   └── sample_video.mp4
└── README.md
```

🧪 Testing the Installation

To verify that LoRA-Edit is working correctly, you can run a sample video through the application. Ensure that the sample video is placed in the assets/ directory and that the model weights are correctly configured. Refer to the repository’s documentation for detailed instructions on running tests and troubleshooting common issues.
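Before running a sample video, a short pre-flight check can confirm that the expected files are in place. The sketch below only tests for the paths in the folder structure shown in this guide. That layout may not match the actual repository, so treat `EXPECTED_PATHS` and the hypothetical `missing_paths` helper as assumptions to adjust.

```python
from pathlib import Path

# Paths taken from the folder structure in this guide; the real repository
# layout may differ, so adjust this list as needed.
EXPECTED_PATHS = [
    "app.py",
    "requirements.txt",
    "models/pretrained_model.pth",
    "assets/sample_video.mp4",
    "README.md",
]


def missing_paths(root: str = ".") -> list:
    """Return the expected files that do not exist under root."""
    base = Path(root)
    return [p for p in EXPECTED_PATHS if not (base / p).exists()]


if __name__ == "__main__":
    missing = missing_paths("LoRAEdit")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All expected files found.")
```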

🔗 Additional Resources

- GitHub Repository: https://github.com/cjeen/LoRAEdit

- Documentation: Detailed setup and usage instructions are available in the repository’s README.md file.

🔮 Future Work

While LoRA-Edit demonstrates significant advancements in controllable video editing, several avenues remain for further enhancement:

- Extended Video Lengths: Optimizing the model to handle longer video sequences without compromising quality.

- Real-Time Editing: Developing techniques to enable real-time video editing, facilitating interactive applications.

- Generalization Across Domains: Improving the model’s ability to generalize across diverse video content and styles.

- User-Friendly Interfaces: Creating intuitive interfaces that allow users to easily specify editing regions and reference images.

- Enhanced Temporal Consistency: Refining the model to maintain consistent edits across frames, especially in dynamic scenes.

📚 References

- Gao, C., Ding, L., Cai, X., Huang, Z., Wang, Z., & Xue, T. (2025). LoRA-Edit: Controllable First-Frame-Guided Video Editing via Mask-Aware LoRA Fine-Tuning. arXiv.

- Li, M., Lin, L., Liu, Y., Zhu, Y., & Li, Y. (2025). Qffusion: Controllable Portrait Video Editing via Quadrant-Grid Attention Learning. arXiv.

- Ma, Y., Cun, X., Liang, S., Xing, J., He, Y., Qi, C., Chen, S., & Chen, Q. (2023). MagicStick: Controllable Video Editing via Control Handle Transformations. arXiv.

- Huang, Z.-L., Liu, Y., Qin, C., Wang, Z., Zhou, D., Li, D., & Barsoum, E. (2025). Edit as You See: Image-guided Video Editing via Masked Motion Modeling. arXiv.

✅ Conclusion

LoRA-Edit represents a significant step forward in video editing technology, offering a flexible and efficient approach to first-frame-guided video editing. By leveraging mask-aware LoRA fine-tuning, it enables precise control over edits while preserving background regions, setting it apart from existing methods. As research continues, LoRA-Edit has the potential to become a cornerstone in the field of video editing, paving the way for more advanced and user-friendly editing tools.