🧠 From 2D to 3D: DreamCube’s Multi-plane Synchronization for Immersive Panoramas

🧠 Introduction

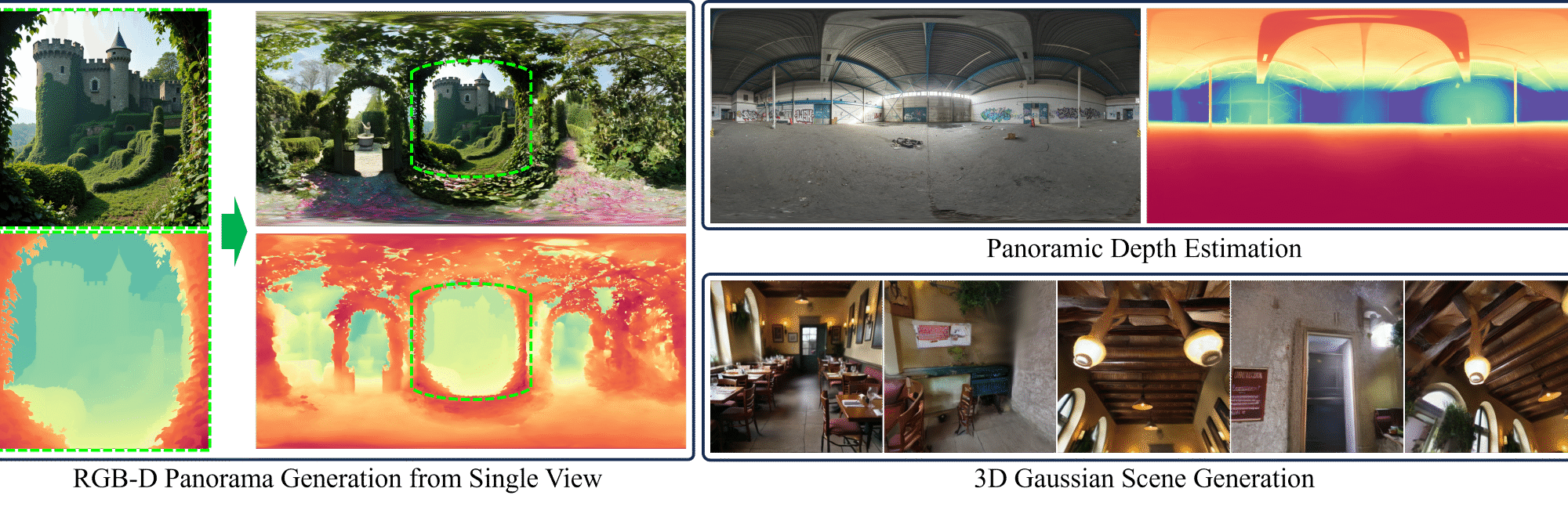

DreamCube is a framework for generating high-quality 3D panoramic scenes from a single-view RGB-D input and multi-view text prompts. It addresses the scarcity of 3D panoramic training data by reusing pre-trained 2D foundation models: through Multi-plane Synchronization, DreamCube adapts these 2D models to omnidirectional content generation, producing diverse appearances and accurate geometry while maintaining multi-view consistency.

🧩 Description

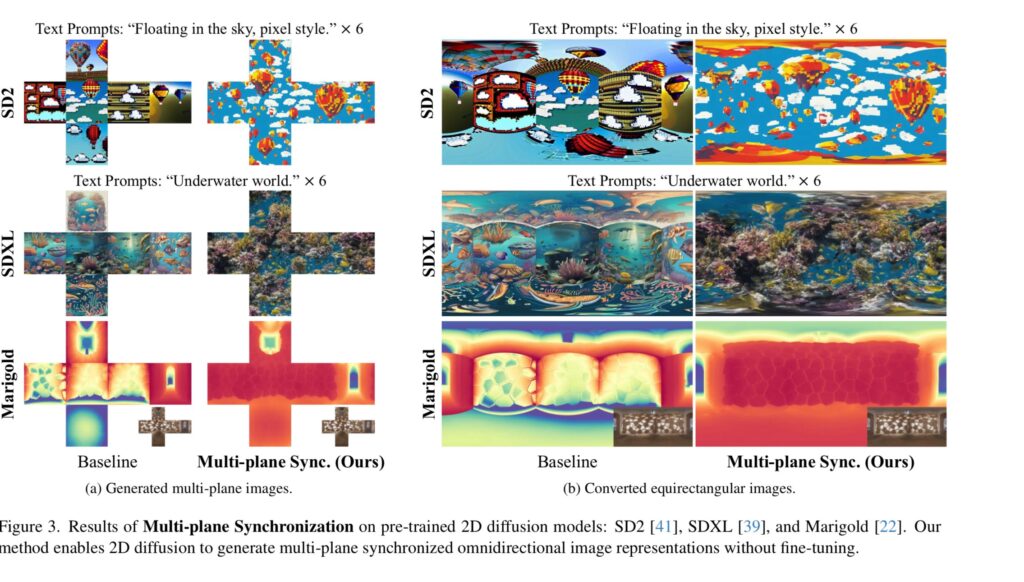

Multi-plane Synchronization is a technique introduced in DreamCube that adapts 2D spatial operators, such as attention, 2D convolution, and group normalization, to multi-plane panoramic representations such as cubemaps. Synchronizing these operators across the planes lets a pretrained 2D model treat a cubemap as one continuous panorama, which supports RGB-D panorama generation, panoramic depth estimation, and 3D scene generation.
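Group normalization is the simplest of these operators to illustrate. A standard GroupNorm applied to each face separately computes a mean and variance per face, so faces with different overall brightness are pushed toward the same statistics. A synchronized version computes one set of statistics over all six faces. The pure-Python sketch below assumes a single channel group and omits the learned scale and shift; the function names are hypothetical and not taken from the DreamCube code.

```python
import math
from typing import List

Face = List[float]  # flattened activations of one cubemap face for one channel group


def group_norm_per_face(faces: List[Face], eps: float = 1e-5) -> List[Face]:
    """Unsynchronized baseline: every face is normalized with its own statistics."""
    out = []
    for face in faces:
        mean = sum(face) / len(face)
        var = sum((x - mean) ** 2 for x in face) / len(face)
        out.append([(x - mean) / math.sqrt(var + eps) for x in face])
    return out


def group_norm_synced(faces: List[Face], eps: float = 1e-5) -> List[Face]:
    """Synchronized variant: one mean/variance is computed over all faces together."""
    values = [x for face in faces for x in face]
    mean = sum(values) / len(values)
    var = sum((x - mean) ** 2 for x in values) / len(values)
    scale = 1.0 / math.sqrt(var + eps)
    return [[(x - mean) * scale for x in face] for face in faces]


if __name__ == "__main__":
    # Six toy faces: one bright face (e.g. sky) and five darker ones.
    faces = [[0.9, 1.0, 1.1, 1.0]] + [[0.1, 0.2, 0.3, 0.2] for _ in range(5)]
    per_face = group_norm_per_face(faces)
    synced = group_norm_synced(faces)
    # Per-face statistics erase the brightness gap: both values print as -1.413.
    print(round(per_face[0][0], 3), round(per_face[1][0], 3))
    # Shared statistics keep the bright face brighter than the dark ones: True.
    print(synced[0][0] > synced[1][0])
```

In a real network the same idea would apply to tensors shaped (faces, channels, height, width) by pooling the statistics over the face dimension as well; DreamCube's actual implementation may differ in detail.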

DreamCube’s architecture maximizes the reuse of 2D foundation model priors, achieving high-fidelity and geometrically accurate 3D panoramas. Its capabilities extend beyond image generation to include depth estimation and 3D scene reconstruction, making it a versatile tool for various applications in virtual reality, gaming, and simulation.

👤 About the Creators

DreamCube was developed by researchers from The University of Hong Kong, Tencent, and Astribot. The authors are:

- Yukun Huang: Lead author and primary contributor to the development of DreamCube. His research focuses on generative models and 3D scene synthesis. He has been involved in several notable projects, including DreamComposer, which enhances view-aware diffusion models by injecting multi-view conditions.

- Yanning Zhou: Contributed to the development and implementation of the multi-plane synchronization technique, which is central to DreamCube’s functionality.

- Jianan Wang: Provided expertise in 3D scene generation and contributed to the integration of the model with various 3D datasets.

- Kaiyi Huang: Assisted in the optimization of the model’s performance and its application to real-world scenarios.

- Xihui Liu: Collaborated on the overall design and evaluation of the model, ensuring its effectiveness across different tasks.

Together, the team showed how existing 2D models can be repurposed for high-quality 3D panorama generation.

🆚 Comparison with Other Models

| Feature | DreamCube | CubeDiff | PanoFree |
|---|---|---|---|
| Input Type | Single-view RGB-D images & multi-view text prompts | Single-view images & text prompts | Text prompts |
| Generation Approach | Multi-plane synchronization of 2D diffusion models to generate 3D panoramas | Adapts diffusion models to generate cubemaps by treating each face as a standard perspective image | Iterative warping and inpainting for multi-view image generation without fine-tuning |
| Multi-view Consistency | High | Moderate | High |
| Text Control | Via multi-view text prompts | Fine-grained per-face text control | Implicit |
| Depth Estimation | Yes | No | No |
| 3D Scene Generation | Yes | No | No |
| Open Source | Yes (GitHub) | Yes (GitHub) | Yes (GitHub) |
| Notable Strength | Seamless integration of 2D priors into 3D generation | High-resolution panorama generation with fine-grained text control | Efficient multi-view image generation without the need for fine-tuning |

Of the three models compared, only DreamCube produces depth alongside color, which extends it from panoramic image generation to depth estimation and 3D scene generation. Multi-plane synchronization is the component that makes this possible, adapting 2D diffusion models to cubemaps while aiming for diverse appearance, accurate geometry, and multi-view consistency.

💻 Hardware and Software Requirements

To effectively run DreamCube, the following hardware and software specifications are recommended:

🖥️ Hardware Requirements

- Processor (CPU): Intel Core i7 or AMD Ryzen 7 (or equivalent)

- Memory (RAM): At least 32 GB

- Graphics Processing Unit (GPU): NVIDIA GeForce RTX 30xx series or higher with at least 8 GB VRAM

- Storage: Minimum 100 GB of free disk space

🛠️ Software Requirements

- Operating System: Linux (Ubuntu 20.04 or later)

- Python Version: 3.8 or higher

- Dependencies: PyTorch, CUDA (for GPU acceleration), and other relevant libraries as specified in the project’s documentation

Meeting these specifications should allow DreamCube to run smoothly; the exact dependency versions are listed in the repository.
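To compare a machine against the recommendations above, a short Python check can help. This is a sketch that hard-codes the thresholds listed here (Python 3.8 and 8 GB of VRAM); it is not part of the DreamCube repository, and the function name `check_environment` is hypothetical. It relies only on `sys`, `importlib.util`, and, when installed, PyTorch's `torch.cuda` API.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 8), min_vram_gb=8.0) -> dict:
    """Report whether Python, PyTorch, and the GPU meet the recommended minimums."""
    report = {"python_ok": sys.version_info[:2] >= min_python}
    if importlib.util.find_spec("torch") is None:
        # PyTorch is missing, so no GPU check is possible.
        report.update(torch_installed=False, cuda_available=False, vram_ok=False)
        return report
    import torch  # imported lazily so the check also runs where torch is absent

    report["torch_installed"] = True
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        total_bytes = torch.cuda.get_device_properties(0).total_memory
        report["vram_gb"] = total_bytes / 1024 ** 3
        report["vram_ok"] = report["vram_gb"] >= min_vram_gb
    else:
        report["vram_ok"] = False
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Only the first GPU (index 0) is inspected; on multi-GPU machines the loop would need to cover every device.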

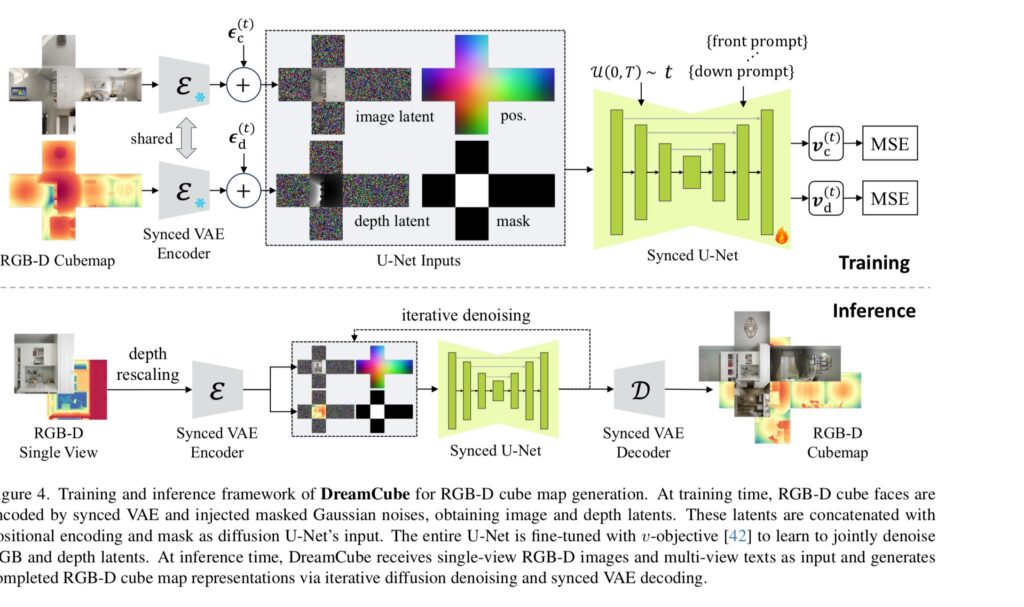

🧠 Model Working: DreamCube’s Architecture

DreamCube is a diffusion-based framework designed for 3D panorama generation. It leverages a technique called Multi-plane Synchronization to adapt 2D diffusion models for multi-plane panoramic representations, such as cubemaps. This adaptation enables the generation of high-quality and diverse omnidirectional content from single-view RGB-D inputs and multi-view text prompts.
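Because DreamCube works on cubemaps while panoramas are commonly stored as equirectangular images, it helps to see how the two relate geometrically. The sketch below maps a pixel on a cubemap face to a unit viewing direction and then to equirectangular pixel coordinates. The face names, their orientation (y up, a (forward, right, up) basis per face), and the function names are illustrative assumptions; DreamCube's code may use a different layout.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Assumed convention: y is up; each face is (forward, right, up) as seen from the
# cube center. Real codebases may order or orient faces differently.
FACES: Dict[str, Tuple[Vec3, Vec3, Vec3]] = {
    "+x": ((1, 0, 0), (0, 0, -1), (0, 1, 0)),
    "-x": ((-1, 0, 0), (0, 0, 1), (0, 1, 0)),
    "+y": ((0, 1, 0), (1, 0, 0), (0, 0, -1)),
    "-y": ((0, -1, 0), (1, 0, 0), (0, 0, 1)),
    "+z": ((0, 0, 1), (1, 0, 0), (0, 1, 0)),
    "-z": ((0, 0, -1), (-1, 0, 0), (0, 1, 0)),
}


def face_pixel_to_direction(face: str, i: int, j: int, size: int) -> Vec3:
    """Center of pixel (row i, column j) on a size x size face -> unit direction."""
    forward, right, up = FACES[face]
    u = 2.0 * (j + 0.5) / size - 1.0  # left -> right in [-1, 1]
    v = 1.0 - 2.0 * (i + 0.5) / size  # top row -> +1, bottom row -> -1
    d = [f + u * r + v * w for f, r, w in zip(forward, right, up)]
    n = math.sqrt(sum(c * c for c in d))
    return (d[0] / n, d[1] / n, d[2] / n)


def direction_to_equirect(d: Vec3, width: int, height: int) -> Tuple[float, float]:
    """Unit direction -> (x, y) pixel coordinates on a width x height equirect image."""
    x, y, z = d
    lon = math.atan2(x, z)                   # 0 at +z, increasing towards +x
    lat = math.asin(max(-1.0, min(1.0, y)))  # +pi/2 straight up (+y)
    px = (lon / (2 * math.pi) + 0.5) * width
    py = (0.5 - lat / math.pi) * height
    return px, py


if __name__ == "__main__":
    # With size=1 the single pixel sits exactly at the face center.
    for name in ("+z", "+x", "+y"):
        print(name, direction_to_equirect(face_pixel_to_direction(name, 0, 0, 1), 2048, 1024))
```

Sampling one image at the coordinates computed from the other is the usual way to convert between cubemap and equirectangular panoramas.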

🔄 Multi-plane Synchronization

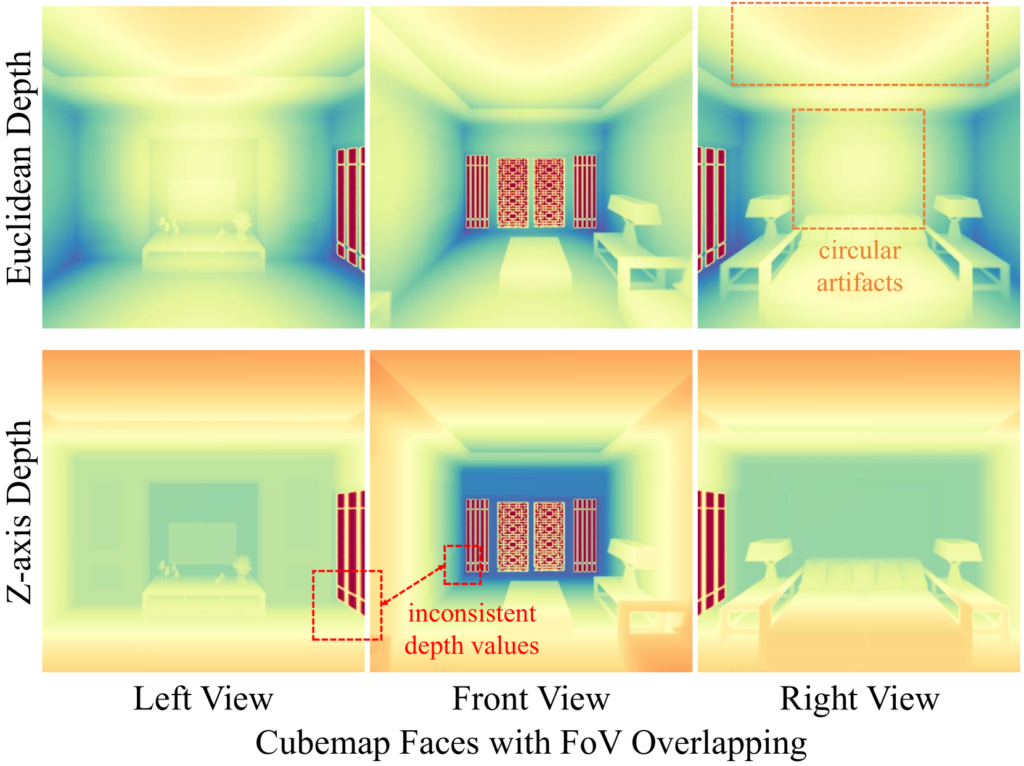

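Multi-plane Synchronization adapts 2D spatial operators, such as attention, 2D convolution, and group normalization, so that they act across all six faces of a cubemap instead of on each face in isolation. Run face by face, these operators cannot see neighboring faces, which tends to produce seams and inconsistent content at face boundaries. Once synchronized, attention can span tokens from every face, convolutions draw their border padding from adjacent faces, and normalization statistics are shared across faces. This lets the model process cubemaps seamlessly for tasks such as RGB-D panorama generation, depth estimation, and 3D scene reconstruction.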
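Synchronized self-attention can be sketched in a similar spirit: instead of running attention inside each face, the tokens of all six faces are concatenated into one sequence so that every token can attend across face boundaries. The pure-Python sketch below assumes a single head and identity query/key/value projections purely for readability; a real diffusion U-Net uses learned projections and multi-head attention, and the helper names are hypothetical.

```python
import math
from typing import List

Vec = List[float]


def attention(tokens: List[Vec]) -> List[Vec]:
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    dim = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in tokens]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[d] for w, v in zip(weights, tokens)) / total for d in range(dim)])
    return out


def attention_per_face(faces: List[List[Vec]]) -> List[List[Vec]]:
    """Unsynchronized: tokens only attend to tokens on their own face."""
    return [attention(face) for face in faces]


def attention_synced(faces: List[List[Vec]]) -> List[List[Vec]]:
    """Synchronized: attend over the tokens of all faces, then split back per face."""
    flat = [tok for face in faces for tok in face]
    mixed = attention(flat)
    out, start = [], 0
    for face in faces:
        out.append(mixed[start:start + len(face)])
        start += len(face)
    return out


if __name__ == "__main__":
    # Six faces with two tokens each; each face carries its own one-hot "content".
    faces = [[[1.0 if d == f else 0.0 for d in range(6)] for _ in range(2)] for f in range(6)]
    per_face = attention_per_face(faces)
    synced = attention_synced(faces)
    print(per_face[0][0][1])      # 0.0: face 0 never sees face 1
    print(synced[0][0][1] > 0.0)  # True: face 0 now mixes in content from face 1
```

Only the grouping of tokens changes between the two versions, not the operator's parameters, which illustrates how pretrained 2D weights can remain usable after synchronization.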

🖼️ RGB-D Cubemap Generation

Building upon Multi-plane Synchronization, DreamCube generates RGB-D cubemaps by conditioning on single-view RGB-D inputs and multi-view text prompts. This method allows the model to produce panoramic images with accurate depth information, enabling applications in virtual reality, gaming, and simulation.
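One way to see why RGB-D cubemaps are useful for 3D scene generation: each face is an ordinary 90° perspective view from the cube center, so every pixel with a depth value can be lifted to a 3D point. The sketch below does this for one face. It assumes planar depth measured along the face's forward axis and a y-up (forward, right, up) basis per face; these conventions and the function name are illustrative assumptions, not DreamCube's actual code, and the paper's own 3D scene generation step may use a different reconstruction.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def backproject_face(
    depth: List[List[float]],
    forward: Sequence[float],
    right: Sequence[float],
    up: Sequence[float],
) -> List[Point]:
    """Lift one square cubemap face with per-pixel depth to 3D points (row-major order).

    Assumes a 90-degree-FOV pinhole view from the cube center and that depth[i][j]
    is planar depth along `forward`, not Euclidean distance along the ray.
    """
    size = len(depth)
    points = []
    for i, row in enumerate(depth):
        for j, z in enumerate(row):
            u = 2.0 * (j + 0.5) / size - 1.0  # horizontal image coordinate in [-1, 1]
            v = 1.0 - 2.0 * (i + 0.5) / size  # vertical image coordinate, +1 at the top
            # Ray whose component along `forward` is 1, so scaling by z yields depth z.
            ray = [f + u * r + w * v for f, r, w in zip(forward, right, up)]
            points.append(tuple(z * c for c in ray))
    return points


if __name__ == "__main__":
    # A 2x2 "+z" face whose pixels all lie 2 units in front of the camera.
    pts = backproject_face([[2.0, 2.0], [2.0, 2.0]], (0, 0, 1), (1, 0, 0), (0, 1, 0))
    for p in pts:
        print(p)
```

Repeating this for all six faces, each with its own basis, and attaching the RGB values gives a colored point cloud. If a model's depth is instead Euclidean distance, the ray would be normalized before scaling.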

🛠️ Installation Guide

To set up and run DreamCube, follow these steps:

1. Clone the Repository

Begin by cloning the official DreamCube repository:

```bash
git clone https://github.com/Yukun-Huang/DreamCube.git
cd DreamCube
```

2. Set Up the Environment

Create a virtual environment to manage dependencies:

```bash
python -m venv dreamcube-env
source dreamcube-env/bin/activate  # On Windows, use dreamcube-env\Scripts\activate
```

Install the required dependencies:

```bash
pip install -r requirements.txt
```

3. Download Pretrained Models

Download the necessary pretrained models and place them in the appropriate directories as specified in the repository's documentation.

4. Run the Model

After setting up the environment and downloading the models, you can run the DreamCube model using the provided scripts or through the Gradio interface for interactive use.

For more detailed information and updates, refer to the official DreamCube GitHub repository and the project page.

🔮 Future Work

While DreamCube represents a significant advancement in 3D panorama generation, several avenues for future research and development remain:

- Dynamic Scene Generation: Extending DreamCube to handle dynamic scenes, incorporating temporal information to generate 4D panoramas, could enhance its applicability in virtual reality and gaming.

- Improved Depth Estimation: Enhancing the accuracy of depth estimation in generated panoramas would contribute to more realistic 3D reconstructions.

- Interactive Controls: Implementing more intuitive user interfaces for real-time editing and manipulation of generated panoramas could broaden DreamCube’s usability.

- Cross-Domain Generalization: Training DreamCube on more diverse datasets could improve its performance across different domains and environments.

✅ Conclusion

DreamCube introduces a novel approach to 3D panorama generation by applying Multi-plane Synchronization to adapt 2D diffusion models for omnidirectional content creation. This method facilitates the generation of high-quality, diverse, and geometrically accurate 3D panoramas from single-view RGB-D inputs and multi-view text prompts. Extensive experiments demonstrate its effectiveness in panoramic image generation, depth estimation, and 3D scene reconstruction. DreamCube’s innovative architecture and open-source implementation provide a valuable tool for researchers and practitioners in the fields of computer vision and graphics.

📚 References

- Huang, Y., Zhou, Y., Wang, J., Huang, K., & Liu, X. (2025). DreamCube: 3D Panorama Generation via Multi-plane Synchronization. arXiv preprint arXiv:2506.17206. Retrieved from https://arxiv.org/abs/2506.17206

- Kalischek, N., Oechsle, M., Manhardt, F., Henzler, P., Schindler, K., & Tombari, F. (2025). CubeDiff: Repurposing Diffusion-Based Image Models for Panorama Generation. arXiv preprint arXiv:2501.17162. Retrieved from https://arxiv.org/abs/2501.17162

- Wu, Z., Li, Y., Yan, H., Shang, T., Sun, W., Wang, S., Cui, R., Liu, W., Sato, H., & Li, H. (2024). BlockFusion: Expandable 3D Scene Generation using Latent Tri-plane Extrapolation. arXiv preprint arXiv:2401.17053. Retrieved from https://arxiv.org/abs/2401.17053

- Cao, A., & Johnson, J. (2023). HexPlane: A Fast Representation for Dynamic Scenes. arXiv preprint arXiv:2301.09632. Retrieved from https://arxiv.org/abs/2301.09632

- Liu, A., Li, Z., Chen, Z., Li, N., Xu, Y., & Plummer, B. (2024). PanoFree: Tuning-Free Holistic Multi-view Image Generation with Cross-view Self-Guidance. Proceedings of the European Conference on Computer Vision (ECCV). Retrieved from https://eccv2024.ecva.net/virtual/2024/session/94

- Xu, A., Ling, Y., & Zhang, Z. (2024). 4K4DGen: Panoramic 4D Generation at 4K Resolution. arXiv preprint arXiv:2406.13527v1. Retrieved from https://arxiv.org/abs/2406.13527v1

- Li, L., Zhang, Z., Li, Y., Xu, J., Hu, W., Li, X., Cheng, W., Gu, J., Xue, T., & Shan, Y. (2025). NVComposer: Boosting Generative Novel View Synthesis with Multiple Sparse and Unposed Images. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Retrieved from https://cvpr.thecvf.com/virtual/2025/day/6/13

- Kant, Y., Siarohin, A., Wu, Z., Vasilkovsky, M., Qian, G., Ren, J., Guler, R. A., Ghanem, B., Tulyakov, S., & Gilitschenski, I. (2024). SPAD: Spatially Aware Multi-View Diffusers. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Retrieved from https://cvpr2023.thecvf.com/virtual/2024/session/32085