🤗 LongWriter-Zero ✍️: Harnessing Reinforcement Learning for Ultra-Long Text Generation

Introduction:

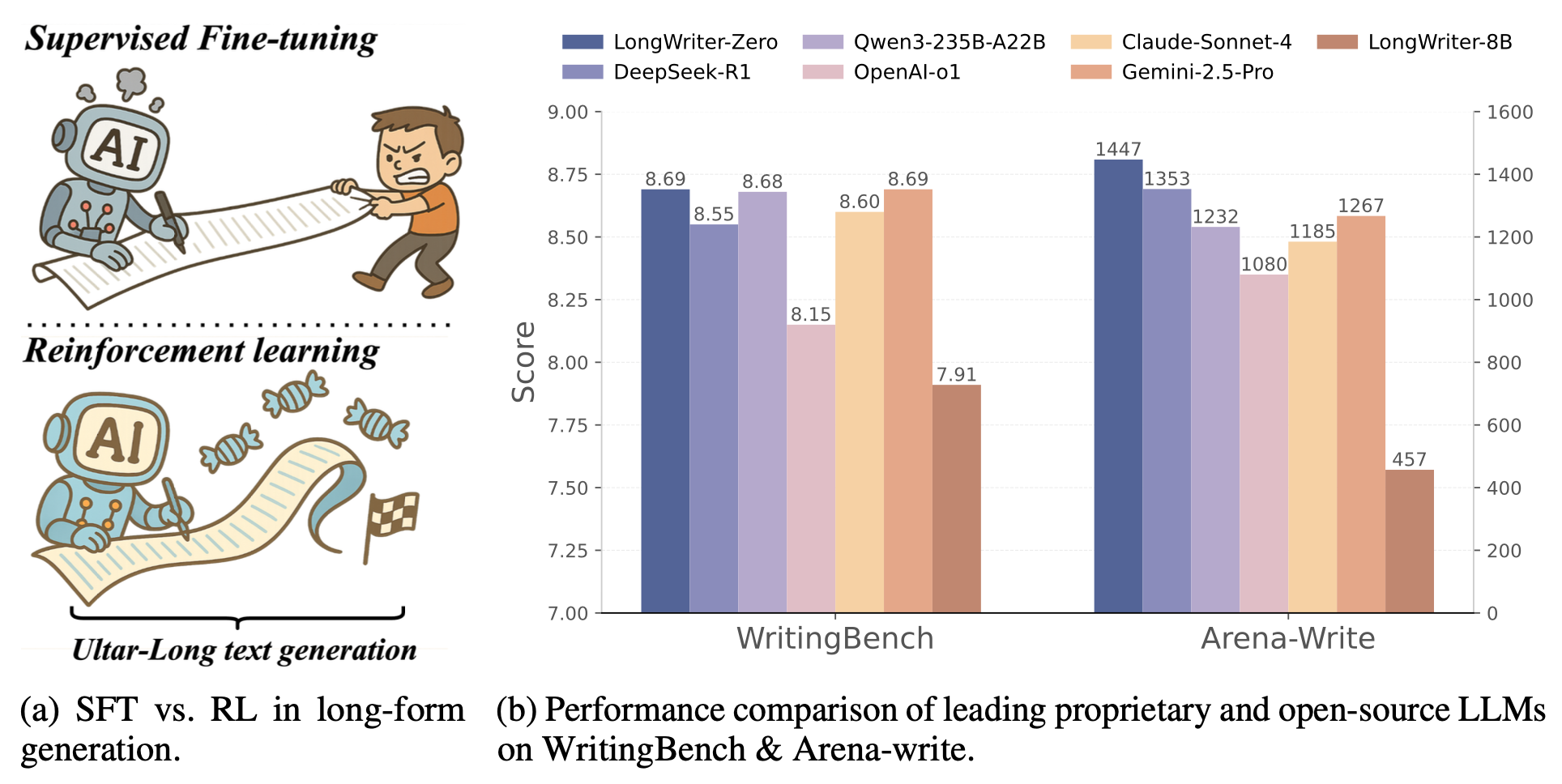

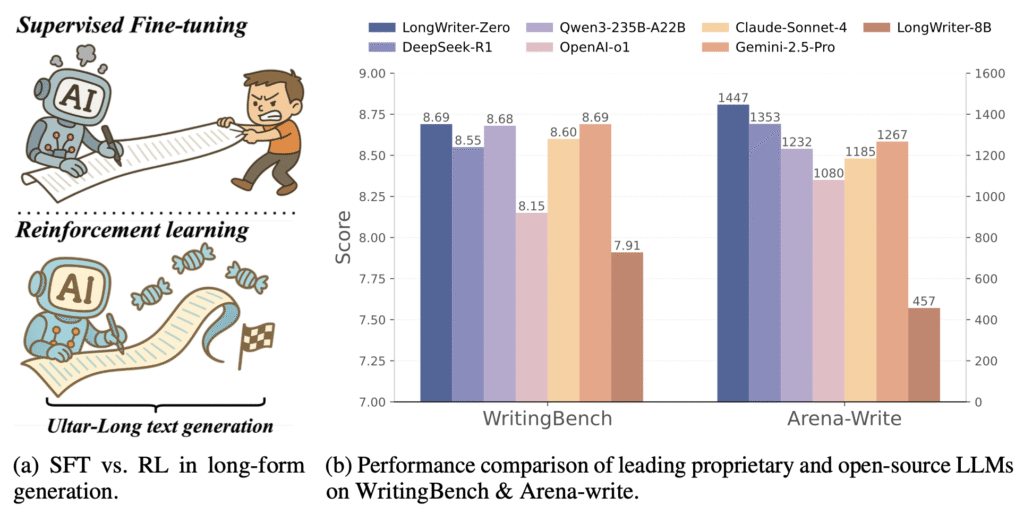

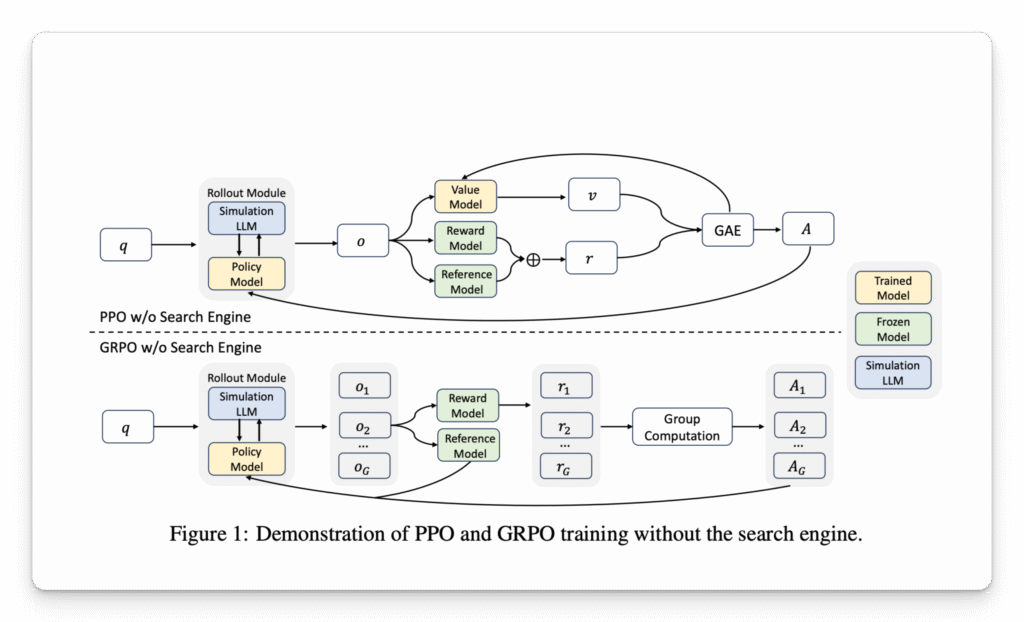

In natural language processing, generating coherent, contextually rich content over extended passages has long been a formidable challenge. Language models often falter when asked to produce ultra-long texts, largely because of limitations in their training data and architectures. LongWriter-Zero, developed by a team that includes researchers from Tsinghua University's KEG Lab, takes a different route. The 32-billion-parameter model is trained with reinforcement learning (RL) alone, without supervised fine-tuning (SFT) on synthetic long-output datasets. It uses Group Relative Policy Optimization (GRPO), a variant of Proximal Policy Optimization (PPO) that replaces PPO's learned value model with a baseline computed from a group of responses sampled for the same prompt. Training relies on a composite reward that scores each output for length, writing quality, and format (structural integrity), steering the model toward long-form content that is both fluent and logically coherent. LongWriter-Zero can generate passages exceeding 10,000 tokens and reports strong results on long-form writing benchmarks such as WritingBench and Arena-Write, with promising applications in content creation, academic writing, and technical documentation.
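The two training ingredients named above, a composite reward and group-relative advantages, can be illustrated with a minimal sketch. Assumptions: the equal-weight combination in the hypothetical `composite_reward` and all scores are illustrative, not the paper's exact formulation. The advantage computation (reward minus the group mean, divided by the group standard deviation) follows the standard GRPO formulation; in full GRPO these advantages then feed a PPO-style clipped policy-gradient objective, which is omitted here.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def composite_reward(length: float, quality: float, fmt: float,
                     weights: Sequence[float] = (1 / 3, 1 / 3, 1 / 3)) -> float:
    """Hypothetical composite reward: weighted sum of three component scores in [0, 1]."""
    w_len, w_q, w_fmt = weights
    return w_len * length + w_q * quality + w_fmt * fmt


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """GRPO-style advantages: normalize each reward by its group's mean and std.

    All rewards come from responses sampled for the same prompt, so the group
    mean plays the role of the baseline that PPO would get from a value model.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four hypothetical responses to one prompt, scored as (length, quality, format).
group = [(1.0, 0.8, 1.0), (0.4, 0.9, 1.0), (1.0, 0.5, 0.0), (0.7, 0.7, 1.0)]
rewards = [composite_reward(*scores) for scores in group]
advantages = group_relative_advantages(rewards)
for r, a in zip(rewards, advantages):
    print(f"reward={r:.3f}  advantage={a:+.3f}")
```

Responses that beat their group's average get positive advantages and are reinforced; the third response, which fails the format check, is pushed down even though its length score is perfect.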

👨‍💻 Dr. Yushi Bai

Dr. Yushi Bai is a researcher in natural language processing and reinforcement learning. He co-authored both LongWriter and LongWriter-Zero, focusing on new approaches to ultra-long text generation. His work emphasizes applying reinforcement learning techniques to improve the coherence and quality of generated content over extended passages.

👨‍💻 Dr. Jiajie Zhang

Dr. Jiajie Zhang is an AI researcher working on large language models and their applications. He co-authored LongWriter (Bai et al., 2024), the predecessor to LongWriter-Zero, which extended model outputs beyond 10,000 words through supervised fine-tuning on long-output data.

👩‍💻 Dr. Xin Lv

Dr. Xin Lv specializes in deep learning and its applications in natural language processing. As part of the LongWriter team, Dr. Lv worked on model performance and on generating coherent, contextually relevant long-form content.

👨‍💻 Dr. Linzhi Zheng

Dr. Linzhi Zheng is a machine learning researcher focused on language models. He co-authored LongWriter, working on model training and evaluation.

👩‍💻 Dr. Siqi Zhu

Dr. Siqi Zhu's research interests lie at the intersection of reinforcement learning and natural language generation. Dr. Zhu co-authored LongWriter, working on training strategies for coherent, engaging long-form content.

👨‍💻 Dr. Lei Hou

Dr. Lei Hou is a researcher with expertise in large-scale language models and their applications. He co-authored LongWriter, working on training processes that extend the length of text a model can generate.

👨‍💻 Dr. Yuxiao Dong

Dr. Yuxiao Dong specializes in machine learning and natural language processing. He co-authored LongWriter, which developed techniques for generating coherent, contextually appropriate long-form text.

👨‍💻 Dr. Jie Tang

Dr. Jie Tang is a researcher in artificial intelligence focused on large language models and their applications. He co-authored LongWriter, the KEG Lab's earlier work on ultra-long text generation.

👩‍💻 Dr. Juanzi Li

Dr. Juanzi Li is an expert in artificial intelligence and natural language processing. She has contributed to the LongWriter-Zero project by providing insights into model evaluation and ensuring the generation of high-quality, coherent long-form text.

These researchers, primarily from Tsinghua University's Knowledge Engineering Group (KEG Lab), are the authors of LongWriter (Bai et al., 2024). Two of them, Yushi Bai and Juanzi Li, also co-authored LongWriter-Zero with Wu, Hu, and Lee (Wu et al., 2025). LongWriter-Zero departs from its predecessor by training with reinforcement learning instead of supervised fine-tuning on synthetic long-output data, an approach that reports strong results for large language models producing coherent, contextually relevant long-form content.

🔍 Model Comparison: LongWriter-Zero vs. GPT-3, GPT-4, and GPT-4o

| Feature | 🤗 LongWriter-Zero ✍️ | 🤖 GPT-3 | 🤖 GPT-4 | 🤖 GPT-4o |
|---|---|---|---|---|
| Parameters | 32B | 175B | Not disclosed | Not disclosed |
| Training Approach | RL only (GRPO), no SFT stage | Pretraining only (no fine-tuning) | Pretraining + RLHF | Not disclosed in detail |
| Context Window | Not listed (targets 10,000+-token outputs) | 2,049 tokens | 8,192 tokens (32,768 in the GPT-4-32k variant) | 128,000 tokens |
| Multimodal Support | No | No | Yes (GPT-4 Vision) | Yes (Text, Image, Audio) |
| Output Control | Length, Quality, Format | Limited | Enhanced | Enhanced |
| Performance (WritingBench Score) | 8.69 | Not listed | Not listed | 8.16 |
| Performance (Arena-Write Elo Rating) | 1447 | Not listed | Not listed | Not listed |

Key Insights:

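- Training Approach: LongWriter-Zero applies reinforcement learning without a supervised fine-tuning stage. GPT-3 was released as a pretrained base model, and GPT-4 was fine-tuned with reinforcement learning from human feedback (RLHF) after pretraining.

- Context Length: LongWriter-Zero's 10,000+ tokens is a generation (output) length, not a context window, so it is not directly comparable to the context lengths listed for the GPT models. A long context window lets a model read long inputs but does not by itself make it write long, coherent outputs, which is the gap LongWriter-Zero targets.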

- Performance Benchmarks: In the WritingBench benchmark, LongWriter-Zero achieved a score of 8.69, outperforming GPT-4o, which scored 8.16.

- Multimodal Capabilities: GPT-4o offers multimodal support, including text, image, and audio processing, a feature not available in LongWriter-Zero.

In summary, LongWriter-Zero specializes in generating ultra-long, coherent text, with a reward design that gives explicit control over length, quality, and format, whereas GPT-4o offers broader general-purpose and multimodal capabilities.
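The Arena-Write figure in the table is an Elo rating, which is only meaningful relative to other ratings. Assuming Arena-Write uses the standard Elo formulation (a 400-point logistic scale; the benchmark's exact K-factor and update schedule are not given here), a rating gap converts into an expected score, roughly a win rate with draws counted as half. The opponent rating below is hypothetical.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating: float, expected: float, actual: float, k: float = 32.0) -> float:
    """Move a rating toward the observed result: actual is 1 (win), 0.5 (draw), or 0 (loss)."""
    return rating + k * (actual - expected)


# Hypothetical opponent rated 200 points below LongWriter-Zero's reported 1447.
p = elo_expected_score(1447, 1247)
print(f"Expected score vs. a 1247-rated model: {p:.2f}")  # prints 0.76
```

A 200-point gap therefore corresponds to winning about three out of four pairwise comparisons; equal ratings give 0.5.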

🖥️ Hardware & Software Requirements

Hardware:

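- RAM: 64 GB or more is recommended.

- Storage: approximately 20 GB for a 4-bit quantized build; the full bf16 weights of a 32B model take roughly 64 GB (32 billion parameters × 2 bytes).

- GPU: 24 GB of VRAM is sufficient only for a 4-bit quantized build. Running the bf16 weights needs roughly 64 GB of VRAM or more (typically split across several GPUs), plus extra memory for the KV cache during long generations.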
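The memory figures above follow from simple arithmetic: weight memory is parameter count times bits per parameter, and every generated token adds keys and values to the KV cache. The sketch below makes both estimates explicit. The architecture values passed to `kv_cache_gb` (64 layers, 8 key-value heads, head dimension 128) are assumptions typical of a Qwen2.5-32B-style model, so check the model's `config.json` before relying on them; activations and framework overhead are not counted.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory needed to hold the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def kv_cache_gb(n_tokens: int, n_layers: int, n_kv_heads: int, head_dim: int,
                bytes_per_value: int = 2) -> float:
    """KV-cache size for one sequence: a key and a value vector per layer, head, and token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * n_tokens / 1e9


N_PARAMS = 32e9
for label, bits in [("bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label} weights: ~{weight_memory_gb(N_PARAMS, bits):.0f} GB")

# Assumed architecture values (Qwen2.5-32B-style); verify against config.json.
kv = kv_cache_gb(32_000, n_layers=64, n_kv_heads=8, head_dim=128)
print(f"KV cache at 32,000 tokens: ~{kv:.1f} GB")
```

Under these assumptions the script prints about 64, 32, and 16 GB for the weights and about 8.4 GB for a 32,000-token KV cache, which is why a 24 GB card only works with a 4-bit build.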

Software:

- Operating System: Linux (Ubuntu preferred) or Windows.

- Python Version: Python 3.8 or higher.

- Dependencies: Install the necessary Python libraries (`accelerate` is needed only for multi-GPU loading with `device_map="auto"`):

```bash
pip install transformers torch accelerate
```
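Before downloading a 32B checkpoint, it can help to confirm the environment. The following is a small stdlib-only sketch, not an official tool: the function name `check_environment` is hypothetical, and it only checks that the packages can be found (via `importlib.util.find_spec`) without importing them, so it runs even where torch is absent.

```python
import importlib.util
import sys
from typing import Dict, Sequence, Tuple


def check_environment(min_python: Tuple[int, int] = (3, 8),
                      packages: Sequence[str] = ("transformers", "torch")) -> Dict[str, bool]:
    """Report whether the interpreter is new enough and each package is installed."""
    report = {"python_ok": sys.version_info[:2] >= min_python}
    for name in packages:
        # find_spec returns None when the package cannot be located on sys.path.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, ok in check_environment().items():
        print(f"{key}: {'OK' if ok else 'MISSING'}")
```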

⚙️ Installation & Deployment

Option 1: Hugging Face Model Hub (Recommended for Quick Start)

- Install the required libraries:

```bash
pip install transformers torch accelerate
```

- Load the model in your Python environment:

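```python
from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "THU-KEG/LongWriter-Zero-32B"
tokenizer = AutoTokenizer.from_pretrained(model_id)
# Load in the checkpoint's native precision and spread layers over available GPUs
# (device_map="auto" requires the accelerate package).
model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto", device_map="auto")

prompt = "Write a comprehensive guide about..."
# Wrap the prompt in the tokenizer's chat template, assuming the repository ships one.
messages = [{"role": "user", "content": prompt}]
text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
inputs = tokenizer(text, return_tensors="pt").to(model.device)
# max_new_tokens limits only the generated text (max_length also counts the prompt).
outputs = model.generate(**inputs, max_new_tokens=32000)
print(tokenizer.decode(outputs[0][inputs["input_ids"].shape[1]:], skip_special_tokens=True))
```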
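LongWriter-Zero is described as planning before it writes. If its completions mark that plan with `<think>...</think>` and the final text with `<answer>...</answer>` (an assumption to verify against the model card and the decoded output), a helper like the hypothetical `split_think_answer` below separates the two. It also tolerates a missing closing tag, which can happen when generation stops at the token limit.

```python
import re
from typing import Tuple


def split_think_answer(text: str) -> Tuple[str, str]:
    """Split a completion into (plan, answer), assuming <think>/<answer> tags.

    Falls back to the text after </think>, or to the whole text, when tags are absent.
    """
    think = re.search(r"<think>(.*?)</think>", text, re.DOTALL)
    # Accept an unterminated <answer> block (e.g. output cut off by max_new_tokens).
    answer = re.search(r"<answer>(.*?)(?:</answer>|$)", text, re.DOTALL)
    plan = think.group(1).strip() if think else ""
    if answer:
        body = answer.group(1)
    elif think:
        body = text[think.end():]
    else:
        body = text
    return plan, body.strip()


plan, essay = split_think_answer("<think>1. Intro 2. Body</think><answer>The guide...</answer>")
print(plan)
print(essay)
```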

Option 2: Ollama (Local Deployment)

- Install Ollama from the official Ollama website.

- Pull the LongWriter-Zero model:

```bash
ollama pull gurubot/longwriter-zero-32b
```

- Run the model locally:

```bash
ollama run gurubot/longwriter-zero-32b
```
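`ollama run` opens an interactive session. For scripted use, Ollama also serves a local REST API (by default at `http://localhost:11434`), and its `/api/generate` endpoint accepts per-request `options`. The sketch below raises `num_ctx` because Ollama's default context window is typically only a few thousand tokens, far too small for 10,000+-token outputs, and uses `num_predict` to cap the number of generated tokens. The specific values are illustrative assumptions, not tuned settings, and larger contexts need more memory.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama endpoint


def build_request(prompt: str, model: str = "gurubot/longwriter-zero-32b",
                  num_ctx: int = 32768, num_predict: int = 16000) -> dict:
    """Build a non-streaming /api/generate payload with a long context window."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a token stream
        "options": {"num_ctx": num_ctx, "num_predict": num_predict},
    }


def generate(prompt: str, **kwargs) -> str:
    """Send the request to a running Ollama server and return the generated text."""
    payload = json.dumps(build_request(prompt, **kwargs)).encode("utf-8")
    req = urllib.request.Request(OLLAMA_URL, data=payload,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

With the Ollama server running, `generate("Write a comprehensive guide about...")` blocks until the whole text is produced, which can take a long time for ultra-long outputs.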

Option 3: Quantized Models for Lower Resource Usage

- For reduced memory usage, consider using quantized versions of the model:

- GGUF Format: Available in various quantization levels (e.g., Q4_K_S, Q5_K_M).

- Usage: Refer to the Hugging Face repository for detailed instructions on loading and using these quantized models.
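When several GGUF quantization levels are offered, a rough way to choose one is to estimate each file's size from its average bits per weight and keep the highest-precision level that fits the available memory. In the sketch below, the bits-per-weight figures are approximate averages for llama.cpp quantization types, and the 4 GB allowance for KV cache and runtime buffers is an assumption; the actual file sizes listed in the repository are the better guide. `pick_quant` is a hypothetical helper.

```python
from typing import Optional

# Approximate average bits per weight for common llama.cpp quantization types (rough values).
APPROX_BPW = {"Q4_K_S": 4.6, "Q4_K_M": 4.9, "Q5_K_M": 5.7, "Q6_K": 6.6, "Q8_0": 8.5}


def pick_quant(n_params: float, budget_gb: float, overhead_gb: float = 4.0) -> Optional[str]:
    """Return the highest-precision quant whose weights plus overhead fit in budget_gb."""
    fitting = [(bpw, name) for name, bpw in APPROX_BPW.items()
               if n_params * bpw / 8 / 1e9 + overhead_gb <= budget_gb]
    return max(fitting)[1] if fitting else None


print(pick_quant(32e9, budget_gb=24))  # prints Q4_K_M
```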

🔓 Open Source Details

- License: LongWriter-Zero is open-source and freely available for both research and commercial use.

- Model Weights: Access the pre-trained model weights on the Hugging Face Model Hub.

- Documentation: Comprehensive documentation, including usage examples and deployment guides, is available on the official website.

- Community Support: Engage with the community and contribute to the project via the GitHub repository.

🔮 Future Work

While LongWriter-Zero represents a significant advancement in ultra-long text generation, several avenues remain for further enhancement:

- Adversarial and Uncertainty-Aware Reward Models: Developing more robust reward models that can handle adversarial inputs and account for uncertainties in text generation.

- Expanded Instructional Diversity: Incorporating a broader range of instructional prompts to improve the model’s adaptability across various domains.

- Enhanced Agent-Environment Interaction: Integrating deeper interactions between the model and its environment to facilitate dynamic, multi-stage composition processes.

These directions aim to refine the model’s capabilities, ensuring more coherent, contextually accurate, and diverse long-form content generation.
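To make "uncertainty-aware reward models" concrete, one common technique (not necessarily what the authors plan) scores each output with an ensemble of reward models and penalizes disagreement, using the ensemble mean minus `k` times its standard deviation. Outputs that exploit one reward model but not the others then earn less. The function name and scores below are hypothetical.

```python
from statistics import mean, pstdev
from typing import Sequence


def pessimistic_reward(scores: Sequence[float], k: float = 1.0) -> float:
    """Uncertainty-penalized reward: ensemble mean minus k times ensemble std."""
    return mean(scores) - k * pstdev(scores)


# Three hypothetical reward models scoring two outputs.
print(pessimistic_reward([0.8, 0.8, 0.8]))  # models agree: no penalty
print(pessimistic_reward([0.9, 0.5, 0.7]))  # models disagree: penalized below the mean
```

Larger `k` makes training more conservative; `k = 0` recovers the plain ensemble average.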

✅ Conclusion

LongWriter-Zero marks a significant shift in ultra-long text generation. By training with reinforcement learning alone, it avoids supervised fine-tuning's dependence on synthetic long-output data while generating coherent, high-quality content spanning up to 32,000 tokens. Its composite reward models and explicit planning before writing help the generated text maintain logical flow and thematic consistency. The model sets a strong benchmark for long-form content generation and opens new possibilities for applications in academic writing, technical documentation, and creative storytelling.

📚 References

- Wu, Y., Bai, Y., Hu, Z., Lee, R. K., & Li, J. (2025). LongWriter-Zero: Mastering Ultra-Long Text Generation via Reinforcement Learning. arXiv. Retrieved from https://arxiv.org/abs/2506.18841

- Bai, Y., Zhang, J., Lv, X., Zheng, L., Zhu, S., Hou, L., Dong, Y., Tang, J., & Li, J. (2024). LongWriter: Unleashing 10,000+ Word Generation from Long Context LLMs. arXiv. Retrieved from https://arxiv.org/abs/2408.07055

- The Moonlight. (2025). Literature Review: LongWriter-Zero: Mastering Ultra-Long Text Generation via Reinforcement Learning. Retrieved from https://www.themoonlight.io/review/longwriter-zero-mastering-ultra-long-text-generation-via-reinforcement-learning

- EmergentMind. (2025). LongWriter-Zero Model: RL-Only Ultra-Long Text Generation. Retrieved from https://www.emergentmind.com/articles/longwriter-zero-model

- The Decoder. (2025). Researchers train AI to generate long-form text using only reinforcement learning. Retrieved from https://the-decoder.com/researchers-train-ai-to-generate-long-form-text-using-only-reinforcement-learning/