🖼️ Native-Resolution Image Synthesis: Generating Any Resolution Image from a Text Prompt

🧠 Introduction

In the realm of generative modeling, the ability to synthesize high-quality images across diverse resolutions and aspect ratios has been a significant challenge. Traditional models often rely on fixed-resolution inputs, limiting their flexibility and scalability.

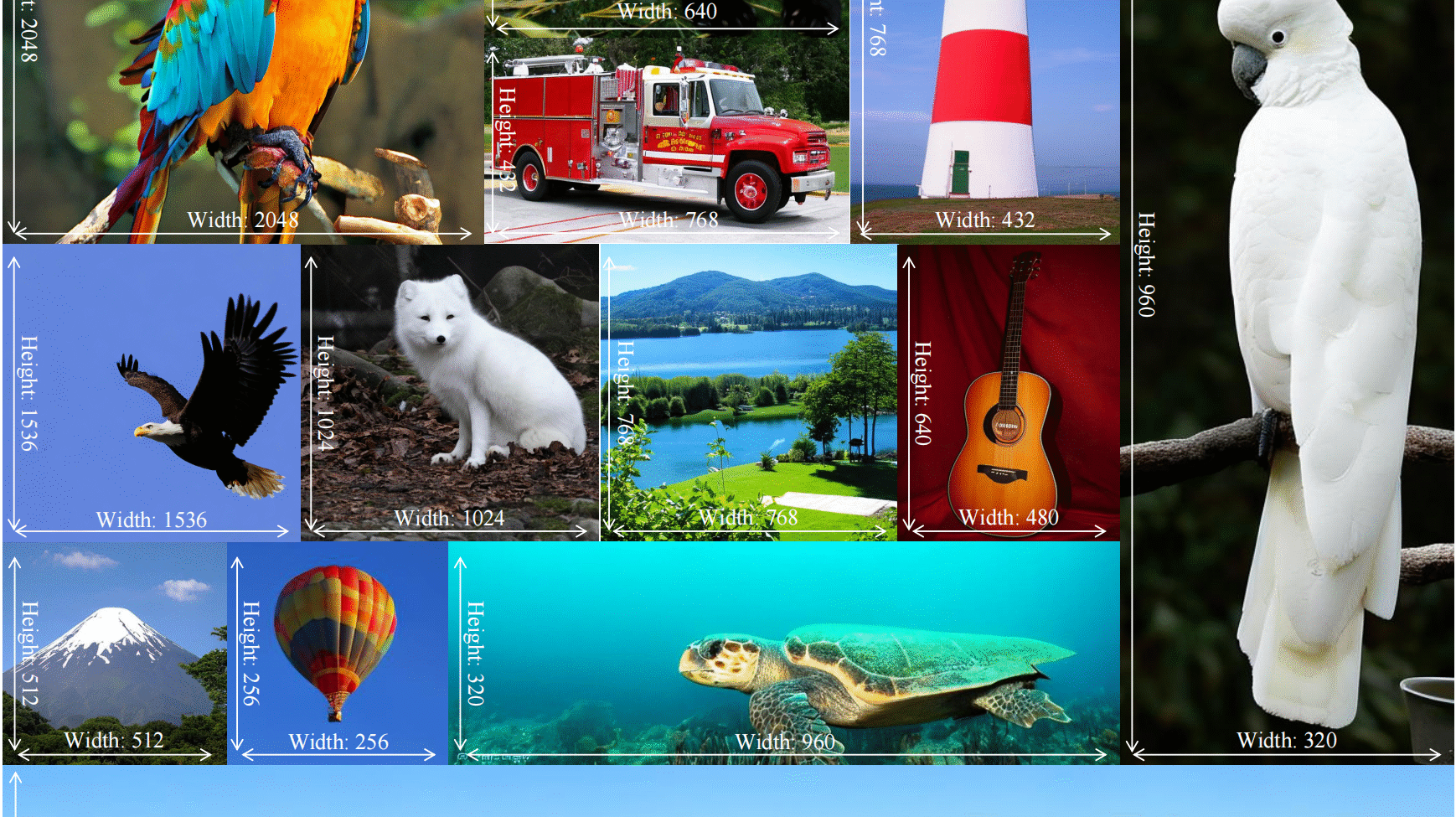

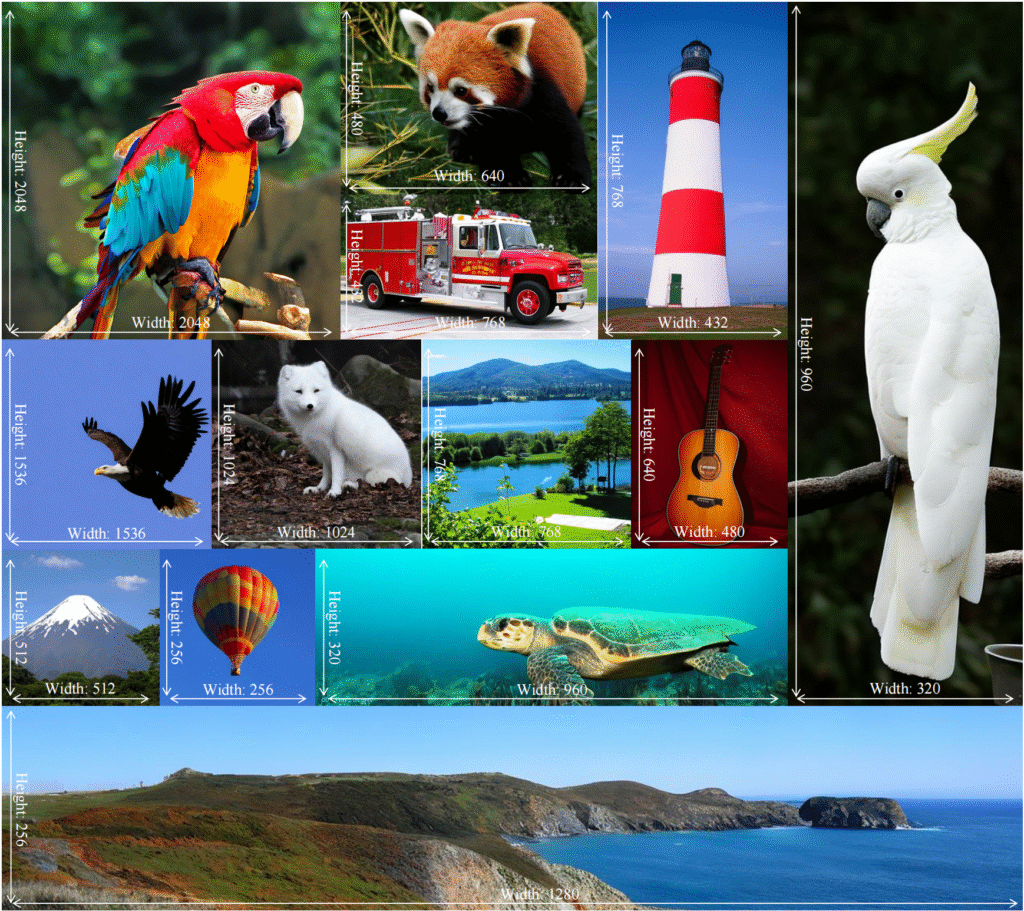

Enter the Native-resolution Diffusion Transformer (NiT), a novel architecture designed to explicitly handle varying resolutions and aspect ratios within its denoising process. By learning intrinsic visual distributions from images spanning a broad range of resolutions, NiT overcomes the constraints of fixed formats, offering a more adaptable approach to image synthesis.

In this blog, we delve into the mechanics of NiT, exploring how its design facilitates high-fidelity image generation across multiple resolutions, from $256 \times 256$ to $1024 \times 1024$ and beyond. We also examine its performance on the ImageNet benchmark, highlighting its state-of-the-art results and the implications for future generative models.

Join us as we explore the capabilities of NiT and its potential to revolutionize the field of image synthesis.

Background: Image Synthesis and Diffusion Models

Diffusion models have become a dominant approach in image synthesis, generating high-fidelity images by progressively denoising random noise into structured images. While powerful, most diffusion models are trained on images of a single fixed resolution. This constraint limits their flexibility, forcing practitioners to either train separate models for different resolutions or rely on expensive post-processing techniques.

These limitations affect not only training costs but also generalization—the ability to generate images in sizes and shapes unseen during training.

Introducing Native-resolution Diffusion Transformer (NiT)

The NiT model introduces a fresh approach: it explicitly learns to denoise images at varying resolutions and aspect ratios as part of its training. Instead of focusing on a fixed image size, NiT embraces resolution variability as a core design principle.

This allows NiT to:

- Efficiently train on multiple resolutions simultaneously

- Generalize to new, unseen resolutions and aspect ratios

- Achieve superior image quality across different sizes

In essence, NiT natively understands the concept of “image size” in its denoising process, enabling flexible and high-quality synthesis.

Technical Approach

NiT is built on a Transformer architecture optimized for diffusion-based image generation. Key innovations include:

- Resolution-aware denoising: During training, NiT receives images of different sizes and learns to denoise them within a unified framework.

- Adaptive positional encoding: The model uses a flexible positional encoding scheme that adapts to varying image dimensions.

- Efficient training pipeline: By jointly training across multiple resolutions, NiT reduces redundancy and boosts learning efficiency.

This combination results in a model capable of generating images at any resolution without needing separate training or fine-tuning steps.

Performance and Benchmarks

NiT achieves state-of-the-art results on classic benchmarks such as class-guided ImageNet generation:

| Resolution | FID Score (Lower is Better) | Notes |

|---|---|---|

| 256×256 | 2.08 | SOTA at this resolution |

| 512×512 | 1.48 | SOTA at this resolution |

| 1024×1024 | 4.52 | High-resolution generalization |

| 432×768 (custom) | 4.11 | Arbitrary aspect ratio |

Compared to traditional diffusion models, NiT’s ability to generalize without retraining at novel sizes marks a significant breakthrough.

Applications and Use Cases

NiT’s flexibility unlocks numerous practical applications:

- High-resolution digital art: Artists can generate ultra-high-res images directly from text prompts.

- Custom aspect ratio content: Create banners, posters, or wallpapers in any size without cropping or distortion.

- Efficient content generation: Media creators can quickly prototype assets in multiple sizes using a single model.

This versatility empowers creators and businesses to push creative boundaries while optimizing computational resources.

Limitations and Future Directions

While NiT sets new standards, some challenges remain:

- FID scores increase for very high resolutions (e.g., 1024×1024), indicating room for further quality improvements.

- Model size and computational demand could grow with more diverse resolution training.

Future work may focus on improving scaling behavior, reducing resource costs, and exploring NiT’s application to video or 3D content synthesis.

🛠️ Installation Guide

Getting started with Native-resolution diffusion Transformer (NiT) is straightforward. Here’s a quick guide to set up the environment and run the model:

1. Clone the Repository

git clone https://github.com/your-repo/NiT.git

cd NiT

2. Set Up the Python Environment

It’s best to create a virtual environment:

python -m venv nit-env

source nit-env/bin/activate # On Windows: nit-env\Scripts\activate

3. Install Dependencies

pip install -r requirements.txt

The requirements typically include PyTorch, transformers, and other diffusion-related libraries.

4. Download Pretrained Models

Download pretrained NiT weights for various resolutions:

bash scripts/download_weights.sh

5. Run Inference

Generate images from text prompts at any resolution:

python generate.py --prompt "A futuristic city skyline" --resolution 512 512

6. Training (Optional)

If you want to train or fine-tune NiT:

python train.py --dataset imagenet --resolutions 256 512 1024

🎯 Future Goals

NiT is a powerful step forward, but there are exciting directions ahead to further enhance native-resolution image synthesis:

- Improve High-Resolution Quality: Narrow the quality gap at ultra-high resolutions like 1024×1024 and beyond.

- Optimize Efficiency: Reduce computational requirements and training time, making NiT accessible for wider use.

- Extend to Video and 3D: Adapt the native-resolution approach to dynamic and volumetric content generation.

- Better User Controls: Introduce fine-grained control over composition, style, and semantic aspects beyond resolution.

- Open-Source and Community Expansion: Foster contributions and real-world applications by releasing tools, datasets, and tutorials.

These goals aim to push NiT from a research breakthrough into a versatile tool for creators everywhere.

Conclusion

The Native-resolution diffusion Transformer (NiT) redefines what’s possible in text-to-image generation by natively supporting varying resolutions and aspect ratios within a single model. It delivers state-of-the-art results efficiently and generalizes to new sizes without retraining. NiT opens exciting new avenues for creative AI, enabling flexible, high-quality image synthesis at any resolution.

References & Further Reading

- [Native-resolution diffusion Transformer (NiT) paper (link)]

- ImageNet dataset

- Diffusion models overview