🕶️ PlayerOne: Immersive Egocentric World Simulation from Image

You can simulate yourself from images and interact using this AI

🌐 Introduction

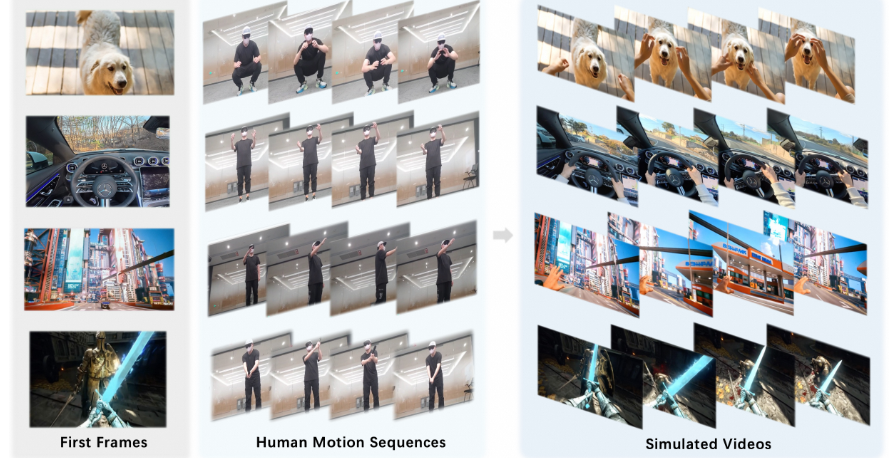

PlayerOne: Egocentric World Simulator marks a groundbreaking advancement in immersive virtual reality (VR) and computer vision. Developed by researchers from The University of Hong Kong, Alibaba DAMO Academy, and Hupan Lab, PlayerOne is the first egocentric realistic world simulator that enables users to explore dynamic environments from a first-person perspective.

🧠 What Is PlayerOne?

PlayerOne takes an egocentric scene image as input, constructs the corresponding world, and generates egocentric videos that align with the user's real-world motion as captured by an exocentric camera. The model is trained with a coarse-to-fine, two-stage pipeline:

- Coarse-Level Understanding: Pretraining on large-scale egocentric text-video pairs to grasp basic scene structures.

- Fine-Tuning: Refining the model using synchronous motion-video data from egocentric-exocentric video datasets.
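
The two stages above can be written down as a small, ordered configuration. This is a minimal sketch for illustration only: the `Stage` class, its field names, and the `conditioning` labels are assumptions made here, not structures taken from the PlayerOne code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Stage:
    """Hypothetical description of one training stage."""
    name: str          # label used in logs
    data: str          # kind of data the stage consumes
    conditioning: str  # assumed main conditioning signal for the stage


# Stage descriptions follow the article; everything else is illustrative.
PIPELINE: List[Stage] = [
    Stage("coarse_pretraining", "egocentric text-video pairs", "text"),
    Stage("fine_tuning", "synchronous motion-video pairs", "motion"),
]


def describe(pipeline: List[Stage]) -> List[str]:
    """Return one readable line per stage, in execution order."""
    return [
        f"stage {index}: {stage.name} on {stage.data} ({stage.conditioning}-conditioned)"
        for index, stage in enumerate(pipeline, start=1)
    ]


for line in describe(PIPELINE):
    print(line)
```

Keeping the stages in an ordered list makes the coarse-to-fine order explicit: the second stage starts from whatever the first one learned.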

Additionally, PlayerOne incorporates a part-disentangled motion injection scheme, allowing precise control over individual body movements, and a joint reconstruction framework that models both 4D scenes and video frames to ensure long-term consistency in video generation.

🔍 Key Features

- First-Person Perspective Simulation: Offers an immersive experience by simulating environments from the user’s viewpoint.

- Dynamic Scene Reconstruction: Accurately reconstructs 3D worlds from 2D images, enhancing realism.

- Motion Alignment: Aligns generated videos with real-world human motion, ensuring authenticity.

- Part-Level Motion Control: Enables detailed control over specific body parts, enhancing interaction.

🚀 Applications and Implications

PlayerOne’s innovative approach has significant implications for various fields:

- Virtual Reality (VR) and Augmented Reality (AR): Enhances user immersion by providing realistic simulations.

- Human-Computer Interaction (HCI): Improves interaction techniques by accurately modeling human movements.

- Robotics: Assists in training robots to understand and replicate human actions.

- Entertainment and Gaming: Offers new avenues for game design and interactive storytelling.

🎯 Purpose

PlayerOne aims to revolutionize immersive virtual experiences by enabling users to generate dynamic, egocentric videos from a single scene image. Unlike traditional VR simulations that require extensive hardware setups, PlayerOne allows for the creation of realistic 3D worlds that align with real-world human motion. This innovation opens up new possibilities in fields such as virtual reality, gaming, and digital content creation.

🌐 Online Try

While PlayerOne’s core functionalities are accessible through its GitHub repository, users can also explore its capabilities via the official project page. Here, you can view demonstrations of motion-video alignment and comparisons with previous models. These resources provide a comprehensive understanding of PlayerOne’s potential in transforming virtual simulations.

🧠 Technical Background

PlayerOne employs a sophisticated two-stage training pipeline to achieve its groundbreaking results:

- Coarse-Level Understanding: Pretraining on large-scale egocentric text-video pairs to grasp basic scene structures.

- Fine-Tuning: Refining the model using synchronous motion-video data from egocentric-exocentric video datasets.

Additionally, PlayerOne incorporates a part-disentangled motion injection scheme for precise control over individual body movements and a joint reconstruction framework that models both 4D scenes and video frames to ensure long-term consistency in video generation.
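
One simple way to supervise two outputs jointly is a weighted sum of two reconstruction errors, one for the video frames and one for the scene. The sketch below shows only that generic idea: the function names, the mean-squared-error choice, and the `scene_weight` parameter are assumptions, and the actual PlayerOne objective may differ.

```python
from typing import Sequence


def mse(prediction: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length, non-empty sequences."""
    if len(prediction) != len(target) or not prediction:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(prediction)


def joint_loss(
    frame_pred: Sequence[float],
    frame_target: Sequence[float],
    scene_pred: Sequence[float],
    scene_target: Sequence[float],
    scene_weight: float = 0.5,  # hypothetical balancing factor
) -> float:
    """Frame error plus a weighted scene error, optimized together."""
    return mse(frame_pred, frame_target) + scene_weight * mse(scene_pred, scene_target)


print(joint_loss([1.0, 2.0], [1.0, 4.0], [0.0], [2.0]))
```

Tying the two terms together means the model cannot lower the frame error while letting the scene drift, which is the intuition behind using scene reconstruction to keep long videos consistent.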

💻 Hardware Requirements

To run PlayerOne efficiently, the following hardware specifications are recommended:

- Processor (CPU): Intel Core i9-9900 or better

- Graphics Card (GPU): NVIDIA RTX 2080Ti or better

- Memory (RAM): 32GB or more

- Storage: NVMe SSD for faster data access

These specifications ensure smooth performance, especially when handling large-scale simulations and high-resolution video generation.
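
Before installing anything, it can help to see what the local machine offers. The following is a minimal sketch using only the Python standard library; the function names are made up for this example, and the check for `nvidia-smi` on the `PATH` only hints that an NVIDIA driver is installed, it does not identify the GPU model.

```python
import os
import shutil
from typing import Dict, Optional


def total_ram_gb() -> Optional[float]:
    """Total physical memory in GiB, or None where os.sysconf cannot report it."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None  # e.g. Windows, where os.sysconf is unavailable
    if pages < 0 or page_size < 0:
        return None
    return pages * page_size / 1024 ** 3


def hardware_report(path: str = ".") -> Dict[str, object]:
    """Collect a few facts to compare against the recommendations above."""
    return {
        "cpu_count": os.cpu_count(),
        "ram_gb": total_ram_gb(),
        "free_disk_gb": shutil.disk_usage(path).free / 1024 ** 3,
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
    }


for key, value in hardware_report().items():
    print(f"{key}: {value}")
```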

🛠️ Software Requirements

PlayerOne operates within a specific software environment to ensure compatibility and optimal performance:

- Operating System: Windows 10 64-bit or Linux (Ubuntu recommended)

- Programming Language: Python 3.8 or higher

- Deep Learning Frameworks:

  - PyTorch 1.10 or higher

  - TensorFlow 2.5 or higher

- Additional Libraries:

  - NumPy

  - OpenCV

  - Matplotlib

  - SciPy

- CUDA Toolkit: CUDA 11.2 or higher (for GPU acceleration)

- cuDNN: cuDNN 8.1 or higher

These software components facilitate the training and inference processes, enabling efficient computation and rendering of 3D environments.
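
A quick way to compare an environment against the list above is to check the interpreter version and see which packages can be found. This sketch uses only the standard library; the helper names are illustrative, and the import names (for example `cv2` for OpenCV) are the usual ones for these packages rather than anything specified by PlayerOne.

```python
import importlib.util
import sys
from typing import Dict, Iterable, Tuple

# Usual import names for the libraries listed above (OpenCV imports as cv2).
MODULES = ["torch", "tensorflow", "numpy", "cv2", "matplotlib", "scipy"]


def python_ok(minimum: Tuple[int, int] = (3, 8)) -> bool:
    """True if the running interpreter is at least the given version."""
    return sys.version_info[:2] >= minimum


def installed_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether it can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


print("Python 3.8+:", python_ok())
for name, found in installed_modules(MODULES).items():
    print(f"{name}: {'found' if found else 'missing'}")
```

`find_spec` only locates a module without importing it, so the check stays fast even when heavy frameworks are installed. It does not verify version numbers or CUDA support.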

🔧 Installation Steps for PlayerOne

| Step | Description |
|---|---|
| 1. Clone the Repository | Access the official GitHub repository at https://github.com/yuanpengtu/PlayerOne and clone it to your local machine. |
| 2. Set Up the Environment | Ensure you have Python 3.8 or higher installed. Create a virtual environment to manage dependencies. Install required libraries, which may include PyTorch, TensorFlow, NumPy, OpenCV, and others as specified in the repository. |
| 3. Download Pretrained Models | Obtain the pretrained models from the official sources or links provided in the repository. |
| 4. Run the Demo | Follow the instructions in the repository to run the demo, which typically involves providing an egocentric scene image and executing a script to generate the corresponding 3D world and video. |
| 5. Fine-Tuning (Optional) | If you wish to fine-tune the model on your own dataset, follow the guidelines provided in the repository for data preparation and training procedures. |
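
Steps 1 and 2 can be scripted. The sketch below only builds the commands and prints them, so nothing is cloned or created until you choose to run them. The destination folder `PlayerOne` and the environment folder `.venv` are assumptions for this example; dependency installation is left out because it depends on what the repository actually ships.

```python
import sys
from pathlib import Path
from typing import List

REPO_URL = "https://github.com/yuanpengtu/PlayerOne"


def setup_commands(dest: str = "PlayerOne", env_dir: str = ".venv") -> List[List[str]]:
    """Build the clone and virtual-environment commands without running them."""
    env_path = Path(dest) / env_dir
    return [
        ["git", "clone", REPO_URL, dest],               # step 1: clone
        [sys.executable, "-m", "venv", str(env_path)],  # step 2: create a venv
    ]


for command in setup_commands():
    print(" ".join(command))
```

To execute the steps rather than print them, each command list can be passed to `subprocess.run(command, check=True)`.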

⚖️ Comparison with Other Models

| Feature | PlayerOne | EgoAgent | Diffusion Models | WISA |
|---|---|---|---|---|
| Input | Egocentric scene image | Egocentric video frames | Text-image pairs | Structured physical information |
| Output | Egocentric 3D world and video | Predicted future states in egocentric videos | High-quality images | Text-to-video generation |
| Training Data | Egocentric text-video pairs, egocentric-exocentric video datasets | Ego4D dataset | Large-scale text-image pairs | Structured physical datasets |
| Architecture | Two-stage pipeline with part-disentangled motion injection and joint reconstruction framework | Transformer-based model | Denoising process | Decoupling method integrating physical principles |
| Performance | Demonstrates great generalization ability in precise control of varying human movements and world-consistent modeling of diverse scenarios | Achieves competitive performance in predicting future states in egocentric videos | Excels in generating high-quality images but may not capture temporal dynamics as effectively | Focuses on generating videos that comply with physical laws |
| Applications | Virtual reality, augmented reality, human-computer interaction, robotics, entertainment, and gaming | Future state prediction in egocentric videos | Image generation | Text-to-video generation |

🏁 Conclusion

PlayerOne represents a pioneering advancement in the realm of immersive virtual simulations. By enabling the generation of dynamic, egocentric videos from a single scene image, it offers a novel approach to world simulation. Its innovative two-stage training pipeline, incorporating pretraining on large-scale egocentric text-video pairs and fine-tuning on synchronous motion-video data, ensures high fidelity in motion-video alignment. The introduction of part-disentangled motion injection and a joint reconstruction framework further enhances its capability to model complex human movements and maintain scene consistency over time. Experimental results underscore its generalization ability and precise control over varying human movements, marking a significant step forward in egocentric real-world simulation.

🔮 Future Work

While PlayerOne has made substantial strides, several avenues remain for further enhancement:

- Real-Time Interaction: Integrating real-time user interactions to allow dynamic alterations in the simulated environment.

- Extended Dataset Integration: Incorporating additional diverse datasets to improve the model’s robustness and adaptability across various scenarios.

- Enhanced Motion Prediction: Developing more sophisticated algorithms for predicting complex human motions, thereby increasing the realism of the simulations.

- Cross-Domain Application: Exploring applications beyond entertainment, such as in education, training simulations, and virtual tourism, to broaden the impact of PlayerOne.

These directions aim to refine the capabilities of PlayerOne, making it a more versatile and immersive tool for various applications.

📚 References

- Tu, Y., Luo, H., Chen, X., Bai, X., Wang, F., & Zhao, H. (2025). PlayerOne: Egocentric World Simulator. arXiv. Retrieved from https://arxiv.org/abs/2506.09995

- Rodin, I., Furnari, A., Mavroeidis, D., & Farinella, G. M. (2021). Predicting the Future from First Person (Egocentric) Vision: A Survey. arXiv. Retrieved from https://arxiv.org/abs/2107.13411

- PlayerOne FAQ. (2022). Retrieved from https://mirror.xyz/playeroneworld.eth/VfRRaVYW3cfU3wZ7–uaWXsrbl9AWINMuEeJxUAHO1g

- PlayerOne: How Everyone Can Build a Metaverse. (2022). Retrieved from https://mirror.xyz/playeroneworld.eth/WcuuKuplG-LH2uWULNf-i0xXJHDndlZZiervIhAcSXs