🌟 Any-to-Bokeh: Revolutionizing Video (Add Bokeh Blur to Any Video)

🌟 Introduction

In the realm of video production, achieving a cinematic look often involves applying a bokeh effect—an aesthetic blur that isolates subjects from their backgrounds, enhancing visual appeal. Traditionally, creating such effects required specialized lenses or complex post-production techniques. However, recent advancements in artificial intelligence have introduced innovative methods to simulate this effect, making it accessible to a broader audience.

Any-to-Bokeh is a groundbreaking framework that leverages multi-plane image-guided diffusion to transform ordinary videos into cinematic masterpieces with depth-aware bokeh effects. Unlike conventional methods that rely on manual adjustments or fixed parameters, Any-to-Bokeh offers a one-step solution that intelligently applies blur based on the video’s content and depth information.

Key Features:

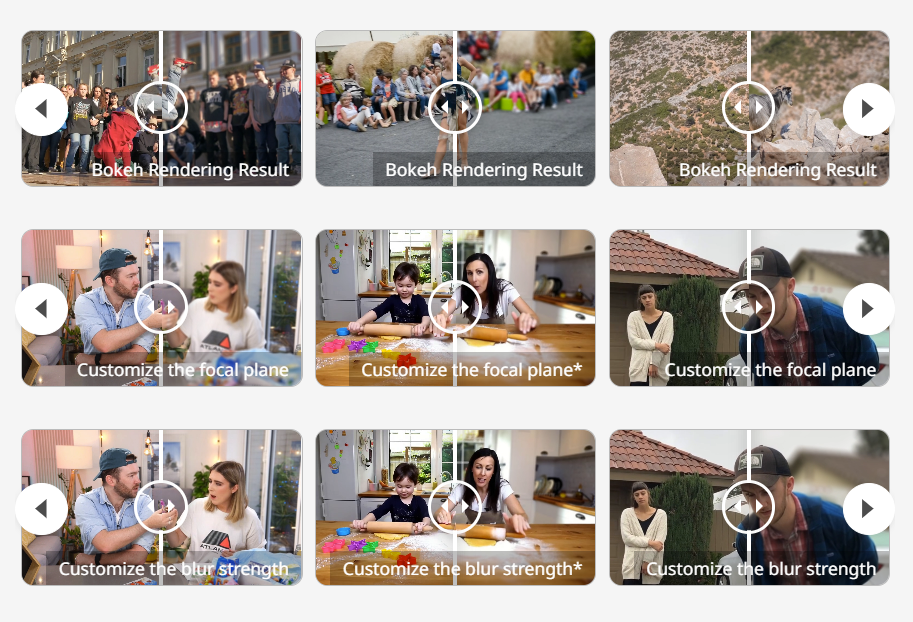

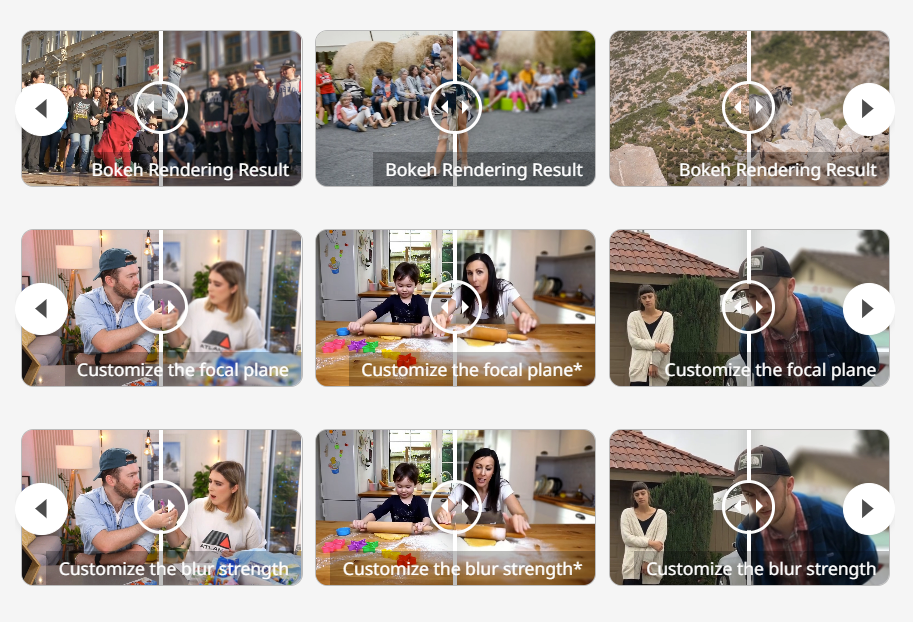

- Depth-Aware Bokeh: Utilizes disparity maps to apply blur selectively, ensuring that the background is artistically blurred while the subject remains in sharp focus.

- Temporal Consistency: Maintains consistent bokeh effects across video frames, preventing flickering and ensuring a smooth viewing experience.

- User Control: Allows users to adjust blur strength and focal plane, providing flexibility to achieve the desired aesthetic.

- High-Quality Output: Delivers high-resolution videos with realistic bokeh effects, enhancing the overall production quality.

By integrating depth estimation and semantic segmentation, Any-to-Bokeh applies a gradient blur that mimics optical physics, with styles ranging from creamy “anamorphic” bokeh to geometric “aperture-shaped” effects.
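The “optical physics” behind depth-dependent blur is commonly modeled with the thin-lens circle of confusion. Expressed in disparity (inverse depth) d, the blur radius grows roughly linearly with the distance from the focal disparity d_f, scaled by a strength factor K: r = K·|d − d_f|. The sketch below computes such a per-pixel radius map from a normalized disparity map. It is a minimal illustration under that assumption, not Any-to-Bokeh's code; the function name, the [0, 1] disparity normalization, and the optional `max_radius` clamp are hypothetical. It also shows how the two user controls map onto the math: `focal_disparity` selects the sharp plane and `strength` scales the blur.

```python
def blur_radius_map(disparity, focal_disparity, strength, max_radius=None):
    """Per-pixel blur radius (in pixels) from a 2-D normalized disparity map.

    Hypothetical helper using the thin-lens circle-of-confusion approximation
    in disparity space: radius = strength * |d - focal_disparity|.
    Pixels on the focal plane get radius 0 (sharp); blur grows linearly
    with disparity distance from that plane.
    """
    radii = []
    for row in disparity:
        out_row = []
        for d in row:
            r = strength * abs(d - focal_disparity)
            if max_radius is not None:
                r = min(r, max_radius)  # optional cap on the largest blur
            out_row.append(r)
        radii.append(out_row)
    return radii


# 2x2 toy disparity map: 1.0 = nearest, 0.0 = farthest (assumed convention).
disp = [[0.5, 0.25], [1.0, 0.0]]
radii = blur_radius_map(disp, focal_disparity=0.5, strength=20.0)
print(radii)  # [[0.0, 5.0], [10.0, 10.0]]
```

Rendering the bokeh itself would then blur each pixel with a disk (or aperture-shaped) kernel of that radius; that step is omitted here.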

This innovative approach democratizes cinematic video production, enabling creators without access to expensive equipment to produce professional-quality content.

🛠️ Technological Overview

Under the hood, Any-to-Bokeh combines an explicit depth representation with a pre-trained video diffusion model, so that a single inference step produces the bokeh-rendered video. Its main building blocks are outlined below.

🔍 Core Technologies

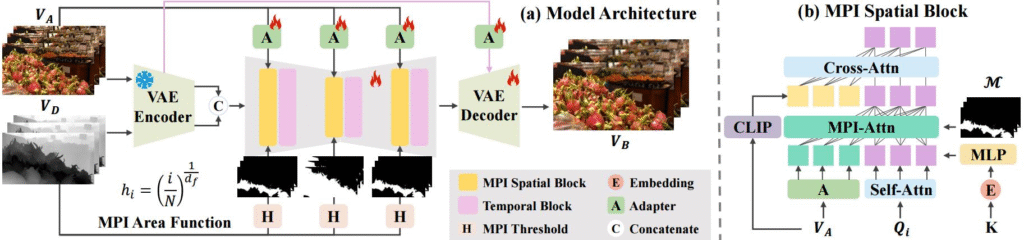

- Multi-Plane Image (MPI) Representation

The framework constructs an MPI representation through a progressively widening depth sampling function. This provides explicit geometric guidance for depth-dependent blur synthesis, ensuring that the bokeh effect is applied accurately across the different depth planes in the video.

- Depth-Aware Diffusion Model

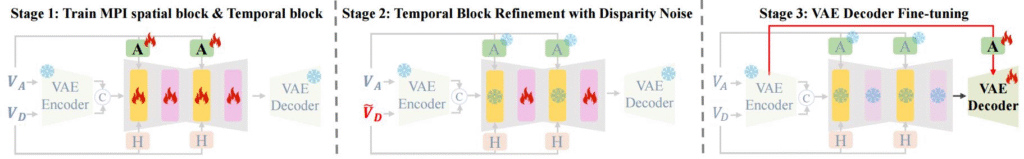

Any-to-Bokeh uses a single-step video diffusion model conditioned on MPI layers. By leveraging strong 3D priors from pre-trained models such as Stable Video Diffusion, the framework achieves realistic and consistent bokeh effects across diverse scenes.

- Progressive Training Strategy

A progressive training approach is employed to enhance temporal consistency, depth robustness, and detail preservation. This strategy ensures that the bokeh effects are not only visually appealing but also remain coherent throughout the video sequence.

- Temporal Consistency Modeling

To address flickering and unsatisfactory edge-blur transitions in video bokeh, Any-to-Bokeh incorporates temporal consistency modeling. This keeps the bokeh effect stable and smooth across frames, providing a seamless viewing experience.

- User-Controlled Parameters

The framework allows users to adjust blur strength and focal plane, providing flexibility to achieve the desired aesthetic. This level of control enables creators to fine-tune the bokeh effect to match their artistic vision.
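To make the “progressively widening depth sampling” idea concrete, here is one plausible instance. Plane positions start at the focal disparity and step outward with spacing that doubles each time, so layers are dense near the focal plane, where the sharp-to-blurred transition must be resolved finely, and sparse far from it. Each pixel is then assigned to its nearest plane, giving binary per-layer masks of the kind an MPI-conditioned model could consume. The geometric growth rule, the parameter values, and all function names are assumptions for illustration; the paper's actual sampling function may differ.

```python
def widening_planes(focal_disp, num_each_side=4, base_step=0.02, growth=2.0):
    """Hypothetical MPI plane positions in normalized disparity [0, 1].

    Starting at the focal disparity, planes are placed outward on both sides
    at cumulative offsets base_step, base_step*(1 + growth), ...
    so the gap between neighbouring planes widens away from the focal plane.
    """
    planes = {focal_disp}
    offset, step = 0.0, base_step
    for _ in range(num_each_side):
        offset += step
        for p in (focal_disp - offset, focal_disp + offset):
            if 0.0 <= p <= 1.0:  # discard planes outside the valid range
                planes.add(p)
        step *= growth
    return sorted(planes)


def assign_layers(disparity, planes):
    """Map every pixel of a 2-D disparity map to the index of its nearest plane."""
    def nearest(d):
        return min(range(len(planes)), key=lambda i: abs(planes[i] - d))
    return [[nearest(d) for d in row] for row in disparity]


def layer_masks(layer_index, num_layers):
    """One binary mask per plane: 1 where a pixel belongs to that layer, else 0."""
    return [
        [[1 if idx == k else 0 for idx in row] for row in layer_index]
        for k in range(num_layers)
    ]


planes = widening_planes(0.5)  # roughly 0.2, 0.36, 0.44, 0.48, 0.5, 0.52, ...
layers = assign_layers([[0.5, 0.0], [0.9, 0.47]], planes)
masks = layer_masks(layers, len(planes))
print(layers)  # [[4, 0], [8, 3]]
```

Every pixel lands in exactly one mask, so the masks partition the frame; a blur radius per plane (for example from the circle-of-confusion relation) could then be attached to each layer.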

⚙️ System Requirements

- Operating System: Linux or Windows

- Python Version: 3.8 or higher

- CUDA: a version compatible with your NVIDIA GPU driver, for GPU acceleration

- Hardware:

  - GPU: NVIDIA GPU with at least 8GB VRAM (e.g., RTX 3060 or higher)

  - CPU: Intel i7 or AMD Ryzen 7 (or equivalent)

  - RAM: 16GB or more

  - Storage: At least 10GB of free disk space
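Before installing, a quick preflight check against these requirements can save a failed run. The sketch below checks the Python version and free disk space with the standard library. If PyTorch is installed (assumed here to come from the repository's `requirements.txt`; verify there), it also checks whether a CUDA GPU with roughly 8GB of VRAM is visible. The thresholds mirror the list above; the script itself is hypothetical and not part of the Any-to-Bokeh repository.

```python
import shutil
import sys

# Thresholds taken from the requirements list above.
MIN_PYTHON = (3, 8)
MIN_FREE_DISK_GIB = 10
MIN_VRAM_GIB = 7.5  # cards sold as "8GB" often report slightly under 8 GiB
GIB = 1024 ** 3


def preflight(path="."):
    """Return a list of warnings; an empty list means every check passed."""
    problems = []

    if sys.version_info[:2] < MIN_PYTHON:
        problems.append(
            f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ required, "
            f"found {sys.version.split()[0]}"
        )

    free_gib = shutil.disk_usage(path).free / GIB
    if free_gib < MIN_FREE_DISK_GIB:
        problems.append(
            f"only {free_gib:.1f} GiB free at {path!r}, "
            f"{MIN_FREE_DISK_GIB} GiB recommended"
        )

    # GPU check via PyTorch (assumption: installed from requirements.txt).
    try:
        import torch
    except ImportError:
        problems.append("PyTorch not installed; GPU check skipped")
        return problems

    if not torch.cuda.is_available():
        problems.append("no CUDA-capable GPU visible to PyTorch")
    else:
        vram_gib = torch.cuda.get_device_properties(0).total_memory / GIB
        if vram_gib < MIN_VRAM_GIB:
            problems.append(
                f"GPU 0 has {vram_gib:.1f} GiB VRAM, about 8 GiB recommended"
            )
    return problems


if __name__ == "__main__":
    issues = preflight()
    for msg in issues:
        print("WARNING:", msg)
    if not issues:
        print("All checks passed.")
```

Each check only produces a warning rather than aborting, since the listed figures are recommendations rather than hard limits.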

💡 Advantages of Any-to-Bokeh

Any-to-Bokeh stands out in the realm of AI-driven video enhancement due to its unique approach and features:

- Unified Model for Video Bokeh Rendering

Unlike traditional methods that require separate processes for depth estimation and blur application, Any-to-Bokeh integrates these steps into a single model, streamlining the workflow and reducing computational overhead.

- Temporal Consistency

By leveraging implicit feature-space alignment and aggregation, Any-to-Bokeh ensures that the bokeh effect remains consistent across video frames, mitigating issues like flickering and artifacts commonly found in other models.

- Depth-Aware Rendering

The model uses multi-plane image representations to apply blur based on depth information, resulting in more natural and realistic bokeh effects than uniform blur techniques.

- User Control and Flexibility

Any-to-Bokeh allows users to adjust blur strength and focal plane, providing flexibility to achieve the desired aesthetic. This level of control enables creators to fine-tune the bokeh effect to match their artistic vision.
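Temporal consistency is easier to reason about with a number attached. A crude flicker proxy, assuming a static camera and scene, is the mean absolute intensity change between consecutive frames: a stable bokeh render scores near zero, while a render whose blur pulses from frame to frame scores high. This is not the feature-alignment mechanism Any-to-Bokeh uses, and published evaluations typically warp frames with optical flow before differencing. The sketch only illustrates what “flickering” means quantitatively; the function name and the toy grayscale frames are hypothetical.

```python
def mean_abs_frame_diff(frames):
    """Average |I_{t+1}(x) - I_t(x)| over all pixels and consecutive frame pairs.

    frames: list of equally sized 2-D lists of grayscale values in [0, 1].
    Assumes a static camera; with real motion, frames should first be aligned
    (e.g. by optical-flow warping), otherwise motion is counted as flicker.
    """
    if len(frames) < 2:
        return 0.0
    total, count = 0.0, 0
    for prev, curr in zip(frames, frames[1:]):
        for row_prev, row_curr in zip(prev, curr):
            for a, b in zip(row_prev, row_curr):
                total += abs(b - a)
                count += 1
    return total / count


stable = [[[0.2, 0.8]], [[0.2, 0.8]], [[0.2, 0.8]]]
flickering = [[[0.2, 0.8]], [[0.6, 0.4]], [[0.2, 0.8]]]
print(mean_abs_frame_diff(stable))  # 0.0
print(mean_abs_frame_diff(flickering))
```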

⚖️ Comparison with Other Models

| Feature | Any-to-Bokeh | Diff2Bokeh | SyncDiff |
|---|---|---|---|
| Unified Model | Yes | No | No |
| Temporal Consistency | High | Moderate | High |
| Depth-Aware Rendering | Yes | Yes | Yes |
| User Control | Yes | Limited | Limited |
| Computational Efficiency | High | Moderate | High |
| Real-Time Processing | No (batch mode) | No | Yes |

Note: While Diff2Bokeh and SyncDiff offer depth-aware rendering and temporal consistency, they often require separate models or processes for depth estimation and blur application, which can increase computational complexity. Additionally, these models may offer limited user control over the bokeh effect compared to Any-to-Bokeh.

🛠️ Installation Steps

To set up Any-to-Bokeh, follow these steps:

- Clone the Repository:

```bash
git clone https://github.com/vivoCameraResearch/any-to-bokeh.git
cd any-to-bokeh
```

- Set Up a Virtual Environment:

```bash
python3 -m venv venv
source venv/bin/activate  # On Windows, use venv\Scripts\activate
```

- Install Dependencies:

```bash
pip install -r requirements.txt
```

- Download Pre-trained Models:

Obtain the necessary pre-trained models and place them in the appropriate directories as specified in the repository’s documentation.

- Run the Application:

```bash
python app.py
```

For detailed instructions and troubleshooting, refer to the Any-to-Bokeh GitHub repository.


🏁 Conclusion

Any-to-Bokeh represents a significant advancement in the field of video processing, offering a one-step solution to apply depth-aware bokeh effects to arbitrary input videos. By leveraging multi-plane image representations and diffusion models, it achieves temporally consistent and realistic blur effects that were previously challenging to obtain without specialized equipment.

The framework’s ability to provide explicit control over blur strength and focal plane, combined with its efficient processing capabilities, makes it a valuable tool for content creators seeking to enhance their videos with cinematic effects. Moreover, its open-source nature encourages community contributions and further innovation in the realm of AI-driven video enhancement.

🔮 Future Work

While Any-to-Bokeh has demonstrated impressive capabilities, there is still room to extend its functionality and applicability:

- Real-Time Processing

Currently, the framework processes videos in batch mode, which may not be suitable for live applications. Adding real-time processing would enable its use in live streaming and real-time video editing scenarios.

📚 References

- Any-to-Bokeh: One-Step Video Bokeh via Multi-Plane Image Guided Diffusion

The foundational paper introducing the Any-to-Bokeh framework, detailing its methodology and applications.

Source: arXiv:2505.21593

- Any-to-Bokeh GitHub Repository

The official repository containing the implementation of the Any-to-Bokeh framework.

Source: GitHub – vivoCameraResearch/any-to-bokeh

- GBSD: Generative Bokeh with Stage Diffusion

A study on generating bokeh effects using stage diffusion models.

Source: arXiv:2306.08251

- Pix2Video: Video Editing using Image Diffusion

Research on utilizing image diffusion models for video editing tasks.

Source: arXiv:2303.12688

- Bokeh Diffusion: Defocus Blur Control in Text-to-Image Diffusion Models

An exploration of controlling defocus blur in diffusion models for image generation.

Source: arXiv:2503.08434

- Variable Aperture Bokeh Rendering via Customized Focal Plane Guidance

A paper discussing customizable bokeh rendering techniques using focal plane guidance.

Source: arXiv:2410.14400

- Rendering Natural Camera Bokeh Effect with Deep Learning

Research on rendering realistic bokeh effects using deep learning methods.

Source: PyNET-Bokeh GitHub Repository

- Bokeh Effect Rendering | Papers With Code

A curated list of papers and code related to bokeh effect rendering.

Source: Papers With Code – Bokeh Effect Rendering